Tag: inference

AWS Bolsters SageMaker with New Capabilities

Amazon Web Services unveiled a half-dozen new SageMaker services today at its re:Invent conference in Las Vegas. The cloud giant bolstered its flagship AI development tool with new capabilities for data labeling, integra Read more…

Nvidia Inference Engine Keeps BERT Latency Within a Millisecond

It’s a shame when your data scientists dial in the accuracy on a deep learning model to a very high degree, only to be forced to gut the model for inference because of resource constraints. But that will seldom be the Read more…

An Open Source Alternative to AWS SageMaker

There’s no shortage of resources and tools for developing machine learning algorithms. But when it comes to putting those algorithms into production for inference, outside of AWS’s popular SageMaker, there’s not a Read more…

Baidu Releases PaddlePaddle Upgrades

An updated release of Baidu’s deep learning framework includes a batch of new features ranging from inference capabilities for Internet of Things (IoT) applications to a natural language processing (NLP) framework for Read more…

Intel’s Sentiment Algorithm Needs Less Training Data

Intel’s AI lab has released an open-source version of a sentiment analysis algorithm designed to boost natural language processing applications that currently lack the ability to scale across different domains such as Read more…

Why CTOs Should Reconsider FPGAs

Big data analytics adoption has soared in recent years. According to a 2018 market study, use of the technology has grown from 17 percent in 2015 to 59 percent in 2018, reaching a compound annual growth rate (CAGR) of 36 Read more…

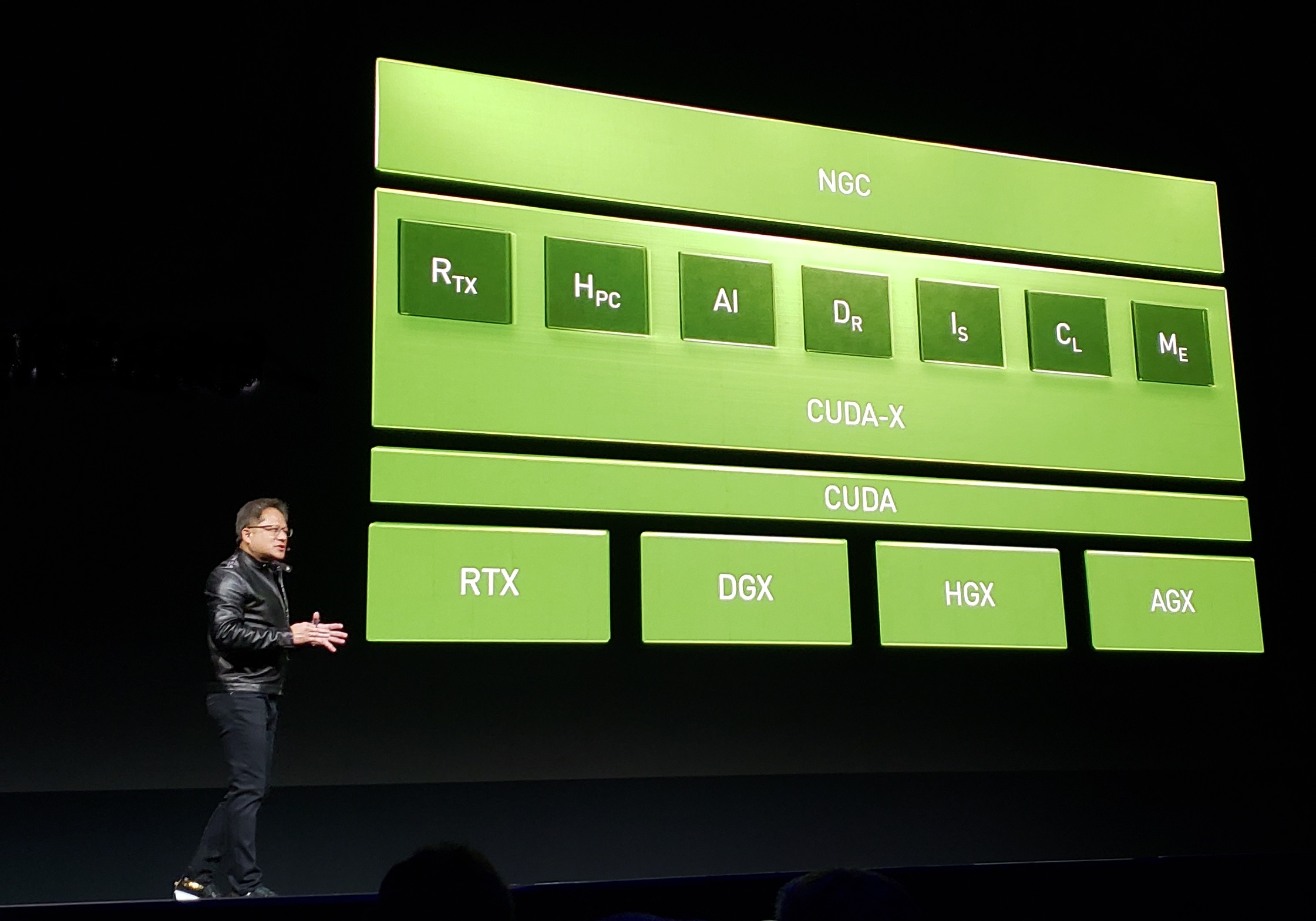

Nvidia Sees Green in Data Science Workloads

We already knew that GPUs are useful for lots of things besides making Fortnite uncomfortably realistic. All the biggest supercomputers in the world use GPUs to accelerate math, and more recently they've been used to pow Read more…

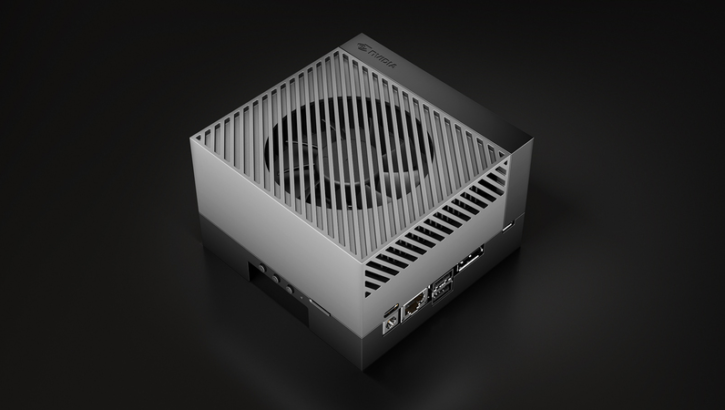

Inference Engine Aimed at AI Edge Apps

Flex Logix, the embedded FPGA specialist, has shifted gears by applying its proprietary interconnect technology to launch an inference engine that boosts neural inferencing capacity at the network edge while reducing DRA Read more…

DARPA Embraces ‘Common Sense’ Approach to AI

The Pentagon’s top research agency is focusing its considerable AI efforts on the interim stage of machine intelligence between “narrow” and “general” AI. The Defense Advanced Research Projects Agency (DARPA Read more…

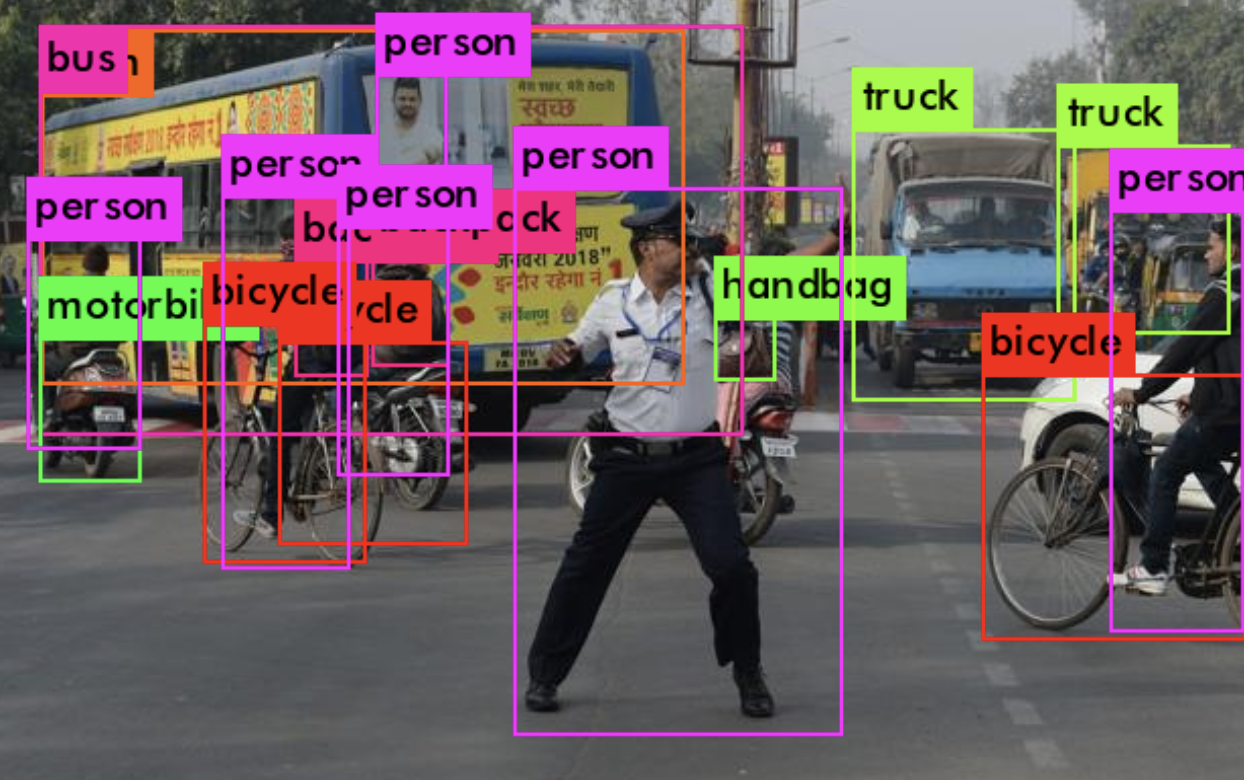

Arm ML Processors Eye Object Detection

Arm Ltd., the U.K. chip intellectual property vendor, is rolling out a machine learning platform designed to boost the functionality of devices at the network edge, beginning with an object detection capability. Arm, Read more…

Inference Emerges As Next AI Challenge

As developers flock to artificial intelligence frameworks in response to the explosion of intelligent machines, training deep learning models has emerged as a priority along with synching them to a growing list of neura Read more…

H2O Ups AI Ante Via Nvidia GPU Integration

H2O.ai, the machine-learning specialist that unveiled its "Driverless AI" platform this past summer, is upgrading the system via integration with GPU specialist Nvidia's AI development system. The Mountain View, Calif Read more…

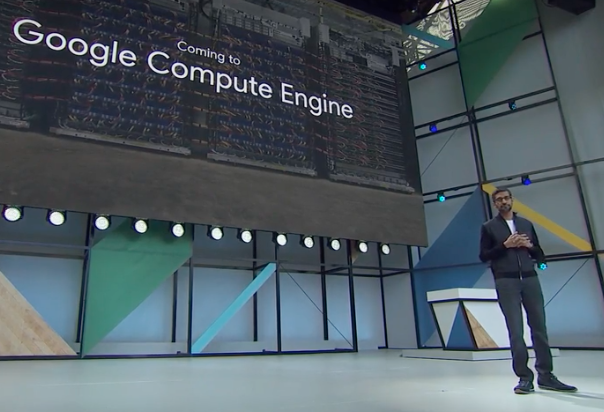

‘Cloud TPU’ Bolsters Google’s ‘AI-First’ Strategy

Google fleshed out its artificial intelligence efforts during its annual developers conference this week with the rollout of an initiative called Google.ai that serves as a clearinghouse for machine learning research, t Read more…