Why CTOs Should Reconsider FPGAs

(Giacomo-Carrabino/Shutterstock)

Big data analytics adoption has soared in recent years. According to a 2018 market study, use of the technology has grown from 17 percent in 2015 to 59 percent in 2018, reaching a compound annual growth rate (CAGR) of 36 percent. What’s driving this mainstream adoption is the massive surge of structured and unstructured data flowing from many sources: Internet of Things sensors and events, social media and web apps that track usage and behavior, and transactional and operational data from a broad spectrum of vertical segments.

As enterprises transition from reliance on traditional business intelligence (BI) to advanced analytics via machine learning and deep learning, the demands on computing infrastructure increase exponentially. These high volumes of streaming data require new levels of performance, including lower latency and higher throughput. In this competitive environment, CTOs must step up their infrastructure and use the most efficient tools and programs available in order to differentiate their enterprises. However, many CTOs are overlooking a key technology needed to do so: field-programmable gate array (FPGA)-based accelerators.

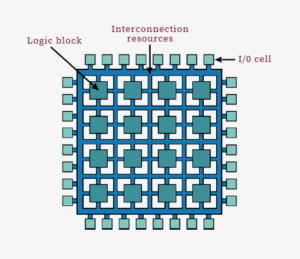

FPGAs are silicon devices that feature a large array of programmable logic blocks with reconfigurable interconnects, I/O cells and distributed memory. They allow the mapping of specific algorithmic functions to achieve extremely high performance, which is ideal for analytics programs.

Today’s real-time analytics solutions are built on software platforms that use Kafka, Flink or similar frameworks to stream the data and the Spark Streaming framework to process it across distributed nodes. As organizations add nodes to deal with the influx of data, they’re finding that performance does not scale linearly. These open source or proprietary software-only solutions cannot keep pace with the increasing computational demands and the low latencies required to support real-time analytics use cases.

To achieve better scale-out performance, a hardware solution is needed, and FPGAs are positioned as the best hardware accelerator for big data analytics workloads. The two main FPGA vendors, Intel (via its acquisition of Altera) and Xilinx, are extending their products from traditional communication and industrial markets to accelerating computing in the data center. FPGAs from either vendor provide a reconfigurable sea of hardware gates that allow enterprises to:

- Design a custom hardware accelerator with direct I/O connectivity and low latency.

- Deliver increased performance efficiencies for a single application.

- Quickly reconfigure the device as a new accelerator for a different application.

Essentially, these flexible FPGAs enable business and industry to meet the growing need for scale-out accelerators to power real-time streaming analytics that increasingly rely on machine learning and deep learning algorithms.

How FPGAs accelerate the analytics pipeline

We can map the computational steps in real-time analytics into three pipeline stages. Here’s why FPGAs are the ideal hardware accelerator device for each of these stages:

- Ingest. This stage involves processing streaming data in different formats (e.g., time series, video, images, speech) to extract the payload and prepare it for the next stage in the pipeline. FPGAs support network ports that process 10G, 40G and/or x25G of input streams in real time while simultaneously terminating the network protocols and the data protocols. This provides a unique advantage to FPGAs as they can ingest data at much higher rates and much lower latencies than CPUs or GPUs. GPUs have to rely on system NICs to ingest the data and transfer it over PCIe for processing.

- Transform. In this stage, the data is extracted, transformed and then loaded for analysis. For video streams, this stage may involve video decoding and resizing of the video frames. For text streams, this stage may involve operations like join or merge. These operations can be mapped to the FPGA and implemented inline with the streaming data to deliver high throughput at low latency. The functions can also be reprogrammed easily as the data streams change.

- Infer. This stage currently involves using deep neural networks (DNNs) to discern patterns in the data or recognize images. FPGAs have proven to be very competitive with CPUs and GPUs in implementing DNNs for inference. For example, Microsoft’s Project Brainwave has demonstrated that FPGAs are fast and flexible in implementing DNNs for artificial intelligence (AI) applications. The core matrix multiplication functions can utilize the hard multipliers in the FPGA fabric and can be customized for sparse matrices or lower precision weights. The other functions of the DNN can also be implemented efficiently in the data path.
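The three stages above can be sketched in software as a chain of streaming functions. This is only an illustrative analogy in plain Python, not FPGA code: on an FPGA each stage would be a hardware block in the data path. The frame format (a 4-byte length header followed by JSON), the field names `temp` and `load`, the weights and every function name here are assumptions made up for the example. The infer step uses integer weights to mirror the lower precision weights mentioned above.

```python
import json
from typing import Iterable, Iterator, List

# Hypothetical example: all names, fields and weights are illustrative only.

def make_frame(record: dict) -> bytes:
    """Build a test frame: 4-byte big-endian length header plus JSON payload."""
    payload = json.dumps(record).encode("utf-8")
    return len(payload).to_bytes(4, "big") + payload

def ingest(frames: Iterable[bytes]) -> Iterator[dict]:
    """Ingest: strip the length header and extract the payload."""
    for frame in frames:
        length = int.from_bytes(frame[:4], "big")
        yield json.loads(frame[4:4 + length].decode("utf-8"))

def transform(records: Iterable[dict]) -> Iterator[List[float]]:
    """Transform: pick the fields of interest and scale them to [0, 1]."""
    for rec in records:
        yield [rec["temp"] / 100.0, rec["load"] / 100.0]

def quantize(weights: List[float], scale: float) -> List[int]:
    """Map float weights to int8 values, clamping to the int8 range."""
    return [max(-128, min(127, round(w / scale))) for w in weights]

SCALE = 0.01                                # assumed quantization step
WEIGHTS_Q = quantize([0.5, -0.25], SCALE)   # assumed single-neuron weights

def infer(features: Iterable[List[float]]) -> Iterator[float]:
    """Infer: integer-weight dot product, rescaled back to a float score."""
    for x in features:
        acc = sum(wq * xi for wq, xi in zip(WEIGHTS_Q, x))
        yield acc * SCALE

def run_pipeline(frames: Iterable[bytes]) -> List[float]:
    """Chain the stages so each record flows through without buffering."""
    return list(infer(transform(ingest(frames))))

if __name__ == "__main__":
    frames = [make_frame({"temp": 80, "load": 40})]
    print(run_pipeline(frames))
```

Because each stage is a generator, a record moves through ingest, transform and infer one at a time, which loosely resembles the inline, streaming style of processing the article attributes to FPGAs.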

With FPGAs on the adoption horizon, we see the evolution of the computing infrastructure in the data center in three waves:

- CPUs. In the last decade, the first wave of computing focused on mobile rollout and was enabled by the rise of hyper-scale cloud service providers (CSPs) using general purpose x86 CPUs.

- GPUs. The second wave of computing began earlier this decade with machine learning and AI applications. This drove the adoption of GPUs to meet increasing computing needs for deep learning training.

- FPGAs. The third wave of computing has already started. Today, specialized hardware accelerators based on FPGAs are being used to support the real-time streaming and machine learning/AI needs for analytics and other applications.

Barriers and solutions to FPGA adoption

Lowering latency at high volumes often cannot be achieved with the CPU alone. FPGAs can be utilized as part of a heterogeneous CPU and FPGA platform to implement reconfigurable accelerators and deliver better performance at lower latency. Although FPGAs deliver advantages from accelerated performance to flexibility and programmability, they can be complex to program and manage efficiently. In addition, deployment of FPGAs requires integration into data center infrastructure. These barriers to deployment are now being addressed by two recent trends.

First, FPGAs are now available for use in both private and public data centers. Leading CSPs in the US and abroad (including AWS, Alibaba, Baidu and Tencent) are making FPGAs available as part of their infrastructure-as-a-service offerings. Leading original equipment manufacturers like Dell EMC, Hewlett Packard Enterprise, Fujitsu and IBM are now also shipping standard high-volume servers with FPGA PCIe cards installed.

And second, many FPGA IP providers are offering optimized FPGA libraries for integration into customers’ applications via marketplaces. This alleviates the need for application developers to acquire the expertise needed to effectively program the FPGAs. But application developers still have to integrate these libraries into their application frameworks and manage the FPGA resources.

To address this last hurdle, some independent software vendors are now offering complete vertical solutions for specific market segments. These solutions abstract the FPGA and allow enterprises to benefit from FPGA acceleration by integrating them transparently into Spark Streaming and other application frameworks.

It’s clear that these new horizons for FPGAs will drive their adoption by enterprises as managed services and usher in the third wave of computing in the data center. Now is the time for CTOs to reconsider how FPGAs can help them address the challenges of today’s analytics environment.

About the author: Prabhat K. (PK) Gupta is founder and CEO of Megh Computing, a leading provider and pioneer of real-time analytics acceleration for retail, finance and communication companies. Based in Portland, Oregon, Megh’s platform uses the Spark application framework with FPGA accelerators to enable easy configuration of FPGAs for faster processing of big data analytics and reducing computing costs. Previously he was the general manager of the Xeon+FPGA Products in the Data Center Group at Intel where he was responsible for developing the Xeon+FPGA product for accelerating cloud workloads. PK holds a doctorate in electrical engineering from the University of Rhode Island and an MBA from the Wharton School of Business.

Editor’s note: This story was updated to include Xilinx among the FPGA vendors.