How AWS Plans to Cope with GenAI’s Insatiable Desire for Compute

Companies that toyed around with generative AI this year will be looking to play for keeps in 2024 with production GenAI apps that move the business needle. Considering there aren’t enough GPUs to go around now, where will companies find enough compute to meet soaring demand? As the world’s largest data center operator, AWS is working to meet compute demand in several ways, according to Chetan Kapoor, the company’s director of product management for EC2.

Most of AWS’s plans to meet future GenAI compute demands involve its partner, GPU-maker Nvidia. When the launch of ChatGPT sparked the GenAI revolution a year ago, it spurred a run on the high-end A100 chips that are needed to train large language models (LLMs). While a shortage of A100s has made it difficult for smaller firms to obtain the GPUs, AWS has the benefit of having a special relationship with the chipmaker, which has enabled it to bring “ultra-clusters” online.

“Our partnership with Nvidia has been very, very strong,” Kapoor told Datanami at AWS’s recent re:Invent conference in Las Vegas, Nevada. “This Nvidia technology is just phenomenal. We think it’s going to be a big enabler for the upcoming foundational models that are going to power GenAI going forward. We are collaborating with Nvidia not only at the rack level, but at the cluster level.”

About three years ago, AWS launched its p4 instances, which enable users to rent access to GPUs. Since foundation models are so large, training them typically requires a large number of GPUs to be linked together. That’s where the concept of GPU ultra-clusters came from.

“We were seeing customers go from consuming tens of GPUs to hundreds to thousands, and they needed for all of these GPUs to be co-located so that they could seamlessly use them all in a single combined training job,” Kapoor said.

At re:Invent, AWS CEO Adam Selipsky and Nvidia CEO Jensen Huang announced that AWS would be building a massive new ultra-cluster composed of 16,000 Nvidia H200 GPUs and some number of AWS Trainium2 chips lashed together using AWS’s Ethernet-based Elastic Fabric Adapter (EFA) networking technology. The ultra-cluster, which will sport 65 exaflops of compute capacity, is slated to go online in one of AWS’s data centers in 2024.
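For a rough sense of scale, the two headline figures imply an average throughput per GPU. The sketch below is back-of-the-envelope arithmetic only: it assumes all 65 exaflops come from the 16,000 GPUs and ignores any contribution from the Trainium2 chips, whose count was not given. The function name is illustrative.

```python
def per_gpu_petaflops(cluster_exaflops: float, gpu_count: int) -> float:
    """Average throughput per GPU in petaflops (1 exaflop = 1,000 petaflops)."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return cluster_exaflops * 1000 / gpu_count


# Assumption: the whole 65 exaflops is attributed to the 16,000 GPUs.
print(per_gpu_petaflops(65, 16_000))
```

Under that assumption, the cluster works out to roughly 4 petaflops per GPU, at whatever numeric precision the 65-exaflop figure was quoted.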

Nvidia is going to be one of the main tenants in this new ultra-cluster for its AI modeling service, and AWS will also have access to this capacity for its various GenAI services, including Titan and Bedrock LLM offerings. At some point, regular AWS users will also get access to that ultra-cluster, or possibly additional ultra-clusters, for their own AI use cases, Kapoor said.

“That 16,000 deployment is intended to be a single cluster, but beyond that, it will probably split out into other chunks of clusters,” he said. “There might be another 8,000 that you might deploy, or a chunk of 4,000 depending on the specific geography and what customers are looking for.”

Kapoor, who is part of the senior team overseeing EC2 at AWS, said he couldn’t comment on the exact GPU capacity that would be released to regular AWS customers, but he assured this reporter that it would be substantial.

“Typically, when we buy from Nvidia, it’s not in the thousands,” he said, indicating it was higher. “It’s a pretty significant consumption from our side, when you look at what we have done on A100s and what we are doing actively on H100s, so it’s going to be a large deployment.”

However, considering the insatiable demand for GPUs, unless somehow interest in GenAI falls off a cliff, it’s clear that AWS and every other cloud provider will be oversubscribed when it comes to GPU demand versus GPU capacity. That’s one of the reasons why AWS is looking for other suppliers of AI compute capacity besides Nvidia.

“We’ll continue to be in the phase of experimenting, trying out different things,” Kapoor said. “But we always have our eyes open. If there’s new technology that somebody else is innovating, if there’s a new chip type that’s super, super applicable in a particular vertical, we’re more than happy to take a look and figure out a way to make them available in the AWS ecosystem.”

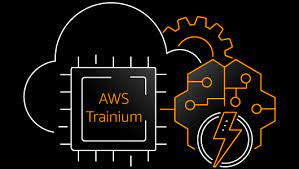

AWS is also building its own silicon. For instance, there is the aforementioned Trainium chip. At re:Invent, AWS announced that the successor chip, Trainium2, will deliver a 4x performance boost over the first-gen Trainium chip, making it useful for training the largest foundation models, with hundreds of billions or even trillions of parameters, the company said.

Trainium1 has been generally available for over a year, and is being used in many ultra-clusters, Kapoor said, the biggest of which has 30,000 nodes. Trainium2, meanwhile, will be deployed in ultra-clusters with up to 100,000 nodes, which will be used to train the next generation of massive language models.

“We are able to support training of 100 to 150 billion parameter size models very, very effectively [with Trainium1] and we have software support for training large models coming shortly in the next few months,” Kapoor said. “There’s nothing fundamental in the hardware design that limits how large of a model [it can train]. It’s mostly a scale-out problem. It’s not how big a particular chip is–it’s how many of them you can actually get operational in parallel and do a really effective job in spreading your training workload across them.”

However, Trainium2 won’t be ready to do useful work for months. In the meantime, AWS is looking to other means of freeing up compute resources for large model training. That includes the new GPU capacity block EC2 instances AWS unveiled at re:Invent.

With the capacity block model, customers essentially make reservations for GPU compute weeks or months ahead of time. The hope is that it will convince AWS customers to stop hoarding unused GPU capacity for fear they won’t get it back, Kapoor said.

“It’s common knowledge [that] there aren’t enough GPUs in the industry to go around,” he said. “So we’re seeing patterns where there are some customers that are going in, consuming capacity from us, and just holding on to it for an extended period of time because of the fear that if they release that capacity, they might not get it back when they need it.”

Large companies can afford to waste 30% of their GPU capacity as long as they’re getting good AI model training returns from the other 70%, he said. But that compute model doesn’t fly for smaller companies.
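To see why idle capacity hurts smaller firms, consider what a useful GPU-hour actually costs when part of the reserved capacity sits unused. The sketch below is illustrative arithmetic only; the hourly rate is a hypothetical placeholder, not an AWS price.

```python
def effective_cost_per_useful_hour(hourly_rate: float, utilization: float) -> float:
    """Cost of one productive GPU-hour when only `utilization` of paid hours are used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


# Hypothetical rate of $10 per GPU-hour with 70% of capacity doing useful work.
rate = 10.0
cost = effective_cost_per_useful_hour(rate, 0.70)
print(f"${cost:.2f} per useful GPU-hour ({cost / rate - 1:.0%} premium)")
```

At 70% utilization, each productive hour costs about 43% more than the list rate, a premium that a large company may absorb but a smaller one may not.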

“This was a solution that is geared to kind of solve that problem where small to medium enterprises have a predictable way of acquiring capacity and they can actually plan around it,” he said. “So if a team is working on a new machine learning model and they’re like, OK, we’re going to be ready in two weeks to actually go all out and do a large training run, I want to go and make sure that I have the capacity for it to have that confidence that that capacity will be available.”

AWS isn’t wedded to Nvidia, and has purchased AI training chips from Intel too. Customers can get access to Intel Gaudi accelerators via the EC2 DL1 instances, which offer eight Gaudi accelerators with 32 GB of high bandwidth memory (HBM) per accelerator, 768 GB of system memory, second-gen Intel Xeon Scalable processors, 400 Gbps of networking throughput, and 4 TB of local NVMe storage, according to AWS’s DL1 webpage.

Kapoor also said AWS is open to GPU innovation from AMD. Last week, that chipmaker launched the much-anticipated MI300X GPU, which offers some performance advantages over the H100 and upcoming H200 GPUs from Nvidia.

“It’s definitely on the radar,” Kapoor said of AMD in general in late November, more than a week before the MI300X launch. “The thing to be mindful of is it’s not just the silicon performance. It’s also software compatibility and how easy to use it is for developers.”

In this GenAI mad dash, some customers are sensitive to how quickly they can iterate and are more than willing to spend more money if it means they can get to market quicker, Kapoor said.

“But if there are alternative solutions that actually give them that ability to innovate quickly while saving 30%, 40%, they will certainly take that,” he said. “I think there’s a decent amount of work for everybody else outside of Nvidia to sharpen up their software capabilities and make sure they’re making it super, super easy for customers to migrate from what they’re doing today to a particular platform and vice versa.”

But training AI models is only half the battle. Running the AI model in production also requires large amounts of compute, usually of the GPU variety. There are some indications that the amount of compute required by inference workloads actually exceeds the compute demand on the initial training run. As GenAI workloads come online, that makes the current GPU squeeze even worse.

“Even for inference, you actually need accelerated compute, like GPUs or Inferentia or Trainiums in the back,” Kapoor said. “If you’re interacting with a chatbot and you send it a message and you have to wait seconds for it to correspond back, it’s just a terrible user experience. No matter how accurate or good of a response that it is, if it takes too long for it to respond back, you’re going to lose your customer.”

Kapoor noted that Nvidia is working on a new GPU architecture that will offer better inference capacity. The introduction of a new 8-bit floating point data type, FP8, will also ease the capacity crunch. AWS is also looking at FP8, he said.
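One reason a narrower data type eases the crunch is simple memory arithmetic: fewer bytes per parameter means fewer accelerators are needed just to hold a model’s weights. The sketch below is a rough illustration, assuming a 150-billion-parameter model (the upper end of the range Kapoor cites for Trainium1) and counting weights only, not activations, optimizer state, or caches. The names are illustrative.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "fp8": 1.0}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: a 150-billion-parameter model; weights only.
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(150e9, dtype):.0f} GB")
```

Going from 16-bit to 8-bit weights halves the footprint in this simplified view, from about 300 GB to about 150 GB.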

“When we are innovating on building custom chips, we are very, very keenly aware of the training as well as inference requirements, so if somebody is actually deploying these models in production, we want to make sure we run them as effectively from a power and a compute standpoint,” Kapoor said. “We have a few customers that are actually in production [with GenAI], and they have been in production for several months now. But the vast majority of enterprises are still in the process of kind of figuring out how to take advantage of these GenAI capabilities.”

As companies get closer to going into production with GenAI, they will factor in those inferencing costs and look for ways to optimize the models, Kapoor said. For instance, there are distillation techniques that can shrink the compute demands for inference workloads relative to training, he said. Quantization is another method that will be called upon to make GenAI make economic sense. But we’re not there yet.

“What we’re seeing right now is just a lot of eagerness for people to get a solution out in the market,” he said. “Folks haven’t gotten to a point, or are not prioritizing cost reduction and economic optimization at this particular point in time. They’re just being like, okay, what is this technology capable of doing? How can I use it to reinvent or provide a better experience for my developers or customers? And then yes, they are mindful that at some point, if this really takes off and it’s really impactful from a business standpoint, they’ll have to come back in and start to optimize cost.”
