Mantium Lowers the Barrier to Using Large Language Models

(Ryzhi/Shutterstock)

Large language models like GPT-3 are bringing powerful AI capabilities to organizations around the world, but putting them into production in a secure and responsible manner can be difficult. Now a company called Mantium is launching a service to simplify the deployment and on-going management of large language models in the cloud.

There are a variety of applications that organizations are looking to build with large language models, such as BERT, which was open sourced by Google Research in 2018; OpenAI’s GPT-3, which debuted in 2020; and Megatron-Turing Natural Language Generation (MT-NLG), which Microsoft and Nvidia unveiled last month.

This list includes items like customer service chatbots, search engines, and automated text summarization and generation. For each of these applications, the main attraction of large language models is the capability to mimic humans with uncanny accuracy. Out of the box, these models–which have been pre-trained on huge corpuses of data using large fleets of GPUs over a course of months–are remarkably accurate. And with training on custom data sets for specific use cases, they get even better.

The natural language processing (NLP) and NLG capabilities unleashed by these new models have spurred a flood of text-based AI. COVID-19 helped to accelerate the shift away from human customer service reps to digital ones, and organizations in the medical, legal, and financial fields are finding practical ways to put these new AI powers to use understanding the human experience. There has also been talk about large language models bringing us closer to artificial general intelligence (AGI), although most agree we’re not there yet.

There’s a lot of potential upside to this new deep learning technology, but there are some big hurdles to overcome if they’re going to be deployed in production, says Ryan Sevey, CEO and Co-Founder of Mantium, which offers a service that automates processes involved in building and managing large language models on OpenAI, Eleuther, AI21, and Cohere.

“The first one is, even if you are a software developer, there are a number of security go-live requirements,” Sevey says. “The last time I checked, these are applicable if what you’re creating is going to be shared with more than five people.”

Because of the potential for abuse, companies that offer large language models as a service require their customer to have logging and monitoring in place. “You must demonstrate that you have rate limiting, input output validation, and just a whole slew of other things,” Sevey says. “When you combine them together, we’re talking about many, many hours of work for a software developer, if not weeks of work.”

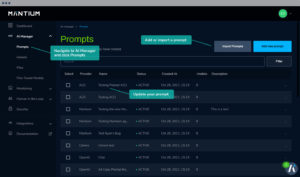

Mantium’s service handles many of these requirements for its customers. It’s service provides security controls, logging, monitoring, as well as a “human-in-the-loop” workflow that customers can plug into their application.

“So we make getting through that security checklist, or that security go-live process, a breeze,” Sevey tells Datanami. “The other thing within Mantium is you literally click a button that says ‘deploy.’ We spin up SPA, or a single-page application, that has your prompt embedded into it, and now you can just share that out with your friends. So we don’t have to waste time trying to set up an environment and then figuring all the DNS and all that. It’s just, here you go.”

The idea is to enable folks with a minimum of technical skills begin to play around with large language models and see how they can fit them into their workflows and applications. No data science skills are required to use Mantium (the company taps into the models already developed and run by OpenAI, Eleuther, AI21, and Cohere). But now customers don’t need traditional software development skills (let alone data science skills) to deploy them, either.

“There’s a lot of people out there, specifically within these large language model communities, who aren’t programmers, and so expecting them to learn how to code this to share their creation I think is a is certainly an obstacle that we want to help remove,” Sevey says.

Last week, Mantium announced that it raised a $12.75 million seed round. Sevey says the money will be used to scale up the Columbus, Ohio company, including hiring more developers and engineers around the world (it currently has around 30 employees, with plans to scale to 50). Mantium already has employees in nine countries, and is hoping to bring large language models easier to people who don’t speak English or Mandarin, which are the two most common languages for these models, Sevey says.

In addition to handling the security, logging, and monitoring functions, Mantium also provides a way for customers to train their model with custom data sets. It’s all part of the “good, better, best” progression of services, Sevey says.

“So ‘good’ is you just use a large language model out of the box. You’re just using OpenAI out of the box, and you’re getting pretty good results,” he says. “Now a better approach is, in the OpenAI world, we have files. They have a files endpoint and that supports thousands of examples. So we allow you to upload the file. We do all that using our interface, and then you’re getting a different endpoint that you’re now hitting that’s tied to the file and that typically gives way better results than just using the default thing out of the box.”

To get the best results requires fine-tuning the model and training it with custom data sets. “Fine tuning would be even more examples,” Sevey says. “That’s more exhaustive. That’s typically where a data science team is going to get engaged. You’ll feed it a whole bunch of labeled data as a JSON list. And yes, Mantium does support our users throughout that journey.”

Most customers will show up with a bunch of labeled training data in a CSV file, but that doesn’t cut it, Sevey says. It typically needs to be in the JSON format. (There should be open source tools available on the Internet that do that conversion automatically, but Sevey’s team couldn’t find them. “We searched for them and did not really find anything on our own,” he says. “But who knows. There’s probably something somewhere

Once customers have signed up with OpenAI, Eleuther, AI21, or Cohere (HuggingFace will be next on the list), they register their API with Mantium, and they’re off and running. Mantium is not currently charging for its service (it’s in “GA beta” at the moment), and as customers push inference workload to the large language model service providers, they will be billed directly by them.

The company has a number of customers that are serving langauge models in production. Currently, Sevey is more interested in hashing out its platform than making money. Once it has nailed down any loose ends and customer satisfaction is high, it will start charging for its service.

“We’ll figure out pricing later on. That’s not really something that we’re super sensitive out today,” he says. “We just want to make sure that our users are successful and seeing value out of large language models.”

Related Items:

An All-Volunteer Deep Learning Army

One Model to Rule Them All: Transformer Networks Usher in AI 2.0, Forrester Says

OpenAI’s GPT-3 Language Generator Is Impressive, but Don’t Hold Your Breath for Skynet