Spark 3.0 to Get Native GPU Acceleration

NVIDIA today announced that it’s working with Apache Spark’s open source community to bring native GPU acceleration to the next version of the big data processing framework. With Spark version 3.0, which is due out next month, organizations will be able to speed up all of their Spark workloads, from ETL jobs to machine learning training, without making wholesale changes to their code.

The company says Spark users will be able to be train their machine learning models on the same Spark cluster where they are running extract, transform, and load (ETL) jobs to prepare the data for processing. NVIDIA claims this is a first for Spark, which it says is used by 500,000 data scientists and data engineers around the world.

Running Spark workloads on GPUS simplifies the data pipeline and accelerates the machine learning lifecycle, according to Nvidia CEO Jensen Huang.

“Customers are interacting with your model constantly. You’re collecting more data. You want to use that data, the data that you’re using to refine and update your model,” Huang said in a conference call with yesterday. “And once you update your model, you want to release it back into the server. That loop is happening all the time…And so instead of having a whole bunch of Volta GPU servers, a bunch of T4 GPU servers, and CPU servers, all of that could can now be unified into one server, Ampere server.”

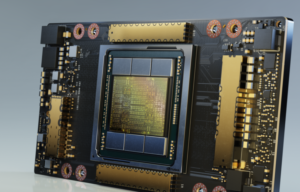

Ampere is the latest GPU architecture, which NVIDIA also announced today. The company had been planning to make its big announcements at the GPU Technology Conference (GTC) in March, but cancelled the in-person event due to the coronavirus outbreak. Huang’s keynote address traditionally is the biggest draw at GTC and how NVIDIA makes its big announcements. NVIDIA today released Huang’s “kitchen keynote,” which you can watch here.

NVIDIA also unveiled the A100, which is the first GPU to use the Ampere architecture. The A100 is deigned on a 7 nanometer process, features 54 million transistors, and can deliver up to 5 petaflops of processing power. The chip, which is available now, is intended to be used for AI and scientific computing workloads, in addition to processing graphics in cloud-scale platforms.

Of course, Spark users won’t need an A100-equipped server to be able to take advantage of the native GPU acceleration in Apache Spark 3.0. Users should be able to take advantage of the GPU acceleration using existing GPUs in their data centers or cloud providers.

This isn’t the first time that NVIDIA and the Spark community have worked together. In 2018, the Spark community worked with NVIDIA to support RAPIDS, its open source data science framework based on the NVIDIA CUDA programming model. Databricks, the cloud company founded by the creators of Apache Spark, adopted RAPI

DS and integrated it into its Spark-based data services.

NVIDIA is developing a new RAPIDS Accelerator for Spark 3.0 that intercepts and accelerates ETL pipelines by dramatically improving the performance of Spark SQL and DataFrame operations, the company said.

But the RAPIDS work is just one of the ways that Spark is getting “transparent GPU acceleration,” according to NVIDIA. There is also a modification being made to core Spark components, including the Catalyst query optimizer, “which is what the RAPIDS Accelerator plugs into to accelerate SQL and DataFrame operators,” the company said on this website. “When the query plan is executed, those operators can then be run on GPUs within the Spark cluster.”

NVIDIA is also developing a new Spark shuffle implementation “that optimizes the data transfer between Spark processes,” the company said. “This shuffle implementation is built upon GPU-accelerated communication libraries, including UCX, RDMA, and NCCL.”

Lastly, Spark 3.0 will feature a GPU-aware scheduler that sees GPUs as first-class resources in a Spark cluster, along with CPUs and system memory. NVIDIA said its engineers have contributed to this “major Spark enhancement,” and that it will work in various Spark implementations, including standalone Spark and Spark running on YARN (which is Hadoop’s traditional scheduler) and Kubernetes.

In Spark 3.0, you can now have a single pipeline, from data ingest to data preparation to model training. Data preparation operations are now GPU-accelerated, and data science infrastructure is consolidated and simplified.

Adobe is one of the first companies to partake of the new GPU acceleration in Spark 3.0. According to Adobe, it achieved a 7x performance boost and a 90% cost savings in one test of its Adobe Experience Cloud, running in Databricks’ environment.

“We’re seeing significantly faster performance with NVIDIA-accelerated Spark 3.0 compared to running Spark on CPUs,” William Yan, a senior director of machine learning at Adobe, stated in a press release. “With these game-changing GPU performance gains, entirely new possibilities open up for enhancing AI-driven features in our full suite of Adobe Experience Cloud apps.”

Related Items:

A Decade Later, Apache Spark Still Going Strong

RAPIDS Momentum Builds with Analytics, Cloud Backing