Is Hadoop Officially Dead?


The merger of Cloudera and Hortonworks was applauded by many people in the big data community, and even Wall Street liked the news initially. But as the confetti from the party clears, some are asking tough questions, like whether the merger signals the death of Hadoop as a viable computer platform moving forward. The answer is probably not. Here’s why.

The October 3 announcement that Hortonworks will join forces with its arch-rival Cloudera to create a single company with about $730 million in annual revenue, 2,500 customers, and a $5.2 billion market valuation took a lot of people by surprise.

“My first reaction was I think it’s good news for people like us,” says Splice Machine CEO Monte Zweben, who had a front-row seat to the first dot-com boom and bust. “We have seen a tremendous opportunity that we didn’t see two years ago to operationalize all of the data lakes that those two companies and others have successfully deployed.”

“It was a smart move,” Jay Kreps, the CEO of Confluent and co-creator of Apache Kafka, told ZDNet. “These were two companies competing on the same product, which makes the competition more fierce, ironically.”

“I think it’s all good,” says Kunal Agarwal, the CEO of Unravel Data. “I feel both of these companies putting their technology profiles together, instead of trying to beat each other and create two of everything, they can now focus on providing the right machine learning tool, providing the right IoT platform, providing the right AI tooling.”

But not all the comments have been positive. “I am weary about whether the new Cloudera (or even Cloudera and Hortonworks individually) will grow as quickly as its management team and investors expect, which is why I am staying away for now,” Virginia Backaitis, a freelance tech reporter, wrote in a Seeking Alpha piece.

Bloomberg Opinion columnist Shira Ovide was no less derisive, calling the merger of the two perpetually unprofitable tech providers “a seafaring union of two underwater companies.”

“It’s the Sears-K-Mart merger,” Teradata COO Oliver Ratzesberger told Datanami this week at the vendor’s user conference. “It’s the only way for them to potentially survive this. Hadoop in itself has become irrelevant.”

Mathew Lodge, Anaconda’s senior vice president of products and marketing, pointed out in a story on VentureBeat how the center of the big data universe has shifted away from Hadoop to the cloud, where storing data in object storage systems like Amazon’s S3, Microsoft Azure Blob Storage, and Google Cloud Storage is five times cheaper than storing it on HDFS.

“The leading cloud companies don’t run large Hadoop/Spark clusters from Cloudera and Hortonworks – they run distributed cloud-scale databases and applications on top of container infrastructure,” Lodge wrote. “It’s time for the Hadoop and Spark world to move with the times.”

Making Hadoop More Cloud-Like

Indeed, the Apache Hadoop community has been responding to the threat posed by the public cloud vendors, as well as by nimble startups like Databricks and Snowflake (which saw a $450 million investment by venture capitalists last week), by adopting some of the technologies that make big data analytics in the cloud so much cheaper and easier: object storage and container technology.

With the launch of Hadoop version 3 earlier this year, customers were given the option to use erasure coding, the data protection technique used by object storage systems like S3, which can cut raw storage requirements roughly in half compared with HDFS’s default three-way replication. The launch of Hadoop 3.1 will bring greater support for Docker in YARN. Both Cloudera and Hortonworks were working toward supporting Kubernetes with their Hadoop distributions before the announcement, something that MapR already supports with its converged data platform, which is based in part on Hadoop.
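The storage savings attributed to erasure coding come down to simple arithmetic. The sketch below assumes Hadoop 3's Reed-Solomon RS(6,3) layout (six data cells plus three parity cells per stripe) and compares it with three-way replication; the function names are hypothetical, and the model ignores real-world effects such as small files and partial stripes.

```python
def replication_raw(logical: float, replicas: int = 3) -> float:
    """Raw capacity consumed when every block is stored `replicas` times."""
    return logical * replicas


def erasure_coded_raw(logical: float, data_units: int = 6, parity_units: int = 3) -> float:
    """Raw capacity for an RS(data, parity) layout (hypothetical helper).

    Each stripe of `data_units` cells carries `parity_units` extra parity
    cells, so raw usage is logical * (data + parity) / data.
    """
    return logical * (data_units + parity_units) / data_units


def overhead_pct(raw: float, logical: float) -> float:
    """Extra capacity beyond the logical data size, as a percentage."""
    return (raw - logical) / logical * 100


if __name__ == "__main__":
    logical_tb = 100.0  # assumed example: 100 TB of logical data
    rep = replication_raw(logical_tb)
    ec = erasure_coded_raw(logical_tb)
    print(f"3x replication: {rep:.0f} TB raw, {overhead_pct(rep, logical_tb):.0f}% overhead")
    print(f"RS(6,3):        {ec:.0f} TB raw, {overhead_pct(ec, logical_tb):.0f}% overhead")
    print(f"Raw storage saved: {(1 - ec / rep) * 100:.0f}%")
```

Under these assumptions, 100 TB of data needs 300 TB of raw disk with replication but 150 TB with RS(6,3), which is where the "half" figure comes from. The trade-off is extra CPU and network work for encoding and reconstruction, though an RS(6,3) stripe can still be rebuilt after losing any three of its nine cells.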

But the Hadoop community has a ton of work to do before it catches up. Last month, Mike Olson, Cloudera’s Chief Strategy Officer, told Datanami that the community would need 12 to 24 months to develop Kubernetes support in the open source Apache Hadoop project.

“YARN is good at long running batch job scheduling but as a general purpose resource management framework for clusters, it was never well-designed,” Olson said. “Kubernetes is going to step in and take over a whole bunch of that.” As for the on-prem object store piece, Cloudera is not actively developing that, and instead is waiting for the market to shake out and a winner to emerge.

It raises the question: Will Hadoop still be Hadoop when YARN is replaced with Kubernetes and HDFS is replaced with whatever S3-compatible object storage system emerges as the winner? If you consider Hadoop to be a loose collection of 40 open source projects (HBase, Spark, Hive, Impala, Kafka, Flink, MapReduce, Presto, Drill, Pig, Kudu, and so on), then maybe the question is moot.

From a practical standpoint, there’s just no way that customers are suddenly going to turn off the millions of Hadoop nodes that have been deployed over the years because the two biggest Hadoop distributors are consolidating. For the thousands of companies that have built Hadoop data lakes, the focus will remain the same: Figuring out how to get value out of all that data.

While classic Hadoop may be a legacy technology, the community still has an incentive to adapt it to emerging requirements, just as IBM has done with its mainframe platforms. The question is whether it can catch up fast enough to keep the installed base growing.

Simplifying Hadoop

Ever since the first MapReduce program went online more than a decade ago, developers have bemoaned the complexity of Hadoop. Even companies as big as Facebook have struggled to use Hadoop effectively, particularly when it comes to having the low-level Java programming skills required to get information out of Hadoop in a timely fashion.

The trend since then has been to abstract away that complexity, but the Hadoop community did not make progress fast enough to prevent the cloud vendors from building market share with simpler offerings, even if those offerings meant giving up some configurability and accepting more vendor lock-in in exchange for turning raw big data sets into useful information quickly.

“I think it spells a transformation of Hadoop,” says Splice Machine’s Zweben. “There’s going to be increasingly number of engines out there that software providers use, but I just don’t see, in the long run, the average enterprise operating it…. It’s just too hard for the Global 2000 to do with the heavy lifting of Hadoop.”

Now that Cloudera and Hortonworks engineers will be working together, they’ll be better equipped to tackle the challenge of building a system that can work on premises, in the cloud, and in a hybrid configuration, says Unravel Data’s Agarwal. “That’s a huge project that will still require a ton of these engineers’ time to sit down and make it a successful platform on top of Kubernetes,” he says. “There’s so much development yet to be done.”

If there’s a light at the end of the tunnel, it’s this: If Cloudera (as the new company will be known) remakes Hadoop into a hybrid, containerized platform that runs atop Kubernetes and can store data in any S3-compatible object store, then it may yet achieve some of its goals and claim a share of the market, which IDC pegs as a $65 billion opportunity.

“I think they have one trump card that cloud guys don’t, which is they have this hybrid strategy,” Agarwal says of Cloudera. “From our personal experience, working with these Fortune 1000 companies, they don’t [want to] go [to] the cloud directly. They want this hybrid strategy. So I think that’s going to be a path forward to have something of value for these customers.”

From Disillusionment to Productivity

From its earliest days, Hadoop was basically synonymous with big data. If you had a big data problem, then the answer was Hadoop.

Of course, that was wrong, and many people said so (from Mike Stonebraker’s comments about Google engineers “laughing in their beer” to TIBCO’s CTO pleading with people to “stop chasing yellow elephants”), but that was largely Hadoop’s marketing message for many years.

It’s human nature to look for silver bullets to complex problems, says Teradata chief technology officer Stephen Brobst. “People want to believe that new technology is going to solve all their problems,” the legendary technologist said during his keynote address Tuesday at the company’s user conference. “It’s going to do everything for you. It’s going to bring you coffee in the morning.”

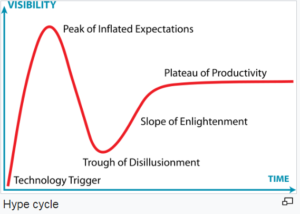

Hadoop was once the most overhyped technology, and today that title belongs to AI. “The problem when you have these overinflated expectations is there’s no choice but to fail,” Brobst says. “What happens is, when the expectations are set inappropriately, you’re going to crash to the trough of disillusionment.”

“We see this happening to Hadoop right now,” Brobst continues. “Hadoop is in this trough of disillusionment. ‘Throw it all away! It’s not working!’ It’s not working because expectations were that it was going to do everything. And you had no opportunity except to fail with that expectation.”

While people have struggled with Hadoop, that doesn’t mean Hadoop has no value, Brobst says. Rather, it means that organizations and users need to reset their expectations and ask themselves what it’s good for.

“So Hadoop and big data will come out of the trough of disillusionment where Gartner has placed it right now, and it will come into the plateau of productivity,” he says. “And it will be not a big data strategy but a data strategy….It will be part of an ecosystem. It will not be the solution to all problems.”
