Amazon Bedrock: New Suite of Generative AI Tools Unveiled by AWS

(Michael Vi/Shutterstock)

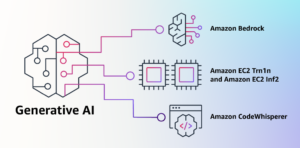

AWS has entered the red-hot realm of generative AI with a new suite of generative AI development tools. The cornerstone is Amazon Bedrock, a service for building generative AI applications on pre-trained foundation models (FMs). Through an API, Bedrock offers models from AI startups such as AI21 Labs, Anthropic, and Stability AI, as well as Amazon's own Titan family of FMs.

Bedrock offers serverless integration with AWS tools and capabilities, enabling customers to find the right model for their needs, customize it with their data, and deploy it without managing costly infrastructure. Amazon states that the infrastructure supporting the Bedrock service will employ a mix of Amazon’s proprietary AI chips (AWS Trainium and AWS Inferentia) and GPUs from Nvidia.

AWS is positioning Bedrock as a way to democratize FMs, because training these large models can be prohibitively expensive for many companies. Starting from pre-trained models lets businesses build custom applications using their own data. AWS says customization with Bedrock is easy: fine-tuning a model for a specific task requires only a few labeled examples.

Swami Sivasubramanian, VP of database, analytics, and machine learning at AWS, wrote about this "democratizing approach to generative AI" in a blog post announcing the new tools: "We work to take these technologies out of the realm of research and experiments and extend their availability far beyond a handful of startups and large, well-funded tech companies. That's why today I'm excited to announce several new innovations that will make it easy and practical for our customers to use generative AI in their businesses."

Amazon's Titan FMs include a large language model for text generation tasks and an embeddings model for building personalized recommendation and search applications. The models have built-in safeguards to mitigate harmful content, including filters for violent or hateful speech.

Models provided by startups include the Jurassic-2 family of LLMs from AI21 Labs, which can generate text in French, Spanish, Italian, German, Portuguese, and Dutch. Claude, from OpenAI competitor Anthropic, is also part of Bedrock and can be used for conversational tasks. Stability AI's text-to-image model, Stable Diffusion, lets customers generate images, art, logos, and designs.

AWS also announced the general availability of CodeWhisperer, an AI coding companion similar to GitHub's Copilot, and made it free for individual developers with no usage restrictions. A new CodeWhisperer Professional tier for business users adds features such as single sign-on through AWS Identity and Access Management (IAM) integration. CodeWhisperer generates code in real time, supports multiple programming languages, and can be accessed from various IDEs. Supported languages include SQL, Go, Rust, C, and C++, among others.

Additionally, two new Amazon Elastic Compute Cloud (EC2) instance types have reached general availability. The first, Trn1n, is powered by Trainium and offers 1600 Gbps of network bandwidth and 20% higher performance than Trn1 instances. The second, Inf2, is powered by Inferentia2. AWS says Inf2 instances are optimized specifically for large-scale generative AI applications with models containing hundreds of billions of parameters, delivering up to 4x higher throughput and up to 10x lower latency than the prior generation of Inferentia-based instances. AWS claims this speed-up translates into 40% better inference price performance.

Bedrock is available now in limited preview. One customer is Coda, maker of a collaborative document management platform: “As a longtime happy AWS customer, we’re excited about how Amazon Bedrock can bring quality, scalability, and performance to Coda AI. Since all our data is already on AWS, we are able to quickly incorporate generative AI using Bedrock, with all the security and privacy we need to protect our data built-in. With over tens of thousands of teams running on Coda, including large teams like Uber, the New York Times, and Square, reliability and scalability are really important,” said Shishir Mehrotra, co-founder and CEO of Coda, in Sivasubramanian’s blog announcement.
