Hate Hadoop? Then You’re Doing It Wrong

(mw2st/Shutterstock)

There’s been a lot of Monday morning quarterbacking saying Hadoop never made sense in the first place and that it has met its demise. Comments ranging from “Hadoop is slow for small, ad hoc jobs” to “Hadoop is dead and Spark is the victor” are now commonplace.

The frenzy around Hadoop’s deficiencies is almost as fierce as the initial hype around how powerful and disruptive it was. However, while it’s understandable that people have come up against difficulties deploying Hadoop, that doesn’t mean the negative chatter is true.

Out of Sight

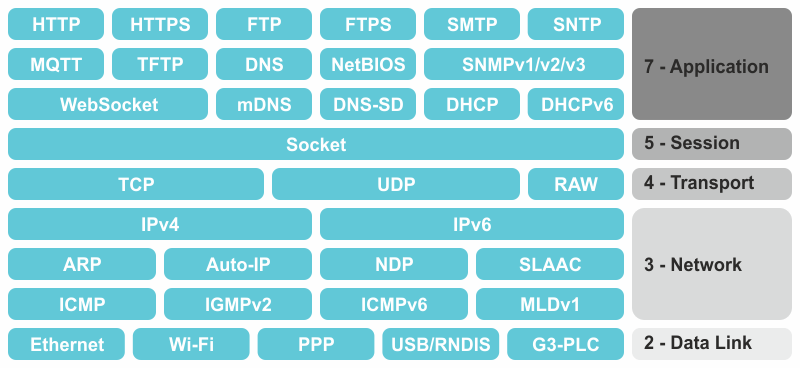

TCP/IP powers the Internet, your email, your apps and more. But chances are you don’t hear about it. When you request a ride sharing service, stream media or surf the Internet, you’re benefitting from its power.

For the most part, we all rely on TCP/IP on a daily basis, yet have no interest in configuring it and no need to. We don’t spend time on our Macs typing commands like ifconfig to see how our WiFi adapter is configured to get online.

The complexity of the TCP/IP stack is mostly invisible to us now, as Hadoop’s complexity will eventually be.

In the 1990s, TCP/IP was sold as a product, and adoption was somewhat tepid. Eventually, TCP/IP got built into operating systems and, perhaps paradoxically, that’s when it conquered all. It became a universal standard, and at the same time it disappeared from plain sight.

Hadoop Is Infrastructure

Similarly, Hadoop is the TCP/IP of the Big Data world. It’s the infrastructure that delivers huge benefits. But that benefit is greatly diluted when the infrastructure is exposed. Hadoop has been marketed like a Web browser, but it’s much more like TCP/IP.

If you’re working with Hadoop directly, you’re doing it wrong. If you’re typing “Hadoop” and a bunch of parameters at the command line, you’ve got it all backwards. Do you want to configure and run everything yourself, or do you just want to work with your data and let analytics software handle Hadoop on the back end?

Most people would choose the latter, but the Big Data industry has often directed customers to the former. The industry did it with Hadoop before, and it’s doing it with Spark and numerous machine-learning tools now.

It’s a case of the technologist tail wagging the business user dog, and that never ends well.

Dev Tools Aren’t Biz Tools

It’s not that the industry has been totally oblivious to this problem. Some vendors have tried to up their tooling game and smooth out Hadoop’s rough edges. Open source projects with names like Hue, Jupyter, Zeppelin and Ambari have cropped up, aiming to get Hadoop practitioners off the command line.

Getting Hadoop users off the command line doesn’t necessarily mean they’re more productive

But therein lies the problem. We need tools for business users, not Hadoop practitioners. Hue is great for running and tracking Hadoop execution jobs or for writing queries in SQL or other languages. Jupyter and Zeppelin are great for writing and running code against Spark, in data science-friendly languages like R and Python, and even rendering the data visualizations that code produces.

The problem is these tools don’t get rid of command line tasks; they just make people more efficient at doing them. Getting people physically off the command line may be helpful, but having them do the same stuff, even if it’s easier, doesn’t really change the equation.

There’s a balancing act here. To do Big Data analytics right, you shouldn’t have to use the engine – Hadoop in this case – directly, but you still want its full power. To make that happen, you need an analytics tool that tames the technology, without dismissing it or shooing it away.

Find that middle ground and you’ll be on the right track.
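As a rough illustration of that middle ground, here is a minimal Python sketch. Every name in it (`Engine`, `InMemoryEngine`, `AnalyticsTool`, `total_by`) is hypothetical and invented for this example; it is not the API of Hadoop, Spark or any analytics product. The toy in-memory engine merely stands in for a real cluster, to show that the person asking the question never touches engine parameters.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


class Engine(Protocol):
    """Hypothetical back-end interface; stands in for Hadoop, Spark, etc."""

    def run(self, rows: list[dict], group_by: str, measure: str) -> dict[str, float]:
        ...


class InMemoryEngine:
    """Toy stand-in for a real cluster engine (an assumption for illustration)."""

    def run(self, rows: list[dict], group_by: str, measure: str) -> dict[str, float]:
        # Sum the measure per group; a real engine would distribute this work.
        totals: dict[str, float] = {}
        for row in rows:
            key = row[group_by]
            totals[key] = totals.get(key, 0.0) + row[measure]
        return totals


@dataclass
class AnalyticsTool:
    """What the business user sees: a question about data, no engine settings."""

    engine: Engine

    def total_by(self, rows: list[dict], group_by: str, measure: str) -> dict[str, float]:
        # The tool decides how to drive the engine; the caller never does.
        return self.engine.run(rows, group_by, measure)


sales = [
    {"region": "east", "revenue": 120.0},
    {"region": "west", "revenue": 80.0},
    {"region": "east", "revenue": 30.0},
]

tool = AnalyticsTool(engine=InMemoryEngine())
result = tool.total_by(sales, group_by="region", measure="revenue")
print(result)
```

In a design like this, swapping the toy engine for one backed by a cluster would change nothing in the last three lines, which is the point: the power stays, and the plumbing stays out of sight.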

The Path Forward for Hadoop

Hadoop isn’t dead, nor is it the problem. Hadoop is an extremely powerful, critical technology. But it’s also infrastructure. It never should have been the poster child for Big Data. Hadoop (and Spark, for that matter) is technology that should be embedded in other technologies and products. That way those products can, in turn, leverage its power without exposing its complexity.

Hadoop is no more dead than TCP/IP. The problem is how people have used it, not what it does. If you want to do big data analytics right, then exploit its power behind the scenes, where Hadoop belongs. If you do it that way, Hadoop will be resurrected, not by magic, but by common sense.

But you probably won’t even notice. And if that’s the case, then you’re doing it right.

About the author: Andrew Brust is Senior Director, Market Strategy and Intelligence at Datameer, liaising between the Marketing, Product and Product Management teams, and the big data analytics community. He writes a blog for ZDNet called “Big on Data” (zdnet.com/blog/big-data), is an advisor to NYTECH, the New York Technology Council, serves as Microsoft Regional Director and MVP, and writes the Redmond Review column for VisualStudioMagazine.com.

Related Items:

Hadoop Has Failed Us, Tech Experts Say

Hadoop at Strata: Not Exactly ‘Failure,’ But It Is Complicated

Charting a Course Out of the Big Data Doldrums
