Forget the Algorithms and Start Cleaning Your Data

The idea that the combination of predictive algorithms and big data will change the world is a tempting one. And it may end up being true. But for right now, the industry is facing a reality check when it comes to big data analytics. Instead of focusing on what algorithms to use, your big data success depends more on how well you cleaned, integrated, and transformed your data.

The dirty little secret of big data analytics is all the work that goes into prepping and cleaning the data before it can be analyzed. You may have the sharpest data scientists on the planet writing the most advanced algorithms the universe has ever seen, but it won’t amount to a hill of beans if your data set is dirty, incomplete, or flawed. That’s why up to 80 percent of the time and effort in big data analytic projects is spent on cleaning, integrating, and transforming the data.

As the data scientist heading up Cloudera’s Oryx project to create a new machine learning framework, Sean Owen lives and breathes algorithms. But even Owen wonders if the industry’s fascination with algorithms has gone a bit too far.

“It’s interesting that a lot of the conversation amongst these startups seems to be who’s got more algorithms, who’s got the faster algorithms, who’s got the better accuracy on a given data set,” he tells Datanami. “These are all important in some context, but I think some of these are missing the more basic needs out there.”

“It’s interesting that a lot of the conversation amongst these startups seems to be who’s got more algorithms, who’s got the faster algorithms, who’s got the better accuracy on a given data set,” he tells Datanami. “These are all important in some context, but I think some of these are missing the more basic needs out there.”

Instead of focusing on algorithms, people ought to be focused on data. “Everybody basically has the same algorithms,” he says. “I have a hard believing there’s a fundamentally different new algorithm that’s going to come out and change the world…..Really, the differentiators from one company to the next is data–who has more data, who has better data. More better data plus a dumb algorithm is going to beat a really good algorithm on bad data.”

| Cloudera’s Sean Owen | |

The folks at RapidMiner have a similar viewpoint. The German-born advanced analytics software provider that Gartner considers a credible threat to the dominance of SAS and IBM‘s SPSS in this field has about 1,500 algorithms in its product, which it claims is the most of any company in its field.

But only about 250 of RapidMiner’s algorithms are aimed at what we might consider “the good stuff”—analyzing data in useful and creative ways. The rest of the algorithms are there to help ease the burden of integrating, cleaning, and transforming the data prior to analysis.

“Algorithms are one thing, but the more important thing is usually how you’re transforming the data and preparing the data before it goes into a predictive model,” Ingo Mierswa, co-founder and CEO of RapidMiner, tells Datanami. “In my 15 years working in this field, I’ve found that it’s much more about finding the right way of preparing the data and making the right variations and explaining those models than the actual algorithms.”

Even the best algorithm will only move the needle a little bit, Mierswa says. “And the big breakthroughs, when it comes to solving a certain problem like churn or predictive maintenance or whatever the business problem is–it’s more of how you are including certain data sets and how are you transforming the data set in a way so it covers more information for the actual predictive algorithm,” he says.

Hadoop analytics vendor Datameer has discovered the same thing about algorithms. “What we’re finding is people are spending 80 percent of time just in getting that data ready, because the data is so raw, because there’s so much of it, and it’s in so many formats,” Datameer’s senior director of product marketing Karen Hsu tells Datanami. “The data is a mess and you have to clean it up quickly. The first step is finding where the problem in your data are, and that’s where profiling comes in.”

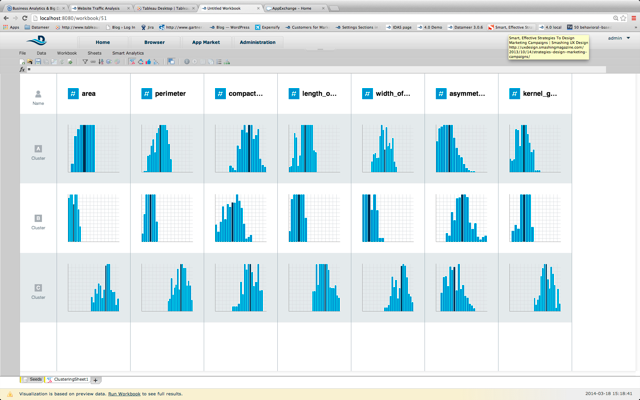

Today the company officially unveiled a new visual data profiling function in its version 4.0 release that allows analysts to see where their data might be a little out of whack before running it through one of four general algorithms Datameer provides, including clustering, column dependencies, decision trees, and recommendations. In addition to predictive analytics (which it unveiled last June), the company’s soup-to-nuts analytics stack covers data ingestion and visualization.

|

|

| Datameer 4.0 gives users visual clues about the quality of their data | |

The new data profiling function gives users a visual representation of their data set from Datameer’s Excel-like interface. The software uses “visual checkpoints” to communicate information about the data to the user, including data type and counts, and various statistical measure, including maximum, minimum, cardinality, means, and average. The software enables the user to change the automatically make a change, and then inspect the data again before moving onto actual analysis using algorithms.

Hsu says the data profiling function gives customers some of what Trifacta and Paxata offer in their automated data transformation tool sets, but does so within a larger suite that also includes data integration, analytics, and visualization layers. “We’re finding customers don’t want to have 50 tools they have to work with,” she says. “A business analyst wants one environment that they can use for everything.”

By the way, RadidMiner this week announced that it has officially moved its headquarters from Germany to the Cambridge area of Massachusetts. Besides the proximity to top universities, other big data startups, and venture firms, just having a physical presence on U.S. soil will help the company with American prospects, Mierswa says.

Related Items:

SAS and IBM King of Analytics Hill, But for How Long?

Datameer Reels in $19M as Venture Funds Move Up the Hadoop Stack