Bare Metal or the Cloud, That is the Question…

ROI is one of the big hurdles that organizations face when deciding to jump into the Hadoop big data lake. One of the big questions at the outset of a deployment is whether to “roll your own” on bare metal, do something in between, or jump full-bore into the cloud. Each of these options comes with its own set of concerns and challenges.

One of the main questions that IT faces when considering a Hadoop deployment is whether to run it in house or off-premise, in the cloud. Traditionally, on-premise bare metal clusters have been a popular deployment option for Hadoop, with a view to avoiding I/O overhead in virtualized environments. Not so fast, says Accenture Technology Labs, which argues that its cloud benchmarks show a comparable price/performance ratio between the two options, putting them on near-equal footing. A recent study gives the details.

Organizations have four models to choose from when deciding on a Hadoop deployment, explained Michael Wendt, a developer with the Data Insights R&D group at Accenture, during the recent Hadoop Summit. These options range from a fully custom bare metal deployment to Hadoop as a service in the cloud:

- On-Premise Full Custom – The “bare metal” commodity hardware is purchased by the organization, which installs the software and has complete customization and control of the deployment from start to finish.

- Hadoop Appliance – A Hadoop appliance is installed in the datacenter. The organization can quickly jumpstart its analytics without the hassles (or specific benefits) of the initial custom technical configuration.

- Hadoop Hosting – The traditional ISP model, in which Hadoop is hosted and configured for the organization by the service provider.

- Hadoop-as-a-Service – This is Hadoop on demand with instant, virtualized clusters, usually under a pay-per-use consumption model.

While the two options in the middle have their own set of pros and cons, Wendt explained that for their study, they set out to determine total cost of ownership between the far ends of the spectrum: bare metal vs. Hadoop in the cloud. The study, he explains, compared the price-performance between the two modes, and then matched the total cost of ownership (TCO) between the two. Once this was done, they combined that data with their own Accenture Data Platform Benchmark, which he says is a set of real-world applications that they’ve used in their internal research and studies.

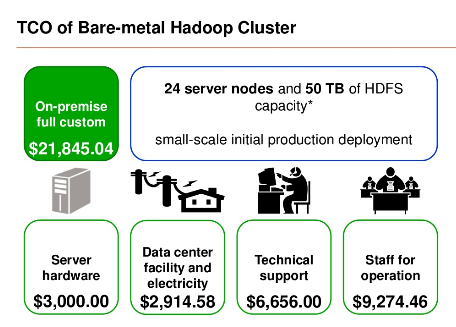

The first step, explained Wendt, was to determine the TCO for the bare metal cluster. To do this, the Accenture Labs team took a small scale, initial production deployment which they modeled on 24 server nodes with 50 TB of HDFS capacity using a replication factor of three.
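
As a rough illustration of what that sizing implies, the sketch below works through the storage arithmetic. It assumes the 50 TB figure refers to usable HDFS capacity after replication, which the article does not state explicitly; the function names are made up for this example.

```python
# Storage arithmetic for the modeled cluster (illustrative only).
# Assumption: 50 TB is usable HDFS capacity, i.e. what remains after
# every block has been stored three times.
NODES = 24
USABLE_TB = 50
REPLICATION_FACTOR = 3


def raw_capacity_tb(usable_tb, replication):
    """Raw disk needed to hold usable_tb of data at the given replication."""
    return usable_tb * replication


def per_node_tb(raw_tb, nodes):
    """Raw disk each node must provide if storage is spread evenly."""
    return raw_tb / nodes


raw_tb = raw_capacity_tb(USABLE_TB, REPLICATION_FACTOR)
print(f"raw capacity: {raw_tb} TB, per node: {per_node_tb(raw_tb, NODES)} TB")
```

Under that assumption, the cluster would need 150 TB of raw disk, or 6.25 TB per node, before allowing for temporary and intermediate data.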

To determine TCO, they evaluated the costs involved with the buying and maintaining of the aforementioned server hardware, the costs involved with the datacenter (including electricity for powering and cooling the servers), technical support, and the in-house people that are dedicated to the operation and management of the cluster. They came up with a total cost of $21,845.04 per month for their TCO budget for the bare metal deployment.

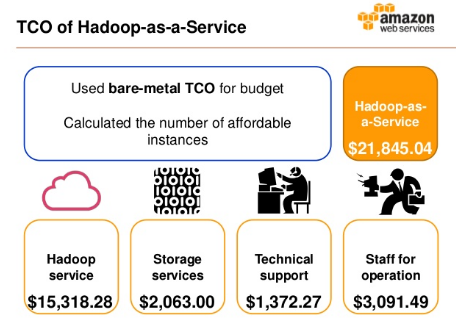

The group then took that budget and applied it to Hadoop-as-a-service, essentially using it as a fixed budget, and then calculating the number of affordable instances on AWS. After subtracting costs associated with storage, technical support, and staff, they were left with a total budget for Hadoop instances on Amazon.
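
The fixed-budget step can be sketched as follows. Only the $21,845.04 monthly budget comes from the study; the storage, support, and staff amounts, the hourly instance rate, and the 730-hour month are hypothetical placeholders chosen for illustration, not Accenture's or Amazon's figures.

```python
import math

# From the article: the bare metal TCO reused as the monthly cloud budget.
MONTHLY_BUDGET = 21845.04

# Hypothetical non-instance costs (placeholders, not figures from the study).
FIXED_COSTS = {"storage": 2000.00, "support": 1500.00, "staff": 8000.00}


def affordable_instances(monthly_budget, fixed_costs, hourly_rate,
                         hours_per_month=730):
    """Whole instances that fit in the budget left after fixed costs.

    hourly_rate is the assumed all-in price of one instance per hour;
    hours_per_month assumes the cluster runs around the clock.
    """
    remaining = monthly_budget - sum(fixed_costs.values())
    if remaining <= 0:
        return 0
    return math.floor(remaining / (hourly_rate * hours_per_month))


# Hypothetical rate of $0.60 per instance-hour.
print(affordable_instances(MONTHLY_BUDGET, FIXED_COSTS, hourly_rate=0.60))
```

Repeating the calculation with each instance type's rate gives the cluster size that the same budget buys under each option.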

They had to narrow the field in this process, as there are 14 different Amazon EMR instance types with 3 different pricing models, giving a total of 42 different combinations (let no one say Hadoop is altogether easy in the cloud). For their benchmarks, Accenture Labs settled on three instance types to represent different workload levels: standard extra-large (m1.xlarge), high-memory quadruple extra-large (m2.4xlarge), and cluster compute eight extra-large (cc2.8xlarge). (Note: more details about their decision making can be found in the white paper.) They then ran each of these instance types using three different cluster configurations: on-demand instances (ODI), reserved instances (RI), and reserved + spot instances (RI + SI).
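
To make the size of the reduced test matrix concrete, here is a small sketch that enumerates the instance type and configuration pairings named above. The instance and configuration labels come from the article; the variable and function names are illustrative.

```python
from itertools import product

# Instance types and cluster configurations named in the article.
INSTANCE_TYPES = ["m1.xlarge", "m2.4xlarge", "cc2.8xlarge"]
CONFIGURATIONS = ["ODI", "RI", "RI + SI"]

# Full space cited in the article: 14 EMR instance types x 3 pricing models.
FULL_COMBINATIONS = 14 * 3


def benchmark_matrix(instance_types, configurations):
    """Every (instance type, configuration) pairing to benchmark."""
    return list(product(instance_types, configurations))


matrix = benchmark_matrix(INSTANCE_TYPES, CONFIGURATIONS)
for instance_type, configuration in matrix:
    print(f"{instance_type:12s} {configuration}")
print(f"{len(matrix)} of {FULL_COMBINATIONS} possible combinations tested")
```

Each pairing is then run against every workload in the benchmark suite.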

Once they had the deployments set up with Amazon, as well as a bare metal system housed by MetaScale, they tested the systems using their internally developed Accenture Data Platform Benchmark, a suite which they say “comprises multiple real-world Hadoop MapReduce applications.” These applications include log management, customer preference prediction, and text analytics, with workloads around sessionization, recommendation engine, and document clustering.

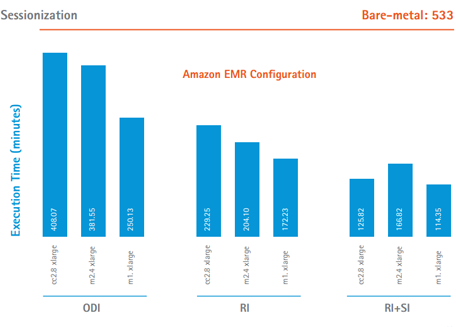

The results? While there are fine-point details that can be haggled over, the results came out competitive. In the case of the sessionization workload, the Amazon EMR cloud configuration outperformed the bare metal execution time across the board.

Where the recommendation engine was concerned, the bare metal deployment finished ahead of the cloud configuration in most cases according to the benchmark tests, with two instance types either meeting or beating it – and some others falling within parameters that some might find acceptable.

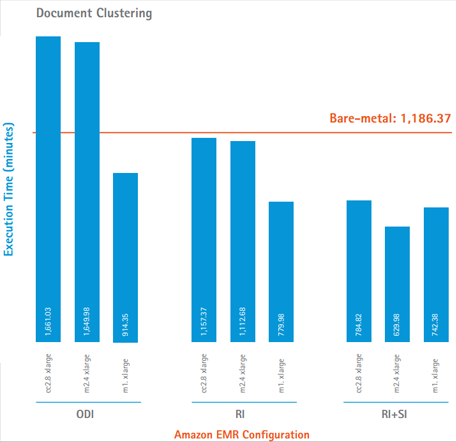

The document clustering workload benchmarks followed the same pattern as the recommendation engine, with most falling below the bare metal results, and two on the ODI performance end beating it. Again, there were results that came within ranges that some might find acceptable.

While there are a lot of factors to be considered when making a decision on where and how to deploy Hadoop (among them security and data privacy, up-front and back-end costs, scaling, and tuning), Accenture concludes that from a price-performance TCO perspective, Hadoop-as-a-service offers a price-performance ratio comparable to, if not better than, that of bare metal.

With Hadoop adoption still in its infancy, there is a lot that will still shake out in this arena. These benchmarks didn’t include comparisons towards the middle of the spectrum, where Hadoop appliances live – we’re eager to see how this market shakes out. With a new benchmark suite to play with, it will be interesting to see what more Accenture Labs can do with it.
