Privacy and Ethical Hurdles to LLM Adoption Grow

(jittawit21/Shutterstock)

Large language models (LLMs) have dominated the data and AI conversation through the first eight months of 2023, courtesy of the whirlwind that is ChatGPT. Despite the consumer success, few companies have concrete plans to put commercial LLMs into production, with concerns about privacy and ethics leading the way.

A new report released by Predibase this week highlights the surprisingly low adoption rate of commercial LLMs among businesses. For the report, titled “Beyond the Buzz: A Look at Large Language,” Predibase commissioned a survey of 150 executives, data scientists, machine learning engineers, developers, and product managers at large and small companies around the world.

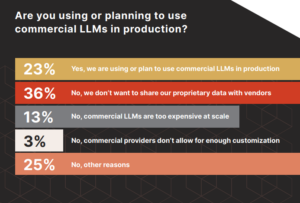

The survey found that, while 58% of businesses have started to work with LLMs, many remain in the experimentation phase, and only 23% of respondents had already deployed commercial LLMs or planned to.

Privacy and concerns about sharing data with vendors were cited as the top reasons why businesses were not deploying commercial LLMs, followed by expense and a lack of customization.

“This report highlights the need for the industry to focus on the real opportunities and challenges as opposed to blindly following the hype,” says Piero Molino, co-founder and CEO of Predibase, in a press release.

While few companies have plans to use commercial LLMs like Google’s Bard and OpenAI’s GPT-4, more companies are open to using open source LLMs, such as Llama, Alpaca, and Vicuna, the survey found.

Privacy, or the lack thereof, is a growing concern, particularly when it comes to LLMs, which are trained predominantly on human words. Large tech firms, such as Zoom, have come under fire for their training practices.

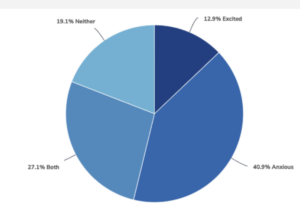

Now a new survey by PrivacyHawk has found that trust in big tech firms is at a nadir. The survey of 1,000 Americans, conducted by Propeller Research, found that nearly half of the U.S. population (45%) are “very or extremely concerned about their personal data being exploited, breached, or exposed,” while about 94% are “generally concerned.”

Only about 6% of the survey respondents are not concerned at all about their personal data risk, the company says in its report. However, nearly 90% said they would like to get a “privacy score,” similar to a credit score, that shows how exposed their data is.

More than three times as many people are anxious about AI as are excited about it, according to PrivacyHawk’s “Consumer Privacy, Personal Data, & AI Sentiment 2023” report.

“The people have spoken: They want privacy; they demand trusted institutions like banks protect their data; they universally want congress to pass a national privacy law; and they are concerned about how their personal data could be misused by artificial intelligence,” said Aaron Mendes, CEO and co-founder of PrivacyHawk, in a press release. “Our personal data is core to who we are, and it needs to be protected…”

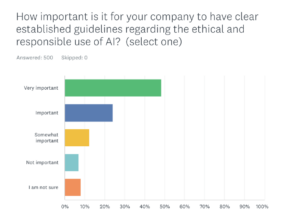

In addition to privacy, ethical concerns are also providing headwinds to LLM adoption, according to a separate survey released this week by Conversica.

The chatbot developer surveyed 500 business owners, C-suite executives, and senior leadership personnel for its 2023 AI Ethics & Corporate Responsibility Survey. The survey found that while more than 40% of companies have adopted AI-powered services, only 6% have “established clear guidelines for the ethical and responsible use of AI.”

Clearly, there’s a gap between the AI aspirations that companies have and the steps they have taken to achieve those aspirations. The good news is that, among companies that are further along in their AI implementations, 13% more state they recognize the need for clear guidelines for ethical and responsible AI compared to the survey population as a whole.

“Those already employing AI have seen firsthand the challenges arising from implementation, increasing their recognition of the urgency of policy creation,” the company says in its report. “However, this alignment with the principle does not necessarily equate to implementation, as many companies [have] yet to formalize their policies.”

One in five respondents that have already deployed AI “admitted to limited or no knowledge about their organization’s AI-related policies,” the company says in its report. “Even more disconcerting, 36% of respondents claimed to be only ‘somewhat familiar’ with these concerns. This knowledge gap could hinder informed decision-making and potentially expose businesses to unforeseen risks.”
