Regs Needed for High-Risk AI, ACM Says: ‘It’s the Wild West’


The probability of AI going off the rails and hurting people has increased considerably thanks to the explosion in the use of generative AI technologies such as ChatGPT. That, in turn, makes it necessary to regulate certain high-risk AI use cases in the United States, the authors of a new Association for Computing Machinery (ACM) paper said last week.

Using a technology like ChatGPT to write a poem or to write smooth-sounding language when you know the content well and can error-check the results yourself is one thing, said Jeanna Matthews, one of the authors of the ACM Technology Policy Council’s new paper, titled “Principles for the Development, Deployment, and Use of Generative AI Technologies.”

“It’s a completely different thing to expect the information you find there to be accurate in a situation where you are not capable of error-checking it for yourself,” Matthews said. “Those are two very different use cases. And what we’re saying is there should be limits and guidance on deployments and use. ‘It’s safe to use this for this purpose. It is not safe to use it for this purpose.’”

The new ACM paper lays out eight principles that it recommends developers follow when creating AI systems. The first four principles (transparency; auditability and contestability; limiting environmental impact; and heightened security and privacy) were carried over from a previous paper published in October 2022.

Generative AI adoption is accelerating the discussion over AI regulation (khunkornStudio/Shutterstock)

OpenAI released ChatGPT the following month, setting off one of the most interesting, and tumultuous, periods in AI’s history. The subsequent surge in generative AI usage and popularity prompted the addition of four more principles in the just-released paper: limits and guidance on deployment and use; ownership; personal data control; and correctability.

The ACM paper recommends that new laws be put in place to limit the use of generative AI in certain situations. “No high-risk AI system should be allowed to operate without clear and adequate safeguards, including a ‘human in the loop’ and clear consensus among relevant stakeholders that the system’s benefits will substantially outweigh its potential negative impacts,” the ACM paper says.

As the use of generative AI has widened over the past eight months, the need to limit the most harmful, high-risk uses has become clearer, says Matthews, who co-wrote the paper with Ravi Jain and Alejandro Saucedo. The goal of the paper was not only to inform engineers and business leaders about the possible ramifications of using generative AI in high-risk settings, but also to give policymakers in Washington, D.C. a framework to follow as they craft new regulation.

After incubating for a few years, generative AI burst onto the scene in a big way, says Jain. “It’s been in the works for years, but it became really visible and tangible with things like Midjourney and ChatGPT and those kinds of things,” Jain says. “Sometimes you need things to become… really instantiated for people to really appreciate the dangers and opportunities as well.”

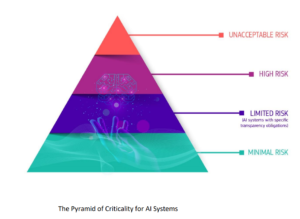

There are some parallels between the new ACM principles and the EU AI Act (on track to possibly become law in 2024), specifically around the concept of a hierarchy of risk levels, Matthews says. Some AI use cases are perfectly fine, while others can have detrimental consequences for people’s lives if bad decisions are made.

The US should adopt a risk-based approach like the Europeans have proposed in the EU AI Act, which regulates the most high-risk AI use cases, authors of a new ACM report say

“We see a real gap there,” Matthews tells Datanami. “There’s been an implication that you can use this in scenarios that we think are just not responsible. But there are other scenarios in which it can be great. So keeping those straight is important and those people who are releasing technologies like this, we think would be much more involved in establishing those boundaries.”

The tendency for large language models (LLMs) to hallucinate things that aren’t there is a real problem in certain situations, Matthews says.

“It really is not a tool that should be used primarily for information discovery because it produces inaccurate answers. It makes up stuff,” she says. “A lot of it is the way it is presented or advertised to people. If it was advertised to people as this creates plausible language, but very often inaccurate language, that would be a completely different phrasing than ‘Oh my gosh, this is a genius. Go ask all of your questions.’”

The ACM’s new principles regarding ownership of AI output and control over personal data are also byproducts of the LLM and Gen AI explosion. Looming questions of copyright and intellectual property need to be worked out, Jain says.

“Who owns an artifact that’s generated by AI?” says Jain, who chairs the ACM Technology Policy Council’s Working Group on Generative AI. “But on the flip side, who has the liability or the responsibility for it? Is it the provider of the base model? Is it the provider of the service? Is it the original creator of the content from which the AI-based content was derived? That’s not clear. That’s an area of IP, law and policy that needs to be figured out, and pretty quickly, in my opinion.”

Issues over personal data are also coming to the fore, pitting the Internet’s historically open underpinnings against the ability of people to profit from openly shared data via Gen AI products. Matthews offers an analogy about the expectations one has when inviting a housecleaner into one’s home.

“Yes, they’re going to need to have access to your personal spaces to clean your house,” she says. “And if you found out that they were taking pictures of everything in your closet, everything in your bathroom, everything in your bedroom, every piece of paper they encountered, and then doing stuff with it that you had no [idea] that they were going to do, you wouldn’t say, oh, that’s a great, innovative business model. You’d say, that’s horrible, get out of my house.”

While the use of AI is not broadly regulated outside of industries that are already heavily regulated, such as healthcare and financial services, broad bipartisan support is building for possible legislation, Matthews says. The ACM has been working with the office of Senator Chuck Schumer (D-NY) on possible regulation. Regulation could come sooner than expected, as Sen. Schumer has said that U.S. federal regulation is possibly months away, not years, Matthews says.

There are multiple issues around generative AI, but the need to rein in the most dangerous, high-risk use cases appears to be top of mind. The ACM recommends following the European model here by creating a hierarchy of risks and focusing on the most high-risk use cases. That will allow AI innovation to continue while stamping out the most dangerous aspects, Matthews says.

“The way to have some regulation without harming innovation inappropriately is to laser-focus regulation on the highest severity, highest probability of consequence systems,” Matthews says. “And those systems, I bet you most people would agree, deserve some mandatory risk management. And the fact that we don’t have anything currently requiring that seems like a problem, I think to most people.”

While the ACM has not called for a pause in AI research, as other groups have done, it’s clear that the no-holds-barred approach to American AI innovation can’t continue as is, Matthews says. “I think it is a case of the Wild West,” she adds.
