All Eyes on Snowflake and Databricks in 2022

(Sergey Nivens/Shutterstock)

It’s hard to overstate the impact that Snowflake and Databricks have had on the data industry, for customers, partners, and competitors. As data practitioners gear up for 2022, they’re keeping a watchful eye on these two independent powerhouses to determine what comes next.

The explosive growth of Snowflake and Databricks over the past couple of years is remarkable for several reasons. For starters, that growth comes as the three major public cloud providers, Amazon, Google, and Microsoft, have consolidated their market reach, becoming three of the biggest companies in the world with a mind-boggling $5.5 trillion in collective market capitalization. The fact that the much smaller operations of Snowflake and Databricks have not only managed to maintain their independence, but to grow in such a climate relative to the cloud partners they depend on, is a testament to the companies’ execution.

Databricks’ Growth

Databricks, which was founded in 2013, has parlayed its early position as the commercial entity behind Apache Spark into a trusted cloud data platform that goes well beyond Spark. Given that the fortunes of a similar open source framework, Apache Hadoop, crashed and burned in 2019, Databricks’ pivot away from a single technology looks prescient.

Today, Databricks is arguably known best for its lakehouse platform, which blends the unstructured storage and processing capabilities of a data lake (like Hadoop or S3) with the structured storage and processing chops of traditional data warehouses. Largely through its Delta Lake offering, Databricks is credited with popularizing the lakehouse concept, which is slowly being adopted by the cloud giants, including AWS and Google. (Databricks has a closer partnership with Microsoft, which has leaned on Databricks for Spark expertise as well as Delta Lake. Databricks’ entire offering has only been available on Google Cloud for about a year.)

Databricks has arguably focused more on data science and data engineering than on data analytics in the past, but that is starting to change. In late 2021, it went GA with its Databricks SQL offering, which brings the ANSI SQL standard to bear on data that’s stored in its lakehouse.

In 2022, you can expect to hear a lot more about lakehouses from Databricks, as the company seeks to convert its mindshare into market share. The San Francisco-based company unveiled its first industry-specific lakehouse for retail and consumer goods earlier this month, so it would seem likely that the company will follow up with additional offerings for other industry-specific verticals.

Don’t be surprised if you also hear more about data sharing, which the company debuted last May. The ability for partners to share data is a growing concern, particularly among security- and privacy-conscious companies. Databricks, which disclosed during its last round of funding that it has around 5,000 customers, can also be expected to emphasize its solutions for real-time streaming data, which looks like it may (finally) have its moment in the sun after being a solution in search of a problem for so many years.

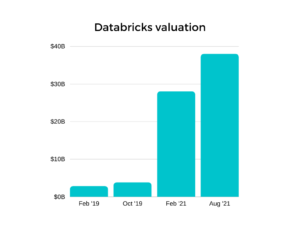

But the big question, of course, will be whether the company has an initial public offering (IPO) of stock. The company has raised $2.6 billion in funding in just the past 11 months, so it doesn’t necessarily need the money. But the company’s leadership has talked about the importance of being public in the past, and it seems likely that the company will eventually make this move, unless it first gets gobbled up by some industry giant looking to get even bigger, which is always a possibility.

Snowflake Rising

Snowflake has also grabbed the data spotlight in recent years, banking on its success in bringing the world of on-prem data warehousing into the public realm. The company’s much-ballyhooed 2020 IPO, which was dubbed the biggest software IPO of all time, still lingers in the minds of industry watchers as a reminder of how quickly a company can go from a rather obscure tech company to a worldwide powerhouse.

Snowflake initially garnered a lot of attention for its speedy column-oriented analytical database, which the company first brought to market in 2016. Back then, the big dog in the market was Hadoop, which attracted all kinds of attention. But then-Snowflake CEO Bob Muglia wasn’t shy about bashing Hadoop and talking about how easy it was to run a large data warehouse in Snowflake.

That hard-won ease of use for customers has been the secret to Snowflake’s success. To some, the company appears to be an overnight sensation, but its success is really the culmination of a lot of hard, technical work by Snowflake’s founding team and its engineers, who have been at this game for many years and understood what the next generation of cloud-based warehouses would require. They delivered it, and are reaping the rewards today.

Today, Snowflake runs in all major clouds. But beyond the fast OLAP processing that grabs the headlines, it’s really the surrounding elements, such as the separation of compute and storage, on-demand scalability, and broad support for different data types and programming languages, that are differentiating Snowflake from the increasingly crowded field of cloud data warehouse vendors.

More recently, the company has gone beyond SQL to embrace data science, including machine learning workloads. The introduction of its Snowpark offering gives Snowflake the capability to embrace ETL and data pipeline jobs that would normally be done in a framework like Spark or Dask. In November, the company announced support for Python with a new DataFrame API in Snowpark, giving the company the means to embrace the number one data science language and the considerable machine learning workloads that it drives. And with its data marketplace, Snowflake envisions itself as a one-stop shop for all your data analytics and data science needs. Some might even call it a “data cloud.”

So, what will 2022 bring? One area of possibility is that Snowflake will flesh out its lakehouse architecture. As stated earlier, the lakehouse idea was popularized by Databricks, but is being adopted across the industry.

The need for faster ingest of data into analytic data stores is also lurking in the backs of data architects’ minds as we enter 2022. There are limits to how quickly you can write data into traditional data warehouses without impacting reads, which is one of the main reasons why real-time data processing has evolved along a separate technological track, with its own separate frameworks and functions. Technologies like Spark Streaming, Apache Flink, and Apache Beam, not to mention the applications built atop Apache Kafka by Confluent (another looming data superstar), have sought to give companies the real-time answers they demand.

One thing is for certain: Wall Street analysts are bullish on Snowflake’s 10th year in business, with a median forecast of a 39% increase in its stock price (NYSE: SNOW) and a high of 100%. The high forecast would add another $86 billion in market capitalization to the company from Bozeman, Montana, which CEO Frank Slootman selected as the new headquarters early in the pandemic.
