Flash and the Future of Storage in a Software-Defined World

The ongoing explosion of data is forcing organizations to make tough decisions about how they store information. While petabyte-scale analytic systems like Hadoop will likely continue running on traditional hard disks for the foreseeable future, the migration to solid-state technology and software-defined storage approaches appears inevitable for most other classes of business applications.

The market for solid-state arrays (SSAs) hit a turning point in 2013, according to Gartner. Until then, SSAs occupied a small niche: applications where high-speed, low-latency access to data outweighed the higher cost and lower overall maturity of SSAs. Arrays built on good old-fashioned spinning disks, which hadn't changed much in a generation, still dominated the bulk of the $30-billion storage market.

But starting in 2013, SSA makers building with NAND flash drives began adding advanced features, such as deduplication, compression, thin provisioning, snapshots, and replication, that together drove down the effective cost of SSAs and made them competitive with traditional storage arrays. The market responded by buying $667 million worth of SSAs in 2013, a mind-boggling 182 percent increase over the prior year.
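Deduplication is the main reason a flash array's *effective* cost can fall below its raw cost per gigabyte: identical blocks are stored once and referenced many times. The sketch below is a minimal illustration, assuming fixed 4 KiB blocks fingerprinted with SHA-256; the block size, function names, and sample data are hypothetical, and commercial arrays use their own (often variable-size, inline) schemes plus compression, which is not shown here.

```python
import hashlib
import os

BLOCK_SIZE = 4096  # assumed fixed block size; real arrays choose their own


def dedupe(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and keep each unique block only once."""
    store = {}   # fingerprint -> block contents (what actually consumes flash)
    recipe = []  # ordered fingerprints needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # store only the first copy
        recipe.append(fingerprint)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the logical data from the unique-block store."""
    return b"".join(store[fp] for fp in recipe)


def reduction_ratio(data: bytes, store) -> float:
    """Logical bytes written divided by physical bytes actually kept."""
    return len(data) / sum(len(block) for block in store.values())


if __name__ == "__main__":
    # Ten hypothetical VM images sharing one 10-block base, each with 1 unique block.
    base = os.urandom(10 * BLOCK_SIZE)
    images = b"".join(base + os.urandom(BLOCK_SIZE) for _ in range(10))
    store, recipe = dedupe(images)
    assert rebuild(store, recipe) == images
    print(f"data reduction ratio: {reduction_ratio(images, store):.1f}:1")
```

With 110 logical blocks but only 20 unique ones, the sample prints a ratio of 5.5:1; real-world ratios depend entirely on how much duplicate data a workload contains.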

With SSAs effectively at cost parity with traditional arrays but holding an order-of-magnitude performance edge, SSA makers are eager to gobble up market share from vendors that build with spinning disks. SSA pure-plays like Pure Storage, Violin Memory, SolidFire, Nimbus Data, Skyera (since bought by Western Digital), and Kaminario are now going up against incumbents like IBM, EMC, Hewlett-Packard, and NetApp.

According to Gartner, SSAs are now competitive with general-purpose storage arrays in every category except for scale. (That’s why you won’t see Hadoop clusters heavily invested in flash, at least for a while.) The most popular workloads for SSAs are online transaction processing, analytics, server virtualization, and virtual desktop infrastructures.

No Room for Hybrids?

Eager to protect their share of a rapidly evolving market, many incumbent storage vendors have taken a hybrid approach that combines solid-state drives with traditional spinning disks in a single chassis. But Gartner warns that this "stopgap" approach may not prove viable over the long term.

Matt Kixmoeller, vice president of products at SSA maker Pure Storage, agrees that the hybrid approach won't fly in the long run. "Flash really changes everything," Kixmoeller says. "We're finding that, when customers engage in head-to-head POCs [proofs of concept], they very quickly understand the difference between an all-flash array that was built from scratch for flash versus a legacy architecture that had some flash shoved in it."

Gartner rated the flash players last August in its debut Magic Quadrant for Solid-State Arrays (SSAs).

The problem with the hybrid approach, Kixmoeller says, lies in the mismatch between how applications run in today's data centers and the algorithms hybrid disk arrays depend on. Hybrid arrays rely heavily on predictive algorithms to decide whether a piece of data should be cached in the "hot" zone (flash) or left in the "cold" zone (traditional spinning media). But according to Kixmoeller, those algorithms don't work in today's virtualized data centers, where the next piece of I/O can't be accurately predicted.
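Kixmoeller's argument can be illustrated with a toy simulation. Hybrid vendors' actual predictive algorithms are proprietary and not described here, so this sketch swaps in a plain least-recently-used (LRU) flash tier as a stand-in, and both workloads are synthetic assumptions: a skewed one where most I/O hits a small hot set, and a uniform random one as a crude proxy for many virtual machines' I/O blended together. All names and parameters are hypothetical.

```python
import random
from collections import OrderedDict


class LRUTier:
    """Toy "hot" flash tier: keeps recently used blocks, evicts the least recent."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.blocks = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, block: int) -> bool:
        if block in self.blocks:
            self.blocks.move_to_end(block)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1  # served from the "cold" disk tier, then promoted
        self.blocks[block] = True
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict least recently used
        return False

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def skewed_workload(n_blocks, n_ios, hot_fraction=0.1, hot_share=0.9, seed=0):
    """90% of I/O lands on 10% of blocks: the pattern tiering is built for."""
    rng = random.Random(seed)
    hot = int(n_blocks * hot_fraction)
    for _ in range(n_ios):
        if rng.random() < hot_share:
            yield rng.randrange(hot)
        else:
            yield rng.randrange(hot, n_blocks)


def random_workload(n_blocks, n_ios, seed=0):
    """Uniform random I/O: no block is more predictable than any other."""
    rng = random.Random(seed)
    for _ in range(n_ios):
        yield rng.randrange(n_blocks)


def simulate(workload, capacity: int) -> float:
    tier = LRUTier(capacity)
    for block in workload:
        tier.access(block)
    return tier.hit_rate


if __name__ == "__main__":
    N, IOS, FLASH = 100_000, 200_000, 10_000  # flash tier holds 10% of blocks
    print(f"skewed: {simulate(skewed_workload(N, IOS), FLASH):.0%} flash hits")
    print(f"random: {simulate(random_workload(N, IOS), FLASH):.0%} flash hits")
```

Under the skewed workload most I/O is served from flash, while under uniform random I/O the hit rate falls to roughly the flash tier's share of capacity (about 10 percent here), so most requests still wait on disk. Smarter prediction can't help much when there is no pattern to predict.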

"We think it's technologically the wrong way to go," he says. "We've been able to drive the cost of flash down to that of hybrid architectures or under [$2 to $3 per GB], so if you can get an all-flash architecture for the same cost as hybrid, why bother?"

Disk As The New Tape

The writing appears to be on the wall for spinning disk, at least as far as front-line business applications are concerned: it will eventually be replaced by flash-based drives. While disk will retain a foothold in offline storage (backup, archive, and petabyte-scale big data clusters), flash appears to be emerging as the primary medium for storing the world's active data.

"Disk is dead," declared SSA maker Violin Memory. Violin is so confident in disk's downfall that it's giving away tickets to the Grateful Dead's 50th anniversary concert as part of its marketing campaign. "Disk drives served the IT community well. But IT requirements have changed, disk drives can't keep up, and flash storage technology simply provides a superior solution," said Amy Love, Violin's chief marketing officer.

The exponential growth of the SSA market is causing people to rethink how they view storage, according to Mark Peters, a senior analyst with ESG Storage Systems. “It is clear that most IT organizations are no longer wondering if, but rather when to make the leap [to flash],” he says. “They move not only for the obvious benefits of flash, but also to leave behind many of the inherent challenges long associated with–but equally long accepted of–all-disk environments.”

Pure’s Kixmoeller sees tape potentially outliving spinning disk. “There’s an argument that can be made that long term the answer is flash plus tape, and that maybe disk is the thing that gets squeezed in the middle,” he says. “I think for the cold storage, deep-archive space, there will be a disk-versus-tape showdown over time.”

Enter Software-Defined Storage

The move to flash-based arrays for primary storage is taking place at the same time the industry is embracing software-defined storage (SDS), which is essentially about adopting virtualization at the storage tier to optimize storage and data access. The adoption of features like deduplication, snapshotting, and replication in flash arrays is an example of SDS at work.
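To make "virtualization at the storage tier" concrete, here is a toy model, not any vendor's design: a pool aggregates extents from heterogeneous devices, a thin-provisioned volume consumes pool space only when written, and a snapshot is just a copy of the volume's mapping table. Every class name and the tiny extent size are hypothetical choices for illustration; replication and deduplication are left out.

```python
# Toy model of storage virtualization; all class names are hypothetical.
EXTENT_SIZE = 4  # bytes per extent, kept tiny so the example is easy to follow


class Device:
    """Any backing hardware (SSD, HDD, cloud volume); the pool treats them alike."""

    def __init__(self, name: str, n_extents: int):
        self.name = name
        self.extents = [bytes(EXTENT_SIZE)] * n_extents
        self.free = list(range(n_extents))


class Pool:
    """Virtualization layer: aggregates many devices into one pool of extents."""

    def __init__(self, devices):
        self.devices = list(devices)

    def allocate(self):
        for dev in self.devices:
            if dev.free:
                return dev, dev.free.pop(0)
        raise RuntimeError("pool exhausted")

    def free_extents(self) -> int:
        return sum(len(dev.free) for dev in self.devices)


class ThinVolume:
    """Advertises a large logical size but consumes pool space only on write."""

    def __init__(self, pool: Pool, logical_extents: int, mapping=None):
        self.pool = pool
        self.logical_extents = logical_extents
        # logical extent -> (device, physical extent index)
        self.map = dict(mapping or {})

    def write(self, lext: int, data: bytes) -> None:
        if not 0 <= lext < self.logical_extents:
            raise IndexError("logical extent out of range")
        if len(data) != EXTENT_SIZE:
            raise ValueError("this sketch only writes whole extents")
        # Redirect-on-write: new data always lands in a fresh extent, so a
        # snapshot still pointing at the old extent keeps seeing the old data.
        # Old extents are never reclaimed in this sketch.
        dev, pext = self.pool.allocate()
        dev.extents[pext] = data
        self.map[lext] = (dev, pext)

    def read(self, lext: int) -> bytes:
        if lext not in self.map:
            return bytes(EXTENT_SIZE)  # never-written space reads back as zeros
        dev, pext = self.map[lext]
        return dev.extents[pext]

    def snapshot(self) -> "ThinVolume":
        """A snapshot copies only the mapping table, not the data."""
        return ThinVolume(self.pool, self.logical_extents, self.map)


if __name__ == "__main__":
    pool = Pool([Device("ssd0", 8), Device("hdd0", 8)])  # 16 physical extents
    vol = ThinVolume(pool, logical_extents=1000)         # "1000 extents" advertised
    vol.write(0, b"v1__")
    snap = vol.snapshot()
    vol.write(0, b"v2__")
    print(vol.read(0), snap.read(0), pool.free_extents())  # b'v2__' b'v1__' 14
```

Because volumes see only the pool, the devices underneath could be swapped or mixed without the volume noticing, which is the hardware independence SDS proponents emphasize.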

According to SDS software maker DataCore, which recently conducted a survey on SDS uptake, sales of SDS solutions will grow at about a 35 percent clip through 2019, when they will account for $6 billion in revenue. Those aren't flash-like numbers, but they exceed the growth rate of the overall storage market.
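A constant 35 percent annual growth rate compounds, so the forecast implies a specific starting market size. The article gives no base year; the sketch below assumes 2015 purely for illustration, which puts the implied starting market at roughly $1.8 billion. The function names are hypothetical.

```python
def compound(value: float, rate: float, years: int) -> float:
    """Grow `value` at a constant annual `rate` (0.35 means 35%) for `years` years."""
    return value * (1 + rate) ** years


def implied_start(final_value: float, rate: float, years: int) -> float:
    """Invert compound(): the starting value needed to reach `final_value`."""
    return final_value / (1 + rate) ** years


if __name__ == "__main__":
    # Assumption: growth runs from 2015 to 2019 (4 years); the base year is not stated.
    start = implied_start(6.0, 0.35, 4)  # $ billions
    for year in range(2015, 2020):
        print(year, f"${compound(start, 0.35, year - 2015):.2f}B")
```

Changing the assumed base year changes the implied starting figure, so treat the output as a consistency check on the forecast rather than a market estimate.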

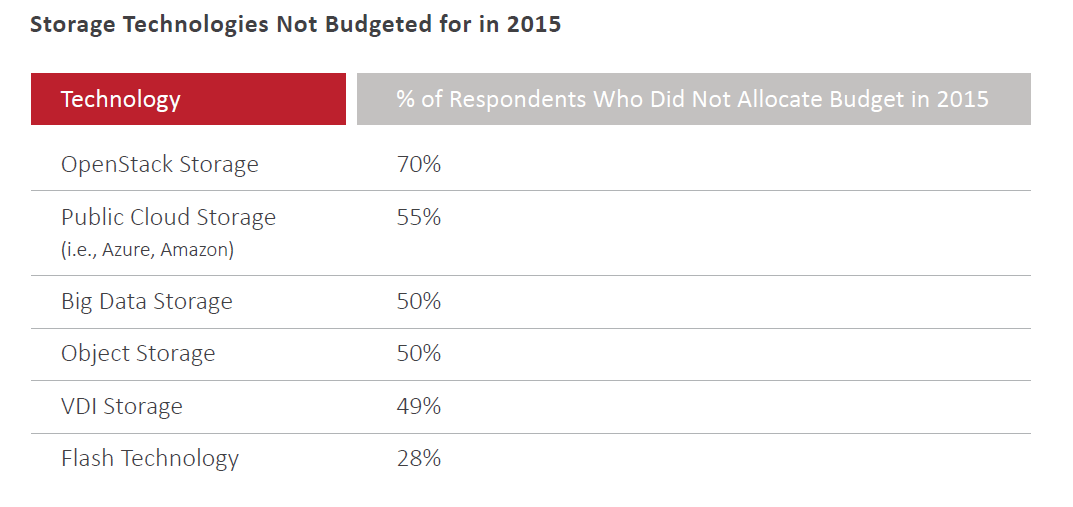

According to DataCore’s survey, most organizations are not currently investing in OpenStack, Hadoop, or flash.

DataCore President and CEO George Teixeira says the growth in SDS comes in part from the fact that customers today don't want storage tied to specific hardware. They want to avoid being "locked to particular hardware or being forced to go 'all new…' to modernize their storage infrastructure," he says.

Interestingly, DataCore found flash adoption to be smaller than expected in its survey, which covered 477 organizations. Big data and object-based storage systems were other heavily hyped technologies whose adoption disappointed when the survey results were tallied.

DataCore will be getting some competition from Hedvig, an SDS startup that today announced the close of an $18 million Series B funding round. Hedvig was founded by Avinash Lakshman, who led the development of the Dynamo database at Amazon and the Cassandra database at Facebook. He’ll be using those NoSQL skills to create the Hedvig Distributed Storage Platform, which “combines the flexibility of cloud and commodity infrastructure to reimagine the economics of enterprise data storage and management,” the company says.

Seeding Flash with SDS

While the hardware-focused approach of flash array vendors may seem anathema to the "virtualize everything" approach of the SDS crowd, the two trends are complementary. In fact, maintaining upgradeability and avoiding technology lock-in is a theme in Pure Storage's announcements today.

According to Pure Storage’s Kixmoeller, the company’s new FlashArray//m was designed to give customers the performance and storage density advantages of an appliance-based architecture, but to do so without giving up the modularity and upgradeability of a traditional chassis-based architecture. While Pure Storage has traditionally focused on innovating at the software layer, it saw how other SSA vendors were doing interesting stuff at the hardware layer, and sought to emulate them.

"Those vendors say, 'Hey flash chips are different. If I can redesign the controller and chassis, I can get to a really interesting level of density and simplicity,'" Kixmoeller tells Datanami. "But for the most part, those vendors have software as an afterthought and weren't able to deliver interesting software features. So we saw an opportunity to combine those two areas."

The FlashArray//m is able to pack 120 TB of storage into a 3U chassis that consumes less than 1 kilowatt of power. That’s 2.6 times as dense as its first-gen FlashArray series, and up to 40 times as dense as traditional tier-one disk arrays, the company says. On the power efficiency front, the FlashArray//m is 2.3 times as efficient as the first-gen FlashArray series, and 10 to 20 times more efficient than spinning-disk arrays.
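The density claims can be turned into capacity per rack unit, and the "N times as dense" multipliers then imply what the comparison systems achieve. This is a back-of-the-envelope sketch using only the figures quoted above; the baselines it prints are derived from the vendor's multipliers, not independently measured, and the function name is hypothetical.

```python
def implied_baselines(capacity_tb, rack_units, multipliers):
    """Return TB per rack unit, plus the baseline density each 'N times as dense' claim implies."""
    density = capacity_tb / rack_units
    return density, {name: density / factor for name, factor in multipliers.items()}


if __name__ == "__main__":
    # Figures as quoted for FlashArray//m: 120 TB in 3U, under 1 kW.
    density, baselines = implied_baselines(
        120, 3, {"first-gen FlashArray": 2.6, "tier-one disk array (best case)": 40}
    )
    print(f"FlashArray//m: {density:.0f} TB per rack unit")
    for name, tb_per_u in baselines.items():
        print(f"implied {name}: {tb_per_u:.1f} TB per rack unit")
    # "Less than 1 kilowatt" makes 120 TB per kW a floor, not an exact figure.
    print(f"at least {120 / 1.0:.0f} TB per kilowatt")
```

At 40 TB per rack unit, the "up to 40 times" claim implies a traditional tier-one array storing on the order of 1 TB per rack unit.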

The energy envelope for the new array is impressive. A traditional storage array consumes about as much energy as it takes to power 10 houses, Kixmoeller says. “The FlashArray//m consumes about what your toaster oven does,” he says. “If you can move all those arrays from 10 houses to a toaster oven, and multiply that to a global scale, that’s two nuclear plants we can avoid.”

Flash's storage efficiency and SDS's maintainability also converge in Pure Storage's other announcement today: the Pure1 service offering. Under the new Pure1 service, the company has pledged to keep its customers' arrays functioning and refreshed with the latest NAND and storage controller technologies for seven to 10 years.

"Customers are drowning in a sea of complexity," Kixmoeller says. "Fundamentally we'd like to take away the pain from customers and do more of the management ourselves. We said, How can we use technology to deliver better support? Can we modernize this whole thing and deliver a cloud or SaaS-based model to deliver and structure management, and can we be more proactive? That's the thinking behind Pure1."

Flashy Competition

Not to be outdone, Pure Storage's competitor Hewlett-Packard today announced a new iteration of its flash array. The 3PAR StoreServ Storage 2000 family can cram 280 TB into a 2U space, an 85 percent smaller footprint than traditional high-end arrays. What's more, the new device comes in at a meager $1.50 per gigabyte, on par with the cost of the 10,000 RPM SAS hard drives that are ubiquitous today. Factoring in the data deduplication technologies resident in the 3PAR line reduces the effective per-GB cost to $0.25, HP says.
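Effective cost per gigabyte is the raw cost divided by the data reduction ratio, so HP's two figures imply a specific ratio. The sketch below works that out: $1.50 / $0.25 implies about 6:1, which is an assumption about the customer's data rather than a guarantee. The function names are hypothetical.

```python
def effective_cost_per_gb(raw_cost: float, reduction_ratio: float) -> float:
    """Cost per GB of logical data stored once dedup/compression shrink it."""
    return raw_cost / reduction_ratio


def implied_reduction_ratio(raw_cost: float, effective_cost: float) -> float:
    """Data reduction ratio needed to turn a raw $/GB into an effective $/GB."""
    return raw_cost / effective_cost


if __name__ == "__main__":
    ratio = implied_reduction_ratio(1.50, 0.25)
    print(f"implied data reduction: {ratio:.0f}:1")  # prints "implied data reduction: 6:1"
    # Hypothetical lower ratios, e.g. for data that deduplicates poorly.
    for r in (2, 4, 6):
        print(f"{r}:1 -> ${effective_cost_per_gb(1.50, r):.2f}/GB")
```

For workloads that deduplicate poorly, the effective cost stays much closer to the raw $1.50 per gigabyte.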

HP also unveiled the 3PAR StoreServ 20800, a converged flash array that scales up to 15 petabytes of usable capacity. Both of the new models support block and file storage approaches, HP says, while the increased density “assures that customers can prevent flash-array sprawl caused by introducing separate, capacity limited flash architectures into their datacenter.”

The new SSAs show that flash is ready for deployment throughout the data center, says Manish Goel, senior vice president and general manager for HP Storage. “Early flash adopters are seeing added benefits such as extreme savings and productivity enhancements that are leading to an all-flash strategy for more applications,” Goel says.

The amount of data we’re generating doubles every two years, which is creating crushing pressures on storage professionals to keep it all manageable and affordable. The combination of SSAs at the hardware level and SDS at the software level will be instrumental in helping data professionals to avoid being caught in the undertow of big data.
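Taken at face value, "doubles every two years" is a compound growth rate. Writing $D_0$ for today's data volume and $t$ for elapsed years, a minimal formulation is:

```latex
D(t) = D_0 \cdot 2^{t/2}, \qquad
r = 2^{1/2} - 1 \approx 0.414, \qquad
\frac{D(10)}{D_0} = 2^{5} = 32
```

That works out to roughly 41 percent growth per year, or 32 times as much data after a decade, assuming the doubling rate holds.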
