The Power and Promise of Big Data Paring

I think a lot about big data and the challenges it poses. I started thinking about it long before I ever heard the term, when I heard and read one of the conclusions of the post-mortem for the events on and around September 11th, 2001: that we had the information to realize that this attack was coming, but we simply didn’t analyze the data fast enough. That conclusion has always stuck with me and kept the problem on my mind.

One of the more well-known challenges for big data is that you have to pay a lot more attention to where the data is now. This isn’t a new challenge–I remember a customer laughing as he told me how 15ish years ago his team would express mail hard drives from one site to another because the network transfer was slower than the postal service–but the size of data is growing so quickly that this is changing from a fringe concern to a core concern.

You always hear about the need to localize computational resources and make intelligent data staging decisions, but one dimension of this problem that needs more discussion is data paring. The need for this is fairly obvious: data is growing exponentially, and growing your compute resources exponentially to keep up will require budgets that aren’t realistic.

One of the keys to winning at big data will be ignoring the noise. As the amount of data increases exponentially, the amount of interesting data doesn’t; I would bet that for most purposes the interesting data added is a tiny percentage of the new data that is added to the overall pool of data.

To explain these claims, let’s suppose I’m an online media streaming provider attempting to predict what you’d be interested in seeing based on what you’re looking at now. This is an incredibly difficult machine learning problem. Every time a user watches some content it has to be cross-referenced with everything else that that user has watched, potentially creating hundreds, thousands, or even more new combinations that can be used to predict what else you might like to see.

These are then compared with all of the other empirical data from all other customers to determine the likelihood that I might also want to watch the sequel, other work by the director, other work from the stars in the movie, things from the same genre, etc. As I perform these calculations, how much data should be ignored? How many people aren’t using the multiple user profiles and therefore don’t represent what one person’s interests might be? How many data points aren’t related to other data points and therefore shouldn’t be evaluated as a valid permutation the same as another point?

Answering these questions through paring and sifting algorithms is a dimension of big data that will only grow in significance over time. Data capturing will always be fundamentally faster and easier than data analysis, and data will continue to multiply faster than bunny rabbits. Not wasting time on irrelevant data will be one of the keys to staying ahead of the competition.

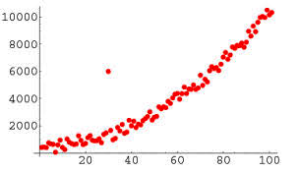

The scientific community has been determining how to remove irrelevant data for a long time, so long that the term outlier is very well-known. As big data moves more and more to the forefront, organizations that can adapt techniques to ignore outliers and draw intelligent conclusions based on higher-correlated data are going to lead the way.

Obviously, generalizing the technique of paring data is difficult, but perhaps thinking more about outliers can help us on our quest. One mathematical definition of an outlier is any data point that lies more than 1.5 interquartile ranges below the first quartile or above the third quartile, where the interquartile range is the distance between the first and third quartiles. In layman’s terms, an outlier is a data point that is too different from the other data points to be meaningful for predicting trends.

Now, if there is a way to represent the data as a single number, then one could simply translate the different data into those numbers and run all the numbers through a simple program that would calculate the outliers. In many cases, representing data points as a single number may not be possible, but some variation of this method may be helpful. However, there are certain more complicated data sets where condensing the data to a single point results in unacceptably high amounts of data loss, and we need to do something else.
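As a minimal sketch of that "simple program," assuming each data point has already been reduced to a single number, the interquartile-range rule can be written in a few lines of Python. The function name `find_outliers` and the sample values are made up for illustration, and quartile conventions vary between tools, so the exact fences may differ slightly from what another package reports.

```python
import statistics

def find_outliers(values, k=1.5):
    """Split values into (kept, outliers) using the k * IQR fences."""
    # With n=4, statistics.quantiles returns the three quartile cut points.
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if low <= v <= high]
    outliers = [v for v in values if v < low or v > high]
    return kept, outliers

# Hypothetical minutes watched per session for one account.
minutes = [42, 45, 47, 50, 51, 53, 55, 58, 60, 400]
kept, outliers = find_outliers(minutes)
print(outliers)  # [400]
```

Everything in `kept` would move on to the expensive analysis; the 400-minute session would be set aside.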

Most examples are going to be very specific to the kind of data that we are analyzing. If we re-visit the idea of predicting what kind of media content one might be interested in based on previous viewing history, perhaps we can make outliers based on different categories.

For example, we might find that the specific sci-fi and fantasy movies one has viewed in the past are not important for predicting what kind of romantic comedies the same person or account might want to see. Perhaps the sci-fi and fantasy movies are for one circumstance and the romantic comedies are for when one is in a different mood or in different company.

Another kind of outlier-by-category, as we might call it, could be by the movie’s rating. Different user profiles are possible but not always used. It could be that G-rated content is what one views typically with her or his children, and PG-13 or R-rated content is what one views when children are not present, meaning the episodes of My Little Pony can be ignored when trying to predict the next drama or action movie. We can see that for more complicated datasets we can look into categories for potentially identifying meaningful outliers.
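To make the rating idea concrete, here is a rough sketch of paring a viewing history down to the titles watched in the same context as the thing we are trying to predict. The history, the placeholder titles, and the mapping from ratings to "family" and "adult" contexts are all assumptions for illustration; a real service would have to learn or tune such groupings.

```python
# Hypothetical viewing history: (title, rating) pairs for one account.
history = [
    ("My Little Pony S1E1", "G"),
    ("My Little Pony S1E2", "G"),
    ("Action Movie A", "R"),
    ("Drama B", "PG-13"),
    ("Action Movie C", "PG-13"),
]

# Assumed grouping of ratings into viewing contexts.
CONTEXT_BY_RATING = {
    "G": "family",
    "PG": "family",
    "PG-13": "adult",
    "R": "adult",
}

def pare_by_context(history, target_rating):
    """Keep only titles watched in the same context as the target rating."""
    target_context = CONTEXT_BY_RATING[target_rating]
    return [
        title
        for title, rating in history
        if CONTEXT_BY_RATING.get(rating) == target_context
    ]

# Predicting the next R-rated movie: the G-rated episodes drop out.
relevant = pare_by_context(history, "R")
print(relevant)  # ['Action Movie A', 'Drama B', 'Action Movie C']
```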

A final technique for paring down data might be identifying thresholds for when sufficient data has been analyzed to draw a conclusion, despite having more available data. In the streaming example, this might be that a certain person has watched six movies which are directed by Tim Burton and starring Johnny Depp. In such a scenario, one can probably recommend any other movies that are directed by Tim Burton and starring Johnny Depp without needing to look at the other movies that he or she has viewed previously.
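A threshold like this amounts to stopping early. The sketch below, with a made-up record layout and a threshold of six taken from the example above, scans a viewing history and stops as soon as one director and star pairing has been seen often enough, leaving the rest of the history unread.

```python
from collections import Counter

def first_confident_pairing(history, threshold=6):
    """Scan the history and stop once one (director, star) pair hits threshold.

    Returns (pair, records_examined); pair is None if nothing qualifies.
    """
    counts = Counter()
    examined = 0
    for record in history:
        examined += 1
        pair = (record["director"], record["star"])
        counts[pair] += 1
        if counts[pair] >= threshold:
            return pair, examined  # enough evidence; skip the rest
    return None, examined

# Hypothetical history: six matching movies followed by 1,000 other records.
history = (
    [{"director": "Tim Burton", "star": "Johnny Depp"}] * 6
    + [{"director": "Other Director", "star": "Other Star"}] * 1000
)
pair, examined = first_confident_pairing(history)
print(pair, examined)  # ('Tim Burton', 'Johnny Depp') 6
```

In this toy case only 6 of the 1,006 records are ever looked at. The right threshold is a judgment call that depends on the data.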

The same technique can be used to remove possibilities. Since not all the world is online streaming, allow me to use a machine vision example: suppose I’m trying to recognize pictures of skyscrapers. Instead of looking at all 3264 by 2448 pixels to determine if it’s a picture of a skyscraper, I can examine a band somewhere towards the middle of the picture to see if anything in that band matches what a band of a skyscraper might look like. If it doesn’t, the picture can be skipped immediately, with much of the data ignored.
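Here is a toy sketch of that band check. The "looks like a skyscraper" test is a deliberately crude stand-in that I am assuming for illustration (at least two sharp brightness jumps in every row of the band); a real system would use a proper detector. The images are tiny synthetic grayscale grids, not real photos.

```python
def middle_band(image, band_height):
    """Return band_height rows centered vertically in the image."""
    start = max(0, (len(image) - band_height) // 2)
    return image[start:start + band_height]

def band_has_vertical_edges(band, min_jump=80, min_edges=2):
    """Toy stand-in for 'matches what a band of a skyscraper looks like'.

    Assumption for illustration only: every row of the band must show at
    least min_edges sharp brightness jumps between neighboring columns.
    """
    for row in band:
        edges = sum(
            1
            for left, right in zip(row, row[1:])
            if abs(left - right) >= min_jump
        )
        if edges < min_edges:
            return False
    return True

def worth_full_analysis(image, band_height=2):
    """Cheap pre-check: only the middle band is read, not the whole image."""
    return band_has_vertical_edges(middle_band(image, band_height))

# Synthetic 6x8 grayscale images (0-255).
sky_only = [[200] * 8 for _ in range(6)]
tower = [[200, 200, 200, 40, 40, 200, 200, 200] for _ in range(6)]

print(worth_full_analysis(sky_only))  # False: skip this picture
print(worth_full_analysis(tower))     # True: run the full analysis
```

With a band two rows tall, only a third of each toy image is read before the decision is made; on a 2448-row photo the fraction would be far smaller.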

Obviously, it takes creativity and some underlying understanding of the nature of the data in order to effectively apply these techniques. One must make a special effort not to ignore important data while at the same time realizing that taking too much time to analyze the data may lead us to the correct conclusion only after the predicted event has already happened or the opportunity to prevent it is already gone. Interesting data is a wonderful indicator of the likely future, and separating the interesting from the uninteresting is probably the greatest barrier keeping us from getting there.
