Amazon Goes SSD with Redshift ‘Dense Compute Nodes’

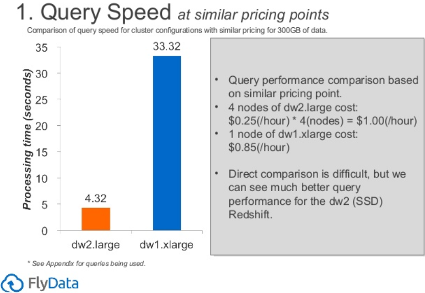

Do you have a need for speed in your data warehouse? In January, Amazon rolled out a new version of its Redshift online data store that’s powered by solid state disks (SSD). According to benchmarks, the Dense Compute Nodes proved anywhere from four to eight times faster than the traditional nodes, at the same price level.

Amazon says the new Dense Compute Nodes it unveiled January 24 deliver a high ratio of CPU, RAM, and I/O capability to storage, which makes them an ideal place to host high-performance data warehouses.

It didn’t take long for the folks at FlyData to put the new nodes (also called DW2) to the test. The company says it took DW2 about 4.3 seconds to run its queries against a 300 GB dataset, compared to more than 33 seconds for the traditional hard disk (DW1) nodes. A 1.2 TB dataset can be queried in under 10 seconds, which makes Redshift a viable place to run new workloads, such as ad delivery optimization and financial trading systems.

Amazon is selling two versions of the Redshift Dense Compute Nodes. The Large setup includes 160 GB of SSD storage, two Intel Xeon E5-2670v2 virtual cores, and 15 GB of RAM. It is also selling an “Eight Extra Large” package that includes 2.56 TB of SSD storage, 32 Intel Xeon E5-2670v2 virtual cores, and 244 GB of RAM.
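To get a rough feel for what those per-node figures mean in practice, the short Python sketch below estimates the minimum number of Dense Compute nodes whose combined SSD capacity would hold a given amount of compressed data. It is a back-of-the-envelope illustration, not Amazon's sizing method: the function name `nodes_needed` and the dictionary keys are hypothetical, and the sketch assumes that all of the quoted storage is usable for data, which ignores any headroom a real cluster would need.

```python
import math

# Per-node SSD capacity in GB, taken from the figures quoted in the article.
# The keys are illustrative labels, not official node type identifiers.
NODE_STORAGE_GB = {
    "large": 160,
    "eight_extra_large": 2560,  # 2.56 TB, assuming 1 TB = 1000 GB
}


def nodes_needed(compressed_data_gb: float, node_type: str) -> int:
    """Return the smallest node count whose raw SSD capacity covers the data.

    Simplifying assumption: every quoted gigabyte is available for data,
    so the result is a lower bound rather than a recommendation.
    """
    if compressed_data_gb <= 0:
        raise ValueError("compressed_data_gb must be positive")
    capacity_gb = NODE_STORAGE_GB[node_type]
    return math.ceil(compressed_data_gb / capacity_gb)


# The 300 GB and 1.2 TB dataset sizes from the FlyData test, as examples.
print(nodes_needed(300, "large"))               # 2
print(nodes_needed(1200, "large"))              # 8
print(nodes_needed(1200, "eight_extra_large"))  # 1
```

Under these assumptions, a 1.2 TB dataset would fit on a single Eight Extra Large node by capacity alone, whereas the Large nodes would need at least eight.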

In Amazon’s case, fast data processing does not correlate with big data. Customers will save money by moving their workloads to DW2 (the Dense Compute Nodes) only when their data set is smaller than 480 GB. Above that figure, DW2 should only be considered if query and load performance is the primary objective, and cost is not a consideration.

“If you have less than 500 GB of compressed data, your lowest cost and highest performance option is Dense Compute,” Amazon says in its blog. “Above that, if you care primarily about performance, you can stay with Dense Compute and scale all the way up to clusters with thousands of cores, terabytes of RAM and hundreds of terabytes of SSD storage. Or, at any point, you can switch over to our existing Dense Storage nodes that scale from terabytes to petabytes for as little as $1,000/TB/year.”

Redshift, you will remember, exposes an SQL interface and is built on the ParAccel massively parallel processing (MPP) platform, which is now owned by Actian. Amazon first introduced Redshift in November 2012, and made the product generally available in February 2013.

Amazon offers pre-built interfaces to a number of data analytics and business intelligence packages, including Actian, Actuate, Birst, Chartio, Datawatch (announced today), Dundas, Infor, Jaspersoft, JReport, Looker, MicroStrategy, Pentaho, Redrock BI, SiSense, and Tableau.
