Facebook’s Open Compute Cold Storage on Display

Facebook’s Open Compute Architecture was on display this week when the company opened its newest cold storage facility to the press.

The new 62,000-square-foot facility, which began migrating data last week, houses cold storage servers for all of the photo and video albums that users have buried deep in their profiles. Facebook currently estimates that it has more than 240 billion photos on its network, with users uploading approximately 350 million new photos every day.

Just as interesting is this latest implementation of Facebook’s open hardware idea, as expressed through the Open Compute Project, which Facebook engineers launched two years ago to develop hardware using the same model traditionally associated with open source software projects.

Starting from square one, the engineers behind the Open Compute Project focused their efforts on maximizing efficiency in virtually every aspect imaginable. Their measures include:

- Using a 480-volt electrical distribution system to reduce energy loss.

- Removing anything in its servers that didn’t contribute to efficiency.

- Reusing hot-aisle air in winter to heat both the offices and the outside air flowing into the data center.

- Eliminating the need for a central uninterruptible power supply.
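As a rough illustration of why a higher distribution voltage reduces energy loss: for a fixed load power $P$ carried over conductors of resistance $R$, the current falls as the voltage rises, and resistive loss grows with the square of the current. The comparison below assumes a 208 V distribution as the baseline, a figure chosen here only for illustration rather than taken from Facebook’s design, and it ignores conversion-stage losses.

$$
I = \frac{P}{V}, \qquad P_{\text{loss}} = I^2 R = \frac{P^2 R}{V^2}
$$

$$
\frac{P_{\text{loss}}(480\ \text{V})}{P_{\text{loss}}(208\ \text{V})} = \left(\frac{208}{480}\right)^2 \approx 0.19
$$

Under those simplifying assumptions, the same load loses roughly a fifth as much power in the wiring at 480 V.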

This effort has produced datacenter designs such as the Open Rack, built around a “grid to gates” holistic philosophy that considers everything from the power grid to the gates in the motherboard chips, and the Open Vault storage server, built to prioritize high density and serviceability. (Note: Taylor Hatmaker of ReadWrite attended the opening and got some shots worth checking out.)

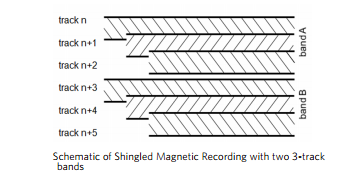

A noteworthy feature of the new cold storage datacenter is that it is an early implementation of the Open Compute Project’s new cold storage specification, which pushes to the extremes of high capacity at the lowest possible price point, sparing no expense to spare no expense. Central to this specification is the Shingled Magnetic Recording (SMR) HDD, a hard drive technology with considerable implications for big data systems because it can store up to eight times as much data as a standard HDD.
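To see why shingling complicates writes, here is a toy model of a single SMR band, written for illustration only. It assumes, as a simplification, that each track partially overlaps the next, so updating a track in place forces the drive to rewrite every occupied track after it in the band, while appending at the end of the band costs a single track write. `ShingledBand` and `track_writes` are hypothetical names, not part of any Seagate or Open Compute interface.

```python
from typing import List, Optional


class ShingledBand:
    """Toy model of one shingled band (hypothetical, for illustration).

    Each track overlaps the next, so writing a track clobbers the tracks
    after it; their data must be read first and rewritten afterward.
    """

    def __init__(self, num_tracks: int) -> None:
        self.tracks: List[Optional[bytes]] = [None] * num_tracks
        self.track_writes = 0  # physical track writes performed so far

    def write(self, index: int, data: bytes) -> None:
        # Read-modify-write: save the tail of the band before it is clobbered.
        tail = self.tracks[index + 1:]
        self.tracks[index] = data
        self.track_writes += 1
        for i, old in enumerate(tail, start=index + 1):
            if old is not None:
                # Restore each occupied track damaged by the overlapping write.
                self.tracks[i] = old
                self.track_writes += 1


band = ShingledBand(num_tracks=8)
for i in range(8):
    band.write(i, b"photo-%d" % i)  # sequential fill: one write per track
print(band.track_writes)  # 8
band.write(2, b"edited")  # in-place update of track 2 also rewrites tracks 3-7
print(band.track_writes)  # 14
```

The asymmetry is the point: sequential, append-style writes stay cheap, while small in-place updates get expensive, which fits data that is written once and rarely touched again.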

Developed by Seagate and put through its paces at Carnegie Mellon’s Parallel Data Laboratory, SMR HDD technology has matured very quickly. While the way it “shingles” storage tracks greatly expands how much data a drive can hold, the technology also has limitations. Among them, SMR HDDs are extremely sensitive to vibration, so only one of the 15 drives on an Open Vault tray can spin at any given time. That is not ideal for a “hot” data system, but it suits the low access demands of a cold one.
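A cold system can live with this constraint because pending reads can be grouped. The sketch below is a hypothetical scheduler, not Facebook’s software. It assumes that spinning a drive up is the dominant cost and that at most one drive per tray spins at once, then batches pending `(drive, block)` requests so each drive spins up only once. The tray size of 15 comes from the article; the function names and the request format are invented for illustration.

```python
from typing import Dict, Iterable, List, Tuple

TRAY_DRIVES = 15  # drives per Open Vault tray, per the article


def count_spin_ups(drive_order: Iterable[int]) -> int:
    """Count spin-ups when drives are visited in this order, assuming
    at most one drive spins at a time."""
    spin_ups, active = 0, None
    for drive in drive_order:
        if drive != active:
            spin_ups += 1  # spin the active drive down and this one up
            active = drive
    return spin_ups


def batch_by_drive(requests: Iterable[Tuple[int, int]]) -> List[Tuple[int, List[int]]]:
    """Group (drive, block) requests so each drive is spun up once.
    Drives are served in the order of their first request."""
    batches: Dict[int, List[int]] = {}
    for drive, block in requests:
        if not 0 <= drive < TRAY_DRIVES:
            raise ValueError(f"drive {drive} is not on this tray")
        batches.setdefault(drive, []).append(block)
    return list(batches.items())


requests = [(3, 10), (7, 2), (3, 11), (7, 5), (1, 0)]
print(count_spin_ups(d for d, _ in requests))  # 5 in arrival order
plan = batch_by_drive(requests)
print(plan)  # [(3, [10, 11]), (7, [2, 5]), (1, [0])]
print(count_spin_ups(d for d, _ in plan))  # 3
```

Batching trades latency for fewer spin-ups: a request may wait while another drive finishes, which is acceptable for rarely viewed photos but would not be for a hot tier.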

Facebook says that more than 80 percent of its traffic involves a mere 9 percent of the photos stored on its network, giving the company every reason to cut as much fat as possible in its newest cold storage facility.
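A skew like this is what makes a separate cold tier worthwhile. As a minimal sketch, assuming per-photo access counts are available, the function below finds the smallest fraction of photos, taken from most to least accessed, that accounts for a given share of traffic. The function name and the synthetic counts in the example are hypothetical and are not Facebook data.

```python
from typing import Sequence


def hot_set_fraction(access_counts: Sequence[int], traffic_share: float = 0.8) -> float:
    """Smallest fraction of items whose accesses cover `traffic_share`
    of all traffic, counting the most-accessed items first."""
    counts = sorted(access_counts, reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0  # no traffic at all: nothing needs to stay hot
    covered = 0
    for n, count in enumerate(counts, start=1):
        covered += count
        if covered >= traffic_share * total:
            return n / len(counts)
    return 1.0


# Synthetic example: 9 popular photos and 91 rarely viewed ones.
counts = [90] * 9 + [2] * 91
print(hot_set_fraction(counts))  # 0.09
```

In this toy data, everything outside the top 9 percent serves under a fifth of the traffic, making those items candidates for cheaper, slower storage.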

A week into operation, Facebook says the new facility is hosting more than 9 petabytes of user data and growing.
