Tag: Nvidia

IBM: We’re the Red Hat of Deep Learning

IBM today took the wraps off a new release of PowerAI, the prepackaged bundle of deep learning frameworks that debuted last fall. With the addition of Google's TensorFlow framework, the company says its AI business model Read more…

Spark ML Runs 10x Faster on GPUs, Databricks Says

Apache Spark machine learning workloads can run up to 10x faster by moving them to a deep learning paradigm on GPUs, according to Databricks, which today announced its hosted Spark service on Amazon's new GPU cloud. Read more…

Power8 with NVLink Coming to the Nimbix Cloud

Starting later this month, HPC professionals and data scientists wishing to try out NVLink’d Nvidia Pascal P100 GPUs won’t have to spend upwards of $100,000 on NVIDIA’s DGX-1 server or fork over about half that for Read more…

AWS Beats Azure to K80 General Availability

Amazon Web Services has seeded its cloud with Nvidia Tesla K80 GPUs to meet the growing demand for accelerated computing across an increasingly diverse range of workloads. The P2 instance family is a welcome addition fo Read more…

IBM Debuts Power8 Chip with NVLink and 3 New Systems

Not long after revealing more details about its next-gen Power9 chip due in 2017, IBM today rolled out three new Power8-based Linux servers and a new version of its Power8 chip featuring on-chip NVLink interconnect. One Read more…

How GPU-Powered Analytics Improves Mail Delivery for USPS

When the United States Postal Service (USPS) set out to buy a system that would allow it to track the location of employees, vehicles, and individual pieces of mail in real time, an in-memory relational database was its Read more…

AI to Surpass Human Perception in 5 to 10 Years, Zuckerberg Says

Machine learning-powered artificial intelligence will match and exceed human capabilities in the areas of computer vision and speech recognition within five to 10 years, Facebook CEO Mark Zuckerberg predicted this week. Read more…

New NVIDIA GPU Drives Launch of Facebook’s ‘Big Sur’ Deep Learning Platform

Facebook continues to pour internet-scale money into Deep Learning and AI, announcing its new “Big Sur” computing platform designed to double the speed for training neural networks of twice the size. NVIDIA’s Read more…

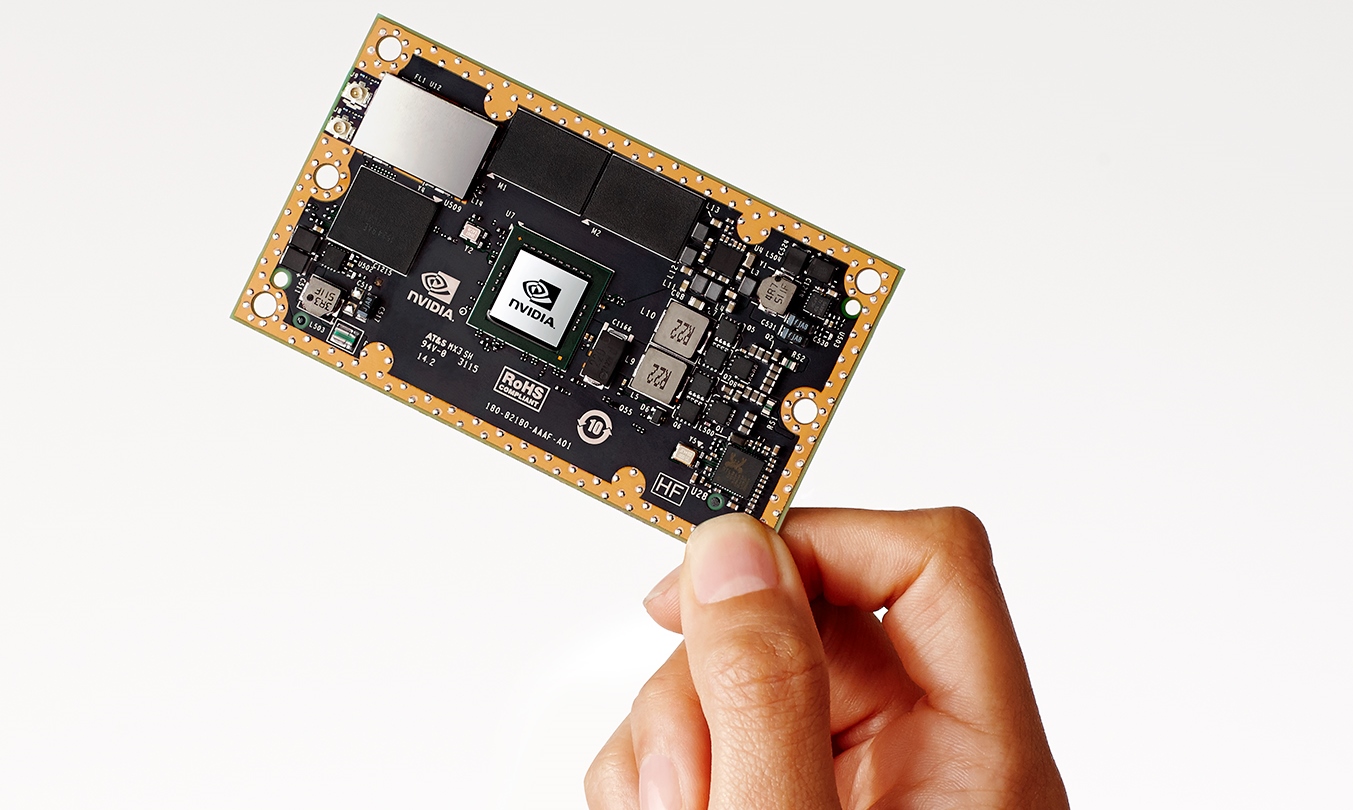

Nvidia Sets Deep Learning Loose with Embeddable GPU

Nvidia (NASDAQ: NVDA) this week unveiled the Jetson TX1, a credit card-sized device that packs a computational wallop for tasks such as machine learning, computer vision, and data analytics. The company envisions custome Read more…

Inside Yahoo’s Super-Sized Deep Learning Cluster

As the ancestral home of Hadoop, Yahoo is a big user of the open source software. In fact, its 32,000-node cluster is still the largest in the world. Now the Web giant is souping up its massive investment in Hadoop t Read more…

How NVIDIA Is Unlocking the Potential of GPU-Powered Deep Learning

Companies across nearly all industries are exploring how to use GPU-powered deep learning to extract insights from big data. From self-driving cars and voice-directed phones to disease-detecting mirrors and high-speed se Read more…

A Shoebox-Size Data Warehouse Powered by GPUs

When it comes to big data, the size of your computer definitely matters. Running SQL queries on 100 TB of data or joining billions of records, after all, requires horsepower. But organizations with big data aspirations a Read more…

MIT Spinout Exploits GPU Memory for Vast Visualization

An MIT research project turned open source project dubbed the Massively Parallel Database (Map-D) is turning heads for its capability to generate visualizations on the fly from billions of data points. The software (an SQL-based, column-oriented database that runs in the memory of GPUs) can deliver interactive analysis of 10TB datasets with millisecond latencies. For this reason, its creator feels comfortable calling it "the fastest database in the world." Read more…

This is Your Brain on GPUs

By now, we were told, we’d each have an intelligent robot assistant who would perform all the boring and repetitive tasks for us, freeing us to live a life of leisure. While that Jetsons-esque future never quite materialized, recent breakthroughs in machine learning and GPU performance are enhancing our lives in other ways. Read more…

GPUs Push Big Data’s Need for Speed

During his keynote this week at the GPU Technology Conference, NVIDIA CEO Jen-Hsun Huang provided a few potent examples of how web-driven big data applications are pushing their real-time delivery envelope by adding GPUs into the fray. Among these users is audio recognition service Shazam, which.... Read more…

Python Wraps Around Big, Fast Data

Python is finding its way into an ever-expanding set of use cases that fall into both the high performance computing and big data buckets. Read more…

Fuzzy Thinking about GPUs for Big Data

There are plenty of companies, big ones and startups alike, that are trying to bridge the gap between big data and fast data. These same vendors are also trying to move analytics from reactive to predictive, as well as trying to provide the power of supercomputers on a simple desktop. Fuzzy Logix, profiled here by NVIDIA in its startup series, is one of those vendors emphasizing GPU-based computing in order to achieve those goals. Read more…

The GPU “Sweet Spot” for Big Data

GPUs have stirred some vicious waves in the supercomputing community, and these same performance boosts are being explored for large-scale data mining by a number of enterprise users. During our conversation with NVIDIA's Tesla senior manager for high performance computing, Sumit Gupta, we explored how traditional data... Read more…

GPUs Tackle Massive Data of the Hive Mind

LIVE from GTC12 -- The flock of birds that weaves seamlessly through the sky, propelled forward as one but without a leader. Or the school of shining fish darting through a sea of prey with one mind and lightning-quick collective reactions to stimuli. These are phenomena that one Princeton researcher, armed with Tesla GPU and CUDA.... Read more…