IDC HPC Forum

9/6/16 – 9/8/16

In this challenging new world of massive data growth, rapid data movement, and ever-increasing demand for computational performance, supercomputers have become the engines driving the discoveries that impact all of our lives.

The immense compute power provided by supercomputers allows researchers in government, academia, science, engineering, and industry to extract insight from incredibly large data sets and to produce computationally intensive models and simulations that help us solve our most complex problems. These high performance computing (HPC) systems are enabling the national research community to break the barriers of traditional computing and gain the computational power needed to address their most demanding research initiatives.

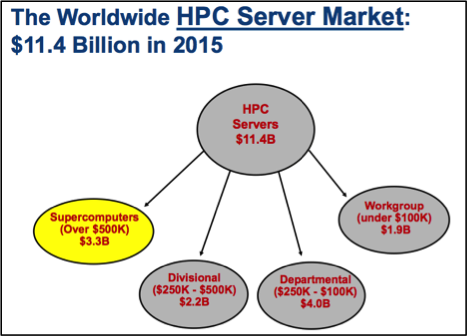

The importance of HPC to scientific, technological, and economic leadership has made it one of the fastest-growing technology sectors over the last 15 years, even during periods of economic turbulence. According to IDC’s annual HPC Market Update, the worldwide HPC server market grew 11 percent in 2015 to reach $11.4 billion, with the supercomputer segment (HPC systems costing over $500,000) accounting for $3.3 billion.

Source: IDC HPC Market Update, 2016

The projected growth of the supercomputer segment, from $3.3 billion in 2015 to $4.5 billion by 2020, indicates that companies around the world are investing in systems with tremendous processing capabilities to gain an economic and competitive edge. The growth of Big Data and the increasing adoption of HPC are driving a fundamental industry shift, one that is making massively parallel processing available to companies of all sizes and disruptive innovation achievable for virtually every enterprise.
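To put that projection in perspective, the implied compound annual growth rate (CAGR) can be worked out from the two segment figures quoted above. The sketch below is a back-of-envelope calculation, not an IDC figure: it assumes smooth compounding over the five years from 2015 to 2020, and the `cagr` helper is a hypothetical name introduced only for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns start into end."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Supercomputer segment revenue in billions of US dollars (2015 actual, 2020 projection).
# Assumption: growth compounds evenly over the five years between the two figures.
rate = cagr(3.3, 4.5, years=2020 - 2015)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 6.4%"
```

In other words, the projection corresponds to roughly 6.4 percent growth per year for the supercomputer segment, compounded.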

Supercomputers are now in widespread use among universities, government agencies, medical research institutions, and large manufacturers. Advanced HPC systems offer these organizations the ability to process data, run systems, and solve problems across a variety of applications at a scale never before envisioned, including quantum mechanics, weather forecasting, climate research, oil and gas exploration, molecular modeling, physical simulations, aerodynamics, cryptanalysis, and more.

Whether working toward the next major scientific breakthrough or trying to build a better, faster widget than the competition, HPC users are always in search of higher computing performance and speed. However, the massive space and energy requirements of traditional supercomputers can impede the growth of supercomputing power and slow the pace of innovation.

Traditional computing systems quickly run out of space, power, and cooling as organizations move into highly parallel, compute-intensive processing environments. Once compute requirements reach the petaflop level, a transition to new purpose-built, highly efficient HPC solutions becomes critical if we are to make effective use of these unprecedentedly large data sets.

A trusted compute partner can deliver HPC systems that are easier to implement, manage, and support, helping enterprises achieve computing performance as limitless as the human imagination. Thought leaders who can bring next-generation HPC technologies to the mainstream market will give every customer access to highly efficient systems capable of handling many different workloads, allowing users to tackle their most challenging projects with familiar applications and programming languages.

We live in a world where the clock is always ticking, and the race is on to uncover the answer, find the cure, predict the next earthquake, and create the next new innovation. At HPE, we’re taking huge leaps forward to make supercomputing systems, architected specifically to empower data-driven breakthroughs, more widely available and to lead us into the next realm of technological possibilities.