Linux Foundation Promotes Open Source RAG with OPEA Launch

An offshoot of the Linux Foundation today announced the Open Platform for Enterprise AI (OPEA), a new community project intended to drive open source innovation in data and AI. OPEA will focus in particular on developing open standards for retrieval augmented generation (RAG), which the group says possesses the capacity “to unlock significant value from existing data repositories.”

OPEA was created by the LF AI & Data Foundation, the Linux Foundation offshoot founded in 2018 to facilitate the development of vendor-neutral, open source AI and data technologies. OPEA fits right into that paradigm, with a goal of facilitating the development of flexible, scalable, and open source generative AI technology, particularly around RAG.

RAG is an emerging technique that brings outside data to bear on large language models (LLMs) and other generative AI models. Instead of relying entirely on pre-trained LLMs that are prone to making things up, RAG helps steer the AI model toward providing relevant and contextual answers. It’s viewed as a less risky and less time-intensive alternative to training one’s own LLM or sharing sensitive data directly with LLMs like GPT-4.
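To make the idea concrete, here is a minimal sketch of the two steps RAG adds in front of a model: retrieve the passages most relevant to a question, then place them in the prompt. Everything in it is an illustrative assumption rather than anything OPEA specifies: the function names (`tokenize`, `score`, `retrieve`, `build_prompt`), the sample documents, and the simple word-overlap ranking, which stands in for the vector search a real system would typically use.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, doc):
    """Count how many query tokens also occur in the document (toy relevance)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(query, docs, k=2):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]

def build_prompt(query, docs, k=2):
    """Augment the question with retrieved context before it reaches a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical enterprise documents, made up for this example.
DOCS = [
    "The refund window for hardware orders is 30 days.",
    "Support is available on weekdays from 9am to 5pm.",
    "Enterprise plans include single sign-on.",
]

prompt = build_prompt("How many days is the refund window?", DOCS, k=1)
print(prompt)
```

The resulting prompt, not the bare question, is what would be sent to the LLM, so the model answers from the supplied passage instead of from memory alone.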

GenAI and RAG techniques have emerged quickly, but that rapid emergence has also led to “a fragmentation of tools, techniques, and solutions,” the LF AI & Data Foundation says. The group intends to address that fragmentation by working with the industry to create standardized components, “including frameworks, architecture blueprints and reference solutions that showcase performance, interoperability, trustworthiness and enterprise-grade readiness.”

The emergence of RAG tools and techniques will be a central focus for OPEA in its quest to create standardized tools and frameworks, says Ibrahim Haddad, the executive director of LF AI & Data.

“We’re thrilled to welcome OPEA to LF AI & Data with the promise to offer open source, standardized, modular and heterogenous [RAG] pipelines for enterprises with a focus on open model development, hardened and optimized support of various compilers and toolchains,” Haddad said in a press release posted to the LF AI & Data website.

“OPEA will unlock new possibilities in AI by creating a detailed, composable framework that stands at the forefront of technology stacks,” Haddad continued. “This initiative is a testament to our mission to drive open source innovation and collaboration within the AI and data communities under a neutral and open governance model.”

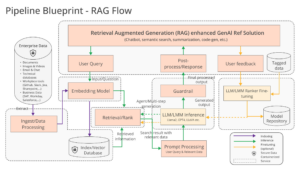

OPEA already has a blueprint for a RAG solution that’s made up of composable building blocks, including data stores, LLMs, and prompt engines. You can see more at the OPEA website at opea.dev.
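As a rough illustration of what “composable building blocks” can mean in practice, the sketch below wires a data store, a prompt engine, and a model together behind small interchangeable interfaces. It is not OPEA’s blueprint or API: `RagPipeline`, `keyword_store`, `simple_prompt`, and `stub_model` are hypothetical names invented for this example, and the model is a stub that simply echoes its prompt.

```python
from dataclasses import dataclass
from typing import Callable, List

# Each building block is just a callable, so any one can be swapped out.
Retriever = Callable[[str], List[str]]
PromptEngine = Callable[[str, List[str]], str]
Generator = Callable[[str], str]

@dataclass
class RagPipeline:
    retrieve: Retriever
    make_prompt: PromptEngine
    generate: Generator

    def answer(self, question: str) -> str:
        passages = self.retrieve(question)
        prompt = self.make_prompt(question, passages)
        return self.generate(prompt)

def keyword_store(docs: List[str]) -> Retriever:
    """Toy data store: return documents sharing at least one word with the question."""
    def retrieve(question: str) -> List[str]:
        words = set(question.lower().split())
        return [d for d in docs if words & set(d.lower().split())]
    return retrieve

def simple_prompt(question: str, passages: List[str]) -> str:
    """Toy prompt engine: prepend the retrieved passages to the question."""
    return "Context: " + " ".join(passages) + "\nQuestion: " + question

def stub_model(prompt: str) -> str:
    """Stand-in for an LLM call; a real deployment would call a model here."""
    return "STUB ANSWER based on:\n" + prompt

docs = [
    "OPEA was announced by LF AI & Data.",
    "MariaDB is a relational database.",
]
pipeline = RagPipeline(keyword_store(docs), simple_prompt, stub_model)
result = pipeline.answer("Who announced OPEA?")
print(result)
```

Because the pipeline depends only on the three call signatures, replacing the keyword store with a vector database or the stub with a hosted model would not require changes to `RagPipeline` itself.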

There’s a familiar cast of vendors joining OPEA as founding members, including Anyscale, Cloudera, DataStax, Domino Data Lab, Hugging Face, Intel, KX, MariaDB Foundation, MinIO, Qdrant, Red Hat, SAS, VMware, Yellowbrick Data, and Zilliz, among others.

“The OPEA initiative is crucial for the future of AI development,” says MinIO CEO and cofounder AB Periasamy. “The AI data infrastructure must also be built on these open principles. Only by having open source and open standard solutions, from models to infrastructure and down to the data are we able to create trust, ensure transparency, and promote accountability.”

“We see huge opportunities for core MariaDB users–and users of the related MySQL Server–to build RAG solutions,” said Kaj Arnö, CEO of MariaDB Foundation. “It’s logical to keep the source data, the AI vector data, and the output data in one and the same RDBMS. The OPEA community, as part of LF AI & Data, is an obvious entity to simplify Enterprise GenAI adoption.”

“The power of RAG is undeniable, and its integration into gen AI creates a ballast of truth that enables businesses to confidently tap into their data and use it to grow their business,” said Michael Gilfix, Chief Product and Engineering Officer at KX.

“OPEA, with the support of the broader community, will address critical pain points of RAG adoption and scale today,” said Melissa Evers, Intel’s vice president of software engineering group and general manager of strategy to execution. “It will also define a platform for the next phases of developer innovation that harnesses the potential value generative AI can bring to enterprises and all our lives.”
