Anyscale Takes On LLM Challenges with Aviary

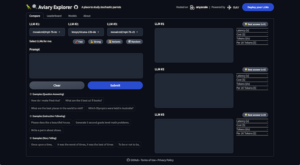

Anyscale has announced a new open source project called Aviary focused on developing applications with open source large language models.

With so many new open source LLMs to choose from, it can be difficult to decide which model is best suited to a particular application. Aviary is designed to simplify the process of selecting and integrating the best LLM for a given project, Anyscale says.

“Our goal is to ensure that any developer can integrate AI into their products and to make it easy to develop, scale, and productionize AI applications without building and managing infrastructure. With the Aviary project, we are giving developers the tools to leverage LLMs in their applications,” said Robert Nishihara, co-founder and CEO of Anyscale, in a release. “AI is moving so rapidly that many companies are finding that their infrastructure choices prevent them from taking advantage of the latest LLM capabilities. They need access to a platform that lets them leverage the entire open source LLM ecosystem in a future-proof, performant, and cost-effective manner.”

Interest in building custom applications based on generative AI is exploding. Gartner recently predicted that by 2026, 75% of newly developed enterprise applications will incorporate AI- or ML-based models, up from less than 5% in 2023.

Anyscale notes that integrating LLM capabilities presents challenges like managing multiple models, customizing models and integrating application logic, upgrading models and ensuring high availability, scaling up or down based on demand, and maximizing GPU utilization to lower costs.

To address these challenges, Anyscale is offering Aviary as fully open source, free, cloud-based LLM-serving infrastructure designed to help developers choose and deploy the right technologies and approach for their LLM-based applications. Aviary lets users submit test prompts to open source LLMs, including models from CarperAI and StabilityAI, Dolly 2.0, Llama, Vicuna, and Amazon’s LightGPT.

“Using Aviary, application developers can rapidly test, deploy, manage, and scale one or more pre-configured open source LLMs that work out of the box, while maximizing GPU utilization and reducing cloud costs. With Aviary, Anyscale is democratizing LLM technology and putting it in the hands of any application developer who needs it, whether they work at a small startup or a large enterprise,” the company said in a release.

Anyscale is the company behind Ray, an open source unified compute framework that distributes ML workloads, such as training, across multiple CPUs, GPUs, and other hardware.

Aviary is built on Ray Serve, the company’s open source library for serving and scaling AI applications, including LLMs. Anyscale says Ray Serve provides production-grade features including fault tolerance, dynamic model deployment, and request batching. Aviary is designed for multi-LLM orchestration and supports continuous A/B testing of models over time. The system partitions models across GPUs and provides model management and autoscaling across heterogeneous compute to reduce costs, the company asserts.

Anyscale says Aviary also aids developers on the journey to production deployment of LLMs. It includes libraries, tooling, examples, documentation, and sample code—all available in open source and readily adaptable for small experiments or large evaluations.

“Open source models and infrastructure are a great breakthrough for democratizing LLMs,” said Clem Delangue, CEO of Hugging Face, in a release. “We’ve attracted an enormous community of open source model builders at Hugging Face. Anything that makes it easier for them to develop and deploy is a win, especially something like Aviary coming from the team behind Ray.”

