ChatGPT Gives Kinetica a Natural Language Interface for Speedy Analytics Database

(SuPatMaN/Shutterstock)

It would normally take quite a bit of complex SQL to tease a multi-pronged answer out of Kinetica’s high-speed analytics database, which is powered by GPUs but wire-compatible with Postgres. But with the new natural language interface to ChatGPT unveiled today, non-technical users can get answers to complex questions posed in plain English.

Kinetica was incubated by the U.S. Army over a decade ago to pore through huge mounds of fast-moving geospatial and temporal data in search of terrorist activity. By leveraging the processing capability of GPUs, the vector database could run full table scans on the data, whereas other databases were forced to winnow down the data with indexes and other techniques. (It has since added support for CPUs through Intel’s AVX-512 instructions.)

With today’s launch of its new Conversational Query feature, Kinetica’s massive processing capability is now within reach of workers who can’t write complex SQL queries. That democratization of access means executives and others with ad hoc data questions can now tap the power of Kinetica’s database to get answers.

The vast majority of database queries are planned, which lets organizations build indexes, denormalize the data, or pre-compute aggregates so those queries run efficiently, says Kinetica co-founder and CEO Nima Negahban.

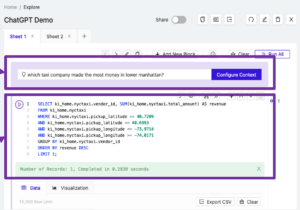

A user can submit a natural language query directly in the Kinetica dashboard, and ChatGPT converts it to SQL for execution.

“With the advent of generative large language models, we think that that mix is going to change to where a lot bigger portion of it’s going to be ad hoc queries,” Negahban tells Datanami. “That’s really what we do best, is do that ad hoc, complex query against large datasets, because we have that ability to do large scans and leverage many-core compute devices better than other databases.”

Conversational Query works by converting a user’s natural language query into SQL. That conversion is handled by OpenAI’s ChatGPT large language model (LLM), which has proven itself a quick learner of language, whether spoken, computer, or otherwise. The OpenAI API then returns the finished SQL, which users can choose to execute against the database directly from the Kinetica dashboard.
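The generate-then-execute flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Kinetica’s implementation: the `llm` argument is a hypothetical stand-in for a call to a model such as ChatGPT, the prompt wording is invented, and Python’s built-in `sqlite3` with a toy `visits` table takes the place of Kinetica. What it shows is the two-step design: the model only produces SQL text, and nothing touches the database until the user approves it.

```python
import sqlite3
from typing import Callable, Optional

# Hypothetical model interface: takes a prompt string, returns SQL text.
# In practice this would wrap a request to an LLM service; here it is any
# function the caller supplies.
LLMFn = Callable[[str], str]


def question_to_sql(question: str, schema: str, llm: LLMFn) -> str:
    """Ask the model to translate a plain-English question into one SQL query."""
    prompt = (
        "Translate the question into a single SQL query for this schema.\n"
        f"Schema:\n{schema}\n"
        f"Question: {question}\n"
        "Return only the SQL."
    )
    # Trim whitespace and a trailing semicolon so the text can be executed as-is.
    return llm(prompt).strip().rstrip(";")


def run_if_approved(conn: sqlite3.Connection, sql: str, approved: bool) -> Optional[list]:
    """Execute the generated SQL only after the user has reviewed and approved it."""
    if not approved:
        return None
    return conn.execute(sql).fetchall()


def fake_llm(prompt: str) -> str:
    """Offline stand-in for the model: always returns the same query."""
    return (
        "SELECT place, SUM(dwell_minutes) AS total FROM visits "
        "GROUP BY place ORDER BY total DESC LIMIT 1;"
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (place TEXT, dwell_minutes REAL)")
    # Toy rows for illustration only.
    conn.executemany(
        "INSERT INTO visits VALUES (?, ?)",
        [("cafe", 12.0), ("gym", 45.0), ("cafe", 30.0)],
    )
    schema = "visits(place TEXT, dwell_minutes REAL)"
    sql = question_to_sql("Where do people hang out the most?", schema, fake_llm)
    print(sql)  # the user reviews this text before deciding to run it
    print(run_if_approved(conn, sql, approved=True))
```

Keeping generation and execution in separate functions mirrors the review step the article describes, where the user decides whether the generated query actually runs.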

Kinetica is leaning on the ChatGPT model to understand the intent of language, which is something that it’s very good at. For example, to answer the question “Where do people hang out the most?” from a massive database of geospatial data of human movement, ChatGPT is smart enough to know that “hang out” is a synonym for “dwell time,” which is how the data is officially identified in the database. (The answer, by the way, is 7-Eleven.)

Kinetica is also doing some work ahead of time to prepare ChatGPT to generate good SQL through its “hydration” process, says Chad Meley, Kinetica’s chief marketing officer.

“We have native analytic functions that are callable through SQL and ChatGPT, through part of the hydration process, becomes aware of that,” Meley says. “So it can use a specific time-series join or spatial join that we make ChatGPT aware of. In that way, we go beyond your typical ANSI SQL functions.”
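The article doesn’t detail how the hydration step works, but the general idea of giving a model schema and function context before any question arrives can be sketched as follows. Everything here is an assumption for illustration: the `SqlFunction` type, the `build_hydration_context` helper, the prompt layout, and the function name `hypothetical_spatial_join` are invented and are not Kinetica APIs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SqlFunction:
    """A database-specific SQL function the model should be told about (hypothetical)."""

    name: str
    signature: str
    description: str


def build_hydration_context(tables: Dict[str, List[str]], functions: List[SqlFunction]) -> str:
    """Assemble context text to send to the model before any user question.

    Listing tables and columns steers the model toward real names; listing
    non-standard functions lets it use them instead of plain ANSI SQL.
    """
    lines = ["You write SQL for the following database.", "", "Tables:"]
    for table, columns in sorted(tables.items()):
        lines.append(f"- {table}({', '.join(columns)})")
    lines += ["", "Non-standard functions you may call:"]
    for fn in functions:
        lines.append(f"- {fn.name}{fn.signature}: {fn.description}")
    return "\n".join(lines)


if __name__ == "__main__":
    context = build_hydration_context(
        {"pings": ["device_id", "ts", "lat", "lon"], "stores": ["store_id", "name", "lat", "lon"]},
        [
            SqlFunction(
                "hypothetical_spatial_join",
                "(left_table, right_table, radius_m)",
                "joins rows whose points lie within radius_m metres of each other",
            )
        ],
    )
    print(context)
```

The resulting text would be sent once, ahead of the user’s question, so the model can reach for a specialized join rather than approximating it with standard SQL.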

The SQL generated by ChatGPT isn’t perfect. As many are aware, the LLM is prone to seeing things that aren’t there, the so-called “hallucination” problem. But even though its SQL isn’t completely free of defects, ChatGPT is still quite useful at this stage, says Negahban, who was a 2018 Datanami Person to Watch.

“I’ve seen that it’s kind of good enough,” he says. “It hasn’t been [wildly] wrong in any queries it generates…I think it will be better with GPT-4.”
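One common way to catch hallucinated table or column names before a query runs is to ask the database to compile the generated SQL without executing it. The sketch below does this with SQLite’s `EXPLAIN` through Python’s `sqlite3` module, used here as a stand-in for Kinetica; the article doesn’t say whether Kinetica performs a check like this, and `check_generated_sql` is a hypothetical helper.

```python
import sqlite3
from typing import Optional


def check_generated_sql(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Return None if the SQL compiles against the live schema, else the error text.

    EXPLAIN makes SQLite parse and plan the statement without running it, so
    invented table or column names and syntax errors surface here.
    """
    try:
        conn.execute("EXPLAIN " + sql).fetchall()
    except sqlite3.Error as exc:
        return str(exc)
    return None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (place TEXT, dwell_minutes REAL)")
    print(check_generated_sql(conn, "SELECT place FROM visits"))
    # A hallucinated column name is rejected before anything runs.
    print(check_generated_sql(conn, "SELECT hangout_time FROM visits"))
```

A check like this only catches SQL that can’t compile; a query that runs cleanly but answers the wrong question still needs a human to review it. The returned error text could also be passed back to the model to request a corrected query.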

In the final analysis, in the time it takes a SQL pro to write the perfect seven-way join and submit it to the database, the opportunity to act on the data may be gone. That’s why pairing a “good enough” query generator with a database as powerful as Kinetica can make a difference for decision-makers, Negahban says.

“Having an engine like Kinetica that can actually do something with that query without having to do planning beforehand” is the big get, he says. “If you try to do some of these queries with Snowflake, or insert your database du jour, they really struggle because that’s just not what they’re built for. They’re good at other things. What we’re really good at, as an engine, is to do ad hoc queries no matter the complexity, no matter how many tables are involved. So that really pairs well with this ability for anyone to generate SQL across all their data asking questions about all the data in their enterprise.”

Conversational Query is available now in the cloud and on-prem versions of Kinetica.

Related Items:

ChatGPT Dominates as Top In-Demand Workplace Skill: Udemy Report

Bank Replaces Hundreds of Spark Streaming Nodes with Kinetica

Preventing the Next 9/11 Goal of NORAD’s New Streaming Data Warehouse
