Organizations Struggle with AI Bias


As organizations roll out machine learning and AI models into production, they’re increasingly cognizant of the presence of bias in their systems. Not only can this bias lead to poorer decisions by the AI systems, but it can also put the organizations running them in legal jeopardy. However, getting on top of this problem is turning out to be tougher than expected for many organizations.

Bias can creep into AI systems across a wide range of industries and use cases. For example, in a report issued last year, Harvard University and Accenture demonstrated how algorithmic bias can distort the hiring processes of human resources departments.

In their 2021 joint report “Hidden Workers: Untapped Talent,” the two organizations show how the combination of outdated job descriptions and automated hiring systems that lean heavily on algorithms to post ads for open jobs and evaluate resumes can keep otherwise qualified individuals from landing jobs.

“Arguably, today’s practices incorporate the worst of both worlds,” the authors write. “Companies remain wedded to time-honored practices, despite their significant investment in technologies to augment their processes.”

Researchers suggest a popular predictive policing product is biased against ethnic minorities (Supamotion/Shutterstock)

Policing is another area prone to the unintended consequences of algorithmic bias. In a December article titled “Crime Prediction Software Promised to Be Free of Biases. New Data Shows It Perpetuates Them,” reporters with The Markup and Gizmodo showed a striking correlation between the crime predictions made by PredPol’s predictive policing product and the ethnic makeup of specific neighborhoods.

“Overall, we found that the fewer White residents who lived in an area—and the more Black and Latino residents who lived there—the more likely PredPol would predict a crime there,” the authors wrote. “The same disparity existed between richer and poorer communities.” PredPol CEO Brian MacDonald disputed the findings.

According to a new DataRobot survey of 350 organizations across industries in the US and the UK, more than half of organizations are deeply concerned about the potential for AI bias to hurt their customers and themselves.

The survey showed that 54% of US respondents reported feeling “very concerned” or “deeply concerned” about the potential harm of AI bias in their organizations, up from 42% in a similar study conducted in 2019. Their UK colleagues were even more concerned about AI bias, with 64% sharing this sentiment, the survey says.

Just over one-third (36%) of the DataRobot survey respondents say their organizations have suffered from AI bias, with lost revenue and lost customers the most common impacts (experienced by 62% and 61%, respectively, of those reporting one or more instances of actual AI bias).

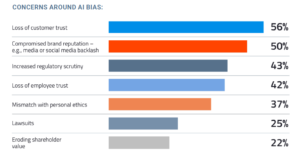

Loss of customer trust is cited as the top potential risk of AI bias, with 56% of survey respondents citing it, followed by compromised brand reputation, increased regulatory scrutiny, loss of employee trust, mismatch with personal ethics, lawsuits, and eroding shareholder value.

While three-quarters of surveyed organizations report having plans in place to detect AI bias (about one-quarter say they are “extremely confident” in their ability to detect it, and another 45% say they are “very confident”), they struggle to effectively eliminate the bias from their models and algorithms, the DataRobot survey says.

The respondents cited several specific challenges to rooting out bias, including: difficulty understanding why an AI model makes a decision; understanding the patterns between input values and a model’s decision; a lack of trust in algorithms; a lack of clarity into the training data; keeping AI models up to date; educating stakeholders to identify AI bias; and a lack of clarity around what constitutes bias.

So, what can be done about bias in AI? For starters, 81% of survey respondents say they think “government regulation would be helpful in defining and preventing AI bias.” Without government regulation, about one-third fear that AI “will hurt protected classes,” the survey says. However, 45% of respondents say they’re afraid government regulation will increase costs and make it more difficult to adopt AI. Only about 23% say they have no fears about government regulation of AI.

Better clarity into how machine learning models work could help prevent bias, DataRobot says (FGC/Shutterstock)

All told, the industry appears to be at a crossroads when it comes to bias in AI. With AI increasingly seen as a must-have for modern companies, there is considerable pressure to adopt the technology. At the same time, companies are increasingly wary of its unintended consequences, especially when it comes to ethics.

“DataRobot’s research shows what many in the artificial intelligence field have long known to be true: the line of what is and is not ethical when it comes to AI solutions has been too blurry for too long,” said Kay Firth-Butterfield, head of AI and machine learning at the World Economic Forum. “The CIOs, IT directors and managers, data scientists, and development leads polled in this research clearly understand and appreciate the gravity and impact at play when it comes to AI and ethics.”

DataRobot has been at the forefront of efforts to understand the concerns that companies and consumers have about AI bias and to develop ways to mitigate them. The company has hired dozens of employees to work for Ted Kwartler, its vice president of Trusted AI, who has taken concrete steps to thwart bias in models developed with DataRobot’s products.

“The core challenge to eliminate bias is understanding why algorithms arrived at certain decisions in the first place,” Kwartler said in a press release. “Organizations need guidance when it comes to navigating AI bias and the complex issues attached. There has been progress, including the EU proposed AI principles and regulations, but there’s still more to be done to ensure models are fair, trusted, and explainable.”
