From a robotics and information processing perspective, NASA’s missions have grown increasingly ambitious in recent years, most notably through its long-range probes and Mars rovers. But, as Shreyansh Daftry (an AI research scientist at NASA) explained in a recent talk at the 2021 AI Hardware Summit, there’s a long way yet to go – and much of it will (and must) be powered by AI.

“[This is] what I always wanted to do: become a robotics engineer that could build intelligent machines in space,” Daftry said. “But as I started to learn more, I understood that while NASA has been building these really capable machines, they’re not really what I, as a trained roboticist, would call intelligent.” Here, Daftry juxtaposed the 1969 lunar lander with the Curiosity rover, pointing out that both relied on human operators.

Changing the Human-Led Status Quo

But, he said, that paradigm would need to change – for two main reasons. “First,” Daftry said, “is the difficulty with deep space communications,” which are both severely bandwidth-limited and subject to extreme – and, with distance, increasing – latency. With NASA planning missions to places like Europa, where it would take several hours to receive and respond to a signal, operating predominantly through human control would be prohibitively inefficient.
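The latency problem comes down to simple arithmetic: a radio signal travels at the speed of light, so the delay grows linearly with distance. The sketch below assumes approximate Earth-Jupiter distances of about 588 million km at closest approach and 968 million km at farthest (Europa orbits Jupiter). It ignores relay hops, ground-station scheduling and processing time, all of which add to the real turnaround.

```python
# Speed of light in vacuum, km/s (exact by definition of the metre).
SPEED_OF_LIGHT_KM_S = 299_792.458

# Approximate Earth-Jupiter distances in km (assumed round figures; Europa orbits Jupiter).
JUPITER_DISTANCES_KM = {"closest": 588e6, "farthest": 968e6}


def light_delay_minutes(distance_km: float) -> float:
    """One-way signal travel time, in minutes, over a straight-line distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


if __name__ == "__main__":
    for label, distance in JUPITER_DISTANCES_KM.items():
        one_way = light_delay_minutes(distance)
        # A command-and-response cycle needs the signal to travel both ways.
        print(f"{label}: one-way {one_way:.1f} min, round trip {2 * one_way:.1f} min")
```

With these figures the round trip alone comes to roughly 65 to 108 minutes, before any time for a human team to interpret the data and decide on the next command.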

“The second major reason,” he continued, “is scalability.” He showed a map of all the satellites around the Earth and a diagram of a proposed Mars colony, asking the audience to imagine all of those disparate devices being controlled by their own teams of engineers and scientists. “Sounds crazy, right?” he said. “The only way we can scale up the space economy is if we can make our space assets to be self-sustainable, and artificial intelligence is going to be a key ingredient in making that happen.”

Daftry explained that there were a host of areas where AI could make a huge difference: autonomous science and planning, precision landing, dexterous manipulation, human-robot teams, and more. But for the purposes of his talk, he said, he would focus on just one: autonomous navigation.

Leading the Way (Safely) on Mars

“We landed another rover on Mars. Woohoo!” he said. “The Perseverance rover has the most sophisticated autonomous navigation system, ENav, that has ever driven on any extraterrestrial surface.” He showed a video of the system maneuvering around a rocky terrain obstacle course. “However, if you carefully look at the video captions, you’ll notice that the video was sped up fifty times.” The lunar buggy, he said, had done the same thing at a hundred times the speed fifty years ago. “The key difference between what Perseverance does and what was happening here,” he said, “is [that] the intelligence of the rover was powered by the human brain.”

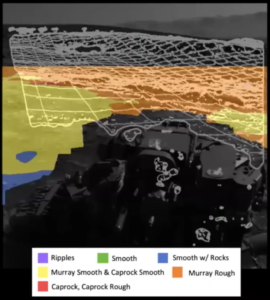

So Daftry and his colleagues have been working on developing autonomous systems that would help the rovers drive more like human drivers. “The current onboard autonomous navigation system running on Curiosity and also on Perseverance uses only geometric information,” he said, noting that the systems fail to capture textural distinctions – which could mean the difference between navigating safely and getting stuck in something like sand. “So we created SPOC, a deep learning-based terrain-aware classifier that can help Mars rovers navigate better.”

“Just like humans, SPOC can tell the terrain types based on textural features,” Daftry continued. The team developed two versions: one that runs onboard a rover and distinguishes six ground-texture classes, and one that operates on orbital images and distinguishes 17 classes. SPOC, Daftry said, was rooted in the “ongoing revolution” in computer vision and deep learning. The deep learning models it drew on, however, were developed for use on Earth, not on Mars.

Stumbling Blocks

“Adapting these models to work reliably in a Martian environment,” Daftry said, “is not trivial.” The team had applied transfer learning to bridge the gap, but ran into a few key bottlenecks. The first, he said, was data availability.

How SPOC can help rovers see the world, with textural distinctions. Image courtesy of Shreyansh Daftry/NASA.

“We did not have any labeled data to start with,” he explained. “And the fact that we have only about two dozen geologists in the world who have the knowledge to create these labels – as you can imagine, it is really hard to get time from these people to actually do manual labeling.” Even if that time had been available, the cost would have been exorbitant.

So instead, the team started a citizen science project, AI4Mars, that tasked volunteers with labeling images from the rovers, and more than 200,000 images were labeled in just a few months. But this data was also very noisy, so the team turned to synthetic data and simulation.
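A common way to reduce noise in crowdsourced labels is to collect several independent labels for the same item and keep only those where volunteers largely agree. The sketch below illustrates that idea with a simple majority vote; it is not a description of how the AI4Mars team actually cleaned its data. The item IDs, class names, and the `min_votes` and `min_agreement` thresholds are hypothetical.

```python
from collections import Counter


def aggregate_labels(
    votes: dict[str, list[str]],
    min_votes: int = 3,          # hypothetical: minimum volunteer labels per item
    min_agreement: float = 0.6,  # hypothetical: required share for the top label
) -> dict[str, str]:
    """Keep the majority label for each item, but only where enough volunteers agree."""
    consensus: dict[str, str] = {}
    for item_id, labels in votes.items():
        if len(labels) < min_votes:
            continue  # too few independent labels to trust
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            consensus[item_id] = label
        # Otherwise volunteers disagree too much; the item is dropped
        # (in practice it could be routed to an expert instead).
    return consensus


if __name__ == "__main__":
    votes = {
        "img_001": ["sand", "sand", "soil"],       # 2 of 3 agree: kept
        "img_002": ["bedrock", "soil", "sand"],    # no agreement: dropped
        "img_003": ["soil", "soil"],               # too few votes: dropped
    }
    print(aggregate_labels(votes))  # {'img_001': 'sand'}
```

Raising `min_agreement` trades label quantity for label quality, which is one reason synthetic, noise-free labels are attractive as a complement.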

“We worked with a startup called Bifrost AI who excels at generating perfectly labeled data of complex unstructured environments using 3D graphics,” Daftry said. “The data generated is highly photorealistic and looks just like Mars. In addition, the labels are perfect and noise-free.”

The second bottleneck was hardware: Daftry compared the onboard computer on Perseverance to an iMac G3 from the late 1990s. NASA, he said, was exploring a range of ways to run deep learning models onboard rovers, from developing in-house hardware to adapting popular processors like Qualcomm’s Snapdragon for use on another planet.

Third, Daftry said, was system integration and verification. “Once the rover is launched, we do not have the ability to fix things if something goes wrong, so things have to work in one shot.” This was a problem for deep learning. “Deep [convolutional neural network] models, while they work great, are inherently black-box in nature,” he said, “presenting a major bottleneck for system-level validation and proving guaranteed performance.” This, he added, was mostly addressed by “testing the heck out of our system” on Earth in comparable environments.

What’s Next?

“Overall, our long-term vision is to create something like Google Maps on Mars for our rovers,” Daftry said, “so that the human operator [need] only specify the destination they need the rover to go [to] and the rover uses the software to find its path.” Beyond that, NASA was looking at autonomous navigation for various other kinds of rovers, from deep-sea rovers to cliff-scaling rovers.
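The “Google Maps on Mars” idea combines two pieces the talk describes: a terrain classifier such as SPOC that labels the ground, and a planner that turns those labels into a route. As an illustration only, the sketch below runs textbook A* search over a small grid of terrain labels. It charges more to enter sand than bedrock and treats large rocks as impassable. The class names, costs and grid are hypothetical assumptions, and this is not how ENav or SPOC actually plan.

```python
import heapq
from typing import Optional

# Hypothetical cost of driving into one grid cell of each terrain class.
# None marks terrain the rover must not enter at all.
TERRAIN_COST: dict[str, Optional[float]] = {
    "bedrock": 1.0,
    "soil": 1.5,
    "sand": 5.0,  # passable but risky: the rover could get stuck
    "big_rock": None,
}

Cell = tuple[int, int]


def plan_path(grid: list[list[str]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* over a 4-connected grid of terrain labels; returns the cheapest path or None."""
    rows, cols = len(grid), len(grid[0])
    cheapest_step = min(c for c in TERRAIN_COST.values() if c is not None)

    def heuristic(cell: Cell) -> float:
        # Manhattan distance times the cheapest step cost never overestimates,
        # so A* still returns an optimal path.
        return (abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])) * cheapest_step

    best_cost = {start: 0.0}
    came_from: dict[Cell, Cell] = {}
    frontier = [(heuristic(start), 0.0, start)]  # entries are (g + h, g, cell)

    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            # Walk the predecessor links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = TERRAIN_COST[grid[nr][nc]]
            if step is None:
                continue  # impassable terrain
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    grid = [
        ["bedrock", "sand", "bedrock"],
        ["bedrock", "soil", "bedrock"],
    ]
    print(plan_path(grid, (0, 0), (0, 2)))  # [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```

Here the planner detours through soil (total cost 4.5) instead of crossing the sand cell directly (cost 6). A purely geometric map would see both routes as equally flat, which is the gap a texture-aware classifier is meant to close.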
