From a robotics and information processing perspective, NASA’s missions have grown increasingly ambitious over recent years, most notably through its long-range probes and Mars rovers. But, as Shreyansh Daftry (an AI research scientist for NASA) explained in a recent talk for the 2021 AI Hardware Summit, there’s a long way yet to go – and much of it will (and must) be powered by AI.

“[This is] what I always wanted to do: become a robotics engineer that could build intelligent machines in space,” Daftry said. “But as I started to learn more, I understood that while NASA has been building these really capable machines, they’re not really what I, as a trained roboticist, would call intelligent.” Here, Daftry juxtaposed the 1969 lunar lander and the Curiosity rover, pointing out that they were both human-operated for their landing and operation.

Changing the Human-Led Status Quo

But, he said, that paradigm would need to change – for two main reasons. "First," Daftry said, "is the difficulty with deep space communications," which are both severely bandwidth-limited and operate with extreme – and, with distance, increasing – latency. With NASA planning for missions to places like Europa, where it would take several hours to receive and respond to a signal, operating predominantly through human control would be prohibitively inefficient.

“The second major reason,” he continued, “is scalability.” He showed a map of all the satellites around the Earth and a diagram of a proposed Mars colony, asking the audience to imagine all of those disparate devices being controlled by their own teams of engineers and scientists. “Sounds crazy, right?” he said. The only way we can scale up the space economy is if we can make our space assets to be self-sustainable, and artificial intelligence is going to be a key ingredient in making that happen.”

Daftry explained that there were a host of areas where AI could make a huge difference: autonomous science and planning, precision landing, dexterous manipulation, human-robot teams, and more. But for the purposes of his talk, he said, he would focus on just one: autonomous navigation.

Leading the Way (Safely) on Mars

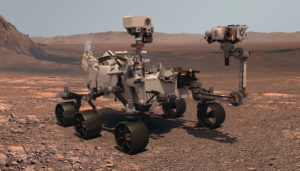

“We landed another rover on Mars. Woohoo!” he said. “The Perseverance rover has the most sophisticated autonomous navigation system, ENav, that has ever driven on any extraterrestrial surface.” He showed a video of the system maneuvering around a rocky terrain obstacle course. “However, if you carefully look at the video captions, you’ll notice that the video was sped up fifty times.” The lunar buggy, he said, had done the same thing at a hundred times the speed fifty years ago. “The key difference between what Perseverance does and what was happening here,” he said, “is [that] the intelligence of the rover was powered by the human brain.”

So Daftry and his colleagues have been developing autonomous systems that would help the rovers drive more like human drivers. "The current onboard autonomous navigation system running on Curiosity and also on Perseverance uses only geometric information," he said, noting that such systems fail to capture textural distinctions – which could mean the difference between navigating safely and getting stuck in something like sand. "So we created SPOC, a deep learning-based terrain-aware classifier that can help Mars rovers navigate better."
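To illustrate why texture matters for path planning, here is a minimal Python sketch; it is not NASA's ENav or SPOC code. It runs Dijkstra's algorithm on a small hypothetical grid where `#` cells are geometric hazards that both planners avoid, and the remaining cells carry a terrain label, bedrock (`b`) or sand (`s`), of the kind a classifier like SPOC might produce. The per-class costs in `CLASS_COST`, the grid, and the function name `plan` are all illustrative assumptions. A geometry-only planner sees sand as flat, drivable ground; a terrain-aware planner pays more for sand and detours around it.

```python
import heapq

# Hypothetical per-class traversal costs (assumed values, not from SPOC or ENav).
CLASS_COST = {"b": 1.0, "s": 20.0}  # "b" = bedrock, "s" = sand
HAZARD = "#"  # cell flagged by geometry alone (large rock, steep slope)


def plan(terrain, start, goal, terrain_aware):
    """Dijkstra on a 4-connected grid; returns (cost, path) or (inf, [])."""
    rows, cols = len(terrain), len(terrain[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break  # the first time the goal is popped, its cost is final
        if d > dist[cell]:
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            label = terrain[nr][nc]
            if label == HAZARD:
                continue  # both planners avoid geometric hazards
            # Geometry-only: every non-hazard cell looks equally drivable.
            step = CLASS_COST[label] if terrain_aware else 1.0
            nd = d + step
            if nd < dist.get((nr, nc), float("inf")):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = cell
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return float("inf"), []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


# Toy map: a sand patch in the direct line, bedrock around it.
TERRAIN = [
    "bbbbb",
    "bsssb",
    "b###b",
]

print(plan(TERRAIN, (1, 0), (1, 4), terrain_aware=False))
# (4.0, [(1, 0), (1, 1), (1, 2), (1, 3), (1, 4)])  straight through the sand
print(plan(TERRAIN, (1, 0), (1, 4), terrain_aware=True))
# (6.0, [(1, 0), (0, 0), (0, 1), (0, 2), (0, 3), (0, 4), (1, 4)])  detour over bedrock
```

Keeping the hazard check separate from the class cost mirrors the distinction Daftry draws: geometry decides what is blocked, while texture decides what is risky to drive on.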

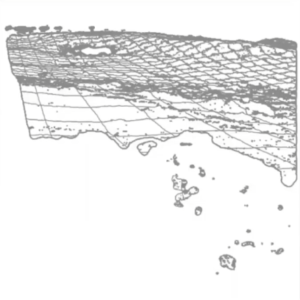

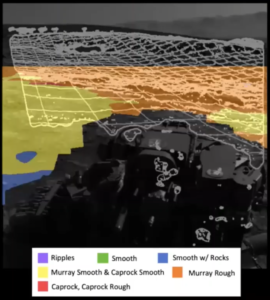

“Just like humans, SPOC can tell the terrain types based on textural features,” Daftry continued. The team developed two versions: one that works onboard a rover with six classification types for ground texture and one that operates on orbital images that has 17 classification types. SPOC, Daftry said, was rooted in the “ongoing revolution” in computer vision and deep learning. These deep learning models used, however, were intended for use on Earth – not on Mars.

Stumbling Blocks

“Adapting these models to work reliably in a Martian environment,” Daftry said, “is not trivial.” The team had applied transfer learning to bridge the gap, but there were a few key bottlenecks. First, he said, was data availability.

How SPOC can help rovers see the world, with textural distinctions. Image courtesy of Shreyansh Daftry/NASA.

“We did not have any labeled data to start with,” he explained. “And the fact that we have only about two dozen geologists in the world who have the knowledge to create these labels – as you can imagine, it is really hard to get time from these people to actually do manual labeling.” Even if they had, the cost would have been exorbitant.

So instead, the team started a citizen science project – AI4Mars – that tasked volunteers with labeling images from the rovers; they labeled more than 200,000 images in just a few months. But that data was also very noisy – so the team turned to synthetic data and simulation.

“We worked with a startup called Bifrost AI who excels at generating perfectly labeled data of complex unstructured environments using 3D graphics,” Daftry said. “The data generated is highly photorealistic and looks just like Mars. In addition, the labels are perfect and noise-free.”
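The talk does not describe how the noisy AI4Mars volunteer labels were cleaned. One common way to reduce noise in crowdsourced segmentation labels, shown here as an assumption rather than the team's method, is to collect several volunteers' labels for each pixel and keep a label only where enough of them agree. The function name `aggregate_labels`, the class names, and the `min_agree` threshold below are illustrative.

```python
from collections import Counter


def aggregate_labels(label_maps, min_agree):
    """Merge several volunteers' per-pixel label maps by majority vote.

    label_maps: equally sized 2D lists of class names; None means "not labeled".
    Pixels whose top label has fewer than min_agree votes become None, so a
    training loop could skip them.
    """
    rows, cols = len(label_maps[0]), len(label_maps[0][0])
    merged = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Count only the volunteers who actually labeled this pixel.
            votes = Counter(m[r][c] for m in label_maps if m[r][c] is not None)
            if votes:
                label, count = votes.most_common(1)[0]
                row.append(label if count >= min_agree else None)
            else:
                row.append(None)
        merged.append(row)
    return merged


# Three hypothetical volunteers labeling the same two-pixel image.
volunteers = [
    [["sand", "soil"]],
    [["sand", "bedrock"]],
    [["sand", None]],
]
print(aggregate_labels(volunteers, min_agree=2))
# [['sand', None]]  (the second pixel has no label with 2+ votes)
```

Raising `min_agree` trades coverage for cleaner labels: fewer pixels survive, but those that do reflect stronger consensus.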

The second bottleneck was hardware: Daftry compared the onboard computer on Perseverance to an iMac G3 from the late 1990s. NASA, he said, was exploring several ways to run deep learning models onboard rovers, from developing in-house hardware to adapting popular processors like Qualcomm’s Snapdragon for use on another planet.

Third, Daftry said, was system integration and verification. “Once the rover is launched, we do not have the ability to fix things if something goes wrong, so things have to work in one shot.” This was a problem for deep learning. “Deep [convolutional neural network] models, while they work great, are inherently black-box in nature,” he said, “presenting a major bottleneck for system-level validation and proving guaranteed performance.” This, he added, was mostly addressed by “testing the heck out of our system” on Earth in comparable environments.

What’s Next?

"Overall, our long-term vision is to create something like Google Maps on Mars for our rovers," Daftry said, "so that the human operator can only specify the destination they need the rover to go [to] and the rover uses the software to find its path." Beyond that, NASA was looking at autonomous navigation for various other kinds of rovers, from deep-sea rovers to cliff-scaling rovers.
