A Hybrid AI Approach to Optimizing Oil Field Planning

(pan-demin/Shutterstock)

What’s the best way to arrange wells in an oil or gas field? It’s a simple enough question, but the answer can be very complex. Now a Caltech/JPL spinoff is developing a new approach that blends traditional HPC simulation with deep reinforcement learning running on GPUs to optimize energy extraction.

The well placement game is a familiar one to oil and gas companies. For years, they have been using simulators running atop HPC systems to model underground reservoirs. Atop that model, they use some sort of optimizer to drive iterations of the model, with the goal of coming up with the optimal number, type, and placement of wells for a given field.

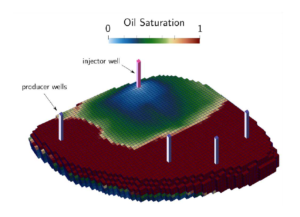

The possible combinations of number, type, and placement quickly become a challenging math problem, according to Beyond Limits’ Chief Technology Officer for Industrial AI Shahram Farhadi. Even with a “fairly simple” model with a million grid cells and five wells (one injector well and four producer wells), the number of possible moves is on the order of 10 to the 20th power, he says. By comparison, there are 5 million possible combinations of chess pieces in five moves, and 10 to the 12th possible combinations five moves into Go.

The placement of oil wells in a 3-D grid quickly becomes a challenging combinatorial problem (image courtesy Beyond Limits)

“The optimization problem is combinatoric, and it explodes really fast,” Farhadi says. “In that sense, you have an optimization that is intractable, if your only tool is brute search.”
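To get a feel for how fast the count explodes, here is a minimal Python sketch. It makes its own simplifying assumptions (each well occupies one distinct candidate site, and wells of the same type are interchangeable) and does not try to reproduce Farhadi's figure, since the article does not give the grid layout or counting rules behind it. The function name `count_placements` is hypothetical.

```python
from math import comb


def count_placements(n_sites: int, n_injectors: int, n_producers: int) -> int:
    """Count layouts that put every well on its own candidate site.

    Assumption (not from the article): wells of the same type are
    interchangeable, while injectors and producers are not.
    """
    if n_injectors + n_producers > n_sites:
        return 0
    # Pick sites for the injectors first, then producers from what is left.
    return comb(n_sites, n_injectors) * comb(n_sites - n_injectors, n_producers)


# One injector and four producers on progressively larger sets of sites.
for sites in (100, 10_000, 1_000_000):
    layouts = count_placements(sites, 1, 4)
    print(f"{sites:>9,} candidate sites -> {layouts:.2e} layouts")
```

Even at 100 candidate sites the count is already in the hundreds of millions, which is why evaluating every layout with a full physics simulation is off the table.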

The optimizers that energy companies currently rely on include things like genetic algorithms and particle swarm algorithms, Farhadi says. “They are all well and good,” he says, “but they are optimizers in the simplest sense.”

At Beyond Limits, Farhadi has spearheaded a new approach to optimizer development that leverages some of the latest breakthroughs in reinforcement learning and deep convolutional neural networks.

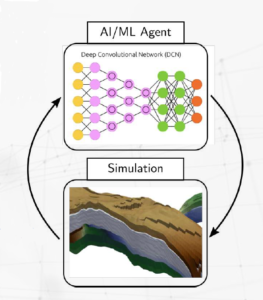

Deep learning approaches are able to work with, and learn from, much larger pools of data than traditional machine learning algorithms. The radar tomography data that is fed into traditional physics simulators is one piece of the puzzle in the well placement game. But in this case, the results from each successive run of the simulator are really what the deep learning approach builds upon. By pairing its new AI-based field planning agent with a traditional physics simulator, Beyond Limits is pushing the state of the art in oil well field planning.

According to Farhadi, the new field planning agent is able to learn from subsequent iterations of the simulator. “Winning” is defined as a high net present value (NPV) score, which is basically the predicted overall oil or gas recovery minus the costs. The HPC model represents the physics of multi-phase flow and its unique patterns, which informs well spacing (if you place wells too closely, they will draw from each other).
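As a rough illustration of the scoring idea, the sketch below computes NPV with the standard textbook formula: yearly net cash flows discounted back to the present, minus upfront cost. The article does not say how Beyond Limits computes its score, so the function `net_present_value`, the discount rate, and every number here are hypothetical.

```python
def net_present_value(cash_flows, discount_rate, upfront_cost=0.0):
    """Discounted sum of yearly net cash flows minus the upfront cost.

    cash_flows[i] is revenue minus operating cost at the end of year i + 1.
    """
    discounted = sum(
        cf / (1.0 + discount_rate) ** (year + 1)
        for year, cf in enumerate(cash_flows)
    )
    return discounted - upfront_cost


# Made-up figures, in millions of dollars.
yearly_net = [40.0, 35.0, 30.0, 25.0]   # declining production over four years
drilling_cost = 5 * 12.0                # five wells at an assumed 12 per well
print(round(net_present_value(yearly_net, 0.10, drilling_cost), 2))
```

Under this kind of scoring, adding a well only pays off if the extra discounted production outweighs the extra drilling cost, which is the trade-off an optimizer has to weigh.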

Beyond Limits’ field planning agent interacts with traditional physics simulator (image courtesy Beyond Limits)

“The reinforcement learning tries to learn by combining this representation of the states and what happened,” Farhadi tells Datanami. “Think of a sequence of actions, and then the reward of, did we lose or win. And then that reward is fed back through the system so that the system learns to only take actions that are rewarding.”

The approach essentially codifies advances in expert systems (this IP was licensed from Caltech/JPL) into a deep learning model that’s composed of perceivers and reasoners. The perceivers will create labels from the pixels, and then the reasoner will make sense of the labels to make a determination about the world, Farhadi says.

The trick that Farhadi and his team brought to bear was how to map the three-dimensional radar tomography data into winning and losing arguments that the deep learning model could act upon. The system essentially remembers what worked in previous iterations, with that experience written into the layers of the neural network, and incorporates that knowledge in each subsequent round until the improvements stop accruing and it converges on the optimal answer.
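The propose, simulate, and learn loop can be sketched in a few lines of Python. This is a toy and not Beyond Limits' algorithm: a lookup table with epsilon-greedy exploration stands in for the deep network, and a made-up scoring function (`toy_simulator`, with invented `RICHNESS` values and an invented penalty for neighbouring wells) stands in for the physics simulator.

```python
import random
from itertools import combinations

# Made-up recoverable value of each cell in a six-cell, one-dimensional field.
RICHNESS = [1.0, 3.0, 4.0, 2.0, 5.0, 1.0]


def toy_simulator(layout, rng):
    """Stand-in for the physics simulator: returns a noisy NPV-like score."""
    score = sum(RICHNESS[cell] for cell in layout)
    # Wells in neighbouring cells draw from each other, so penalise them.
    for a, b in combinations(sorted(layout), 2):
        if b - a == 1:
            score -= 3.0
    return score + rng.gauss(0.0, 0.2)


def train(n_wells=2, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning of a value estimate for every layout."""
    rng = random.Random(seed)
    layouts = list(combinations(range(len(RICHNESS)), n_wells))
    value = {layout: 0.0 for layout in layouts}   # learned score per layout
    visits = {layout: 0 for layout in layouts}
    for _ in range(episodes):
        if rng.random() < epsilon:
            layout = rng.choice(layouts)           # explore a random layout
        else:
            layout = max(layouts, key=value.get)   # exploit the best so far
        reward = toy_simulator(layout, rng)
        visits[layout] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        value[layout] += (reward - value[layout]) / visits[layout]
    return max(layouts, key=value.get), value


best_layout, learned_values = train()
print(best_layout)
```

A table like this only works because the toy has 15 possible layouts. At the scale Farhadi describes, no table could hold every layout, which is where a neural network that generalizes across image-like states comes in.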

“The reinforcement learning paradigm [works]… in a way that you kind of try to memorize the states that are image-like, in this case 3D images,” Farhadi says. “It’s fairly new. So we are actually the first to set it out. And we had to modify the algorithms quite a bit to enable them to, let’s say, go from learning a game to learning this game. The NPV is more on the continuum space. It’s not only to win.”

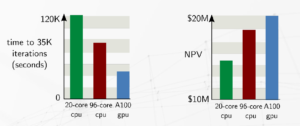

Once Farhadi and his crew developed the new field planning agent, the next step was getting it to scale to the limits that oil companies will need. Beyond Limits, which was founded in Glendale, California, in 2014, benchmarked the new model on three different setups: a 20-core CPU system, a 96-core CPU system, and a single core Nvidia A100 GPU system.

Not surprisingly, the GPU-based system showed the best performance on the benchmark tests, in terms of both iteration time and NPV (see figure). The company has already partnered with one oil company, which realized $50 million in production value from the project, the company says.

The hybrid AI approach delivered a 184% peak increase in processing speed compared to standard operations, Beyond Limits says. The field planning agent delivered a 15% improvement in the number of simulations run compared to other optimization techniques. What’s more, the environmental impact was minimized, as the company was able to reduce the number of wells to one water injector and four oil producers, compared to eight to 12 injector wells and four producers in the standard configuration, the company says.

“This decreases the amount of drilling that is needed,” Farhadi says. “But also I think it’s important to abstract this way a little bit and think of the simulation as any other industrial simulator. We [foresee] similar thing with power plants and with refineries.”
