Why AI Has Yet to Deliver on Promises in Healthcare

(Andrey_Popov/Shutterstock)

We’ve reached the point where computer vision and natural language processing (NLP) programs can match, and in some cases exceed, the capabilities of humans. In some medical specialties, such as radiology, AI stands ready to provide diagnostic expertise that medical schools cannot supply in sufficient numbers. But when you look at the overall picture in healthcare, AI has yet to live up to the hype.

The age of big data was supposed to give us the ability to crunch massive amounts of historical and real-time medical data and arrive at optimal treatments for all human diseases and ailments. With the right machine learning tools running against our personal medical histories, freshly digitized in pricey electronic medical record (EMR) systems, the era of precision medicine would soon be available to everyone.

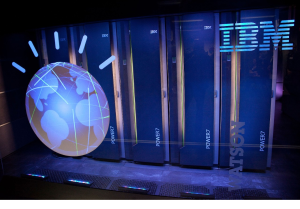

Back in 2011, just days after IBM’s Watson supercomputer won Jeopardy!, IBM announced that Watson was going back to school to become an AI doctor. In 2012, IBM partnered with Memorial Sloan Kettering Cancer Center to co-develop a product called Watson for Oncology.

By training Watson for Oncology on all of the best textbooks, clinical reports, and any other medical information available, the AI would be able to identify even the rarest forms of cancer and avoid the kind of cognitive bias that afflicts human doctors.

At least that was the hope. But after the cancer clinic spent a reported $62 million developing the AI system with IBM, Watson for Oncology has yet to have the major impact that was promised. While Watson could scan thousands of pages of medical text and find correlations among conditions, treatments, and outcomes, the AI was not learning as a real cancer doctor would.

“The information that physicians extract from an article, that they use to change their care, may not be the major point of the study,” Memorial Sloan Kettering’s Mark Kris, who led the deployment, told IEEE Spectrum in 2019. Watson can find statistical correlations. “But doctors don’t work that way,” Kris said. (Despite these challenges, Watson for Oncology was still being used at Memorial Sloan Kettering as of 2019.)

Watson isn’t the only medical AI to run into the buzz saw of the real world. The space is rife with projects that failed outright, as well as projects that have yet to live up to the hype (like Watson).

There are numerous reasons why this is the case. In the Nature paper “The ‘inconvenient truth’ about AI in healthcare,” a trio of Harvard and MIT professors argue that “the algorithms that feature prominently in research literature are in fact not, for the most part, executable at the frontlines of clinical practice.”

There are two main reasons for this, the researchers say. First, the AI programs do not fit in well with the status quo in the healthcare environment. “Simply adding AI applications to a fragmented system will not create sustainable change,” they write.

Second, the data infrastructure in most healthcare organizations is inadequate to support the level of localization required to get a good “fit” with the AI system. There’s also not enough data to train models for all the different patient cohorts an AI system will encounter in the real world, which diminishes its usefulness.

Despite the challenges of getting AI into real-world healthcare practice, the potential benefits are enormous. The world spends trillions of dollars annually on healthcare, and yet millions still suffer from preventable conditions and diseases. The possibility of saving huge sums, not to mention many lives, keeps AI researchers and vendors moving forward.

One person who’s optimistic about the potential to achieve better results with AI in healthcare is David Talby. As the CTO of John Snow Labs, Talby helps lead the development of Spark NLP, which is the most widely used NLP library in the world, according to Ben Lorica’s NLP Industry Survey.

“First of all, the technology fundamentally works,” Talby tells Datanami in a recent interview. “Right now, for most tasks, you can match or exceed human level of accuracy. I can say this about NLP, about text, but really we’re getting similar results on image processing.”

Don’t underestimate the importance of that, Talby says, because it means that specific AI programs have essentially the same level of skill as a radiologist or an ophthalmologist with a decade of training.

“And if we have an algorithm that, out of the box, can be as good as a 10- or 15-year specialist, it’s much better in terms of the amount of suffering and death that’s preventable,” he says. “In most of the world, if you need a radiologist or endocrinologist or even a psychiatrist, you’re not going to see them. In the U.S., we have a problem with access to specialists.”

John Snow Labs’ Spark NLP library excels at finding patterns and correlations buried in medical records (val lawless/Shutterstock)

But that doesn’t mean we have cracked the code on medical AI. The deeper you get into it, the more problems you find.

As usual, some of the most challenging problems have to do with managing the data, not with performing the machine learning itself. In John Snow Labs’ case, most of the interesting data exists as free text in the notes that doctors and nurses enter into the EMR system. If an organization wants to train an oncology model, it would help to have fields for “small cell” and “non-small cell” cancer, as well as for “Stage 1,” “Stage 2,” and so on. But that’s not the case, so Spark NLP typically must infer from the notes what type of cancer the patient has and what stage it is in, Talby says.
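To make that inference step concrete, here is a deliberately simple Python sketch that pulls a histology label and a stage out of a free-text note using regular expressions. It is not how Spark NLP works: production clinical NLP tools rely on trained models rather than hand-written rules. The function names, labels, and patterns below are hypothetical and exist only for illustration.

```python
import re
from typing import Optional

# Hypothetical rule-based extractor for illustration only; production clinical
# NLP tools rely on trained models rather than hand-written patterns like these.

# "non-small cell" must be tested before "small cell", because the
# "small cell" pattern also matches inside "non-small cell".
HISTOLOGY_PATTERNS = [
    ("non-small cell", re.compile(r"\bnon[-\s]?small[-\s]cell\b|\bNSCLC\b", re.IGNORECASE)),
    ("small cell", re.compile(r"\bsmall[-\s]cell\b|\bSCLC\b", re.IGNORECASE)),
]

# Matches phrases such as "stage 2", "Stage IIIA", or "stage iv".
STAGE_PATTERN = re.compile(r"\bstage\s+(IV|III|II|I|[1-4])([ABC])?\b", re.IGNORECASE)
ROMAN_TO_INT = {"I": 1, "II": 2, "III": 3, "IV": 4}


def infer_histology(note: str) -> Optional[str]:
    """Return the first histology label whose pattern appears in the note, or None."""
    for label, pattern in HISTOLOGY_PATTERNS:
        if pattern.search(note):
            return label
    return None


def infer_stage(note: str) -> Optional[int]:
    """Return the first stage mentioned in the note as an integer 1-4, or None.

    Sub-stage letters (the "A" in "IIIA") are matched but discarded.
    """
    match = STAGE_PATTERN.search(note)
    if match is None:
        return None
    token = match.group(1).upper()
    return int(token) if token.isdigit() else ROMAN_TO_INT[token]


if __name__ == "__main__":
    note = "72M with NSCLC, stage IIIA, started on chemo."
    print(infer_histology(note), infer_stage(note))
```

On the sample note, the sketch reports `non-small cell` and stage `3`. Rules like these break quickly on real clinical text: a note reading “no evidence of small cell component” would still be tagged as small cell, which is one reason this kind of extraction is usually handed to trained models.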

In some cases, healthcare organizations do take the time to collect and structure the type of detailed data that could be useful for a machine learning model. There is a national registry of such data for cardiology, in which nurses painstakingly fill out forms detailing much of the data around heart problems.

“The problem is that it’s an extremely expensive and extremely slow way to do things, which is why you only do it for things like cardiology, where either the government pays you to do it, or there’s enough money in the patients who want it,” Talby says.

Even if the AI generates great recommendations, it will never be perfect, because healthcare is, to some extent, open-ended, and there are not always “correct answers,” Talby says.

“That’s just the way it is,” he says. “Especially with complex disease like cancer, you should go and get a second opinion. People will treat you differently because there are very large differences in what will happen to you, in your life, and your outcome.”

And even if you did manage to create a “perfect AI,” there’s no guarantee that anybody would use it. Doctors and nurses already suffer from information overload, and the last thing they need is to be forced to check another screen before treating a patient.

To top it off, once the AI starts being used, it changes the conditions under which the model was trained, invalidating its starting point. “In a sense, the system breaks itself, because a month later, the highest risk people will be another group, because now you’re treating patients differently,” Talby says.
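Talby’s point about the system “breaking itself” can be illustrated with a toy simulation. The Python sketch below uses made-up risk scores for five hypothetical patients and assumes that treatment cuts a patient’s risk by a fixed fraction; none of the numbers come from a real model or dataset. A risk model flags the two highest-risk patients, clinicians treat them, and the next ranking no longer matches the one the model was built to predict.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical toy numbers; not drawn from any real model or dataset.


@dataclass
class Patient:
    name: str
    baseline_risk: float  # assumed chance of an adverse outcome without intervention
    treated: bool = False

    def observed_risk(self, treatment_effect: float) -> float:
        # Assumption: the intervention cuts risk by a fixed fraction.
        if self.treated:
            return self.baseline_risk * (1 - treatment_effect)
        return self.baseline_risk


def top_k(patients: List[Patient], k: int, treatment_effect: float) -> List[str]:
    """Names of the k patients with the highest current risk."""
    ranked = sorted(patients, key=lambda p: p.observed_risk(treatment_effect), reverse=True)
    return [p.name for p in ranked[:k]]


def simulate(treatment_effect: float = 0.6, k: int = 2) -> Tuple[List[str], List[str]]:
    patients = [
        Patient("A", 0.50),
        Patient("B", 0.45),
        Patient("C", 0.30),
        Patient("D", 0.25),
        Patient("E", 0.10),
    ]
    # Month 0: the model, trained on pre-deployment data, flags the highest-risk patients.
    flagged = top_k(patients, k, treatment_effect)
    # Clinicians act on the alerts and treat the flagged patients.
    for p in patients:
        if p.name in flagged:
            p.treated = True
    # Month 1: risk now reflects the intervention, so the ranking shifts.
    after = top_k(patients, k, treatment_effect)
    return flagged, after


if __name__ == "__main__":
    print(simulate())
```

With the default settings, `simulate()` returns `(['A', 'B'], ['C', 'D'])`: the patients the model originally flagged drop out of the top of the list precisely because its alerts were acted on, so any data the model is later retrained on reflects its own influence. Setting `treatment_effect=0.0` leaves the ranking unchanged, which is the world the model was trained in.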

Despite all these challenges, progress is being made, slowly but surely. Just as it took a century to bring automobiles to the level of safety we enjoy today, it will take a while to work out the challenges of implementing AI in healthcare.

“It’s going to take a long time,” Talby says. “It’s slow. It’s hard. It’s complex. But the nice thing about healthcare is you actually save lives once in a while.”
