AWS Bolsters SageMaker with Data Prep, a Feature Store, and Pipelines

Organizations that are using Amazon SageMaker to build machine learning models got a few new features to play with Tuesday, including options for data preparation, building ML pipelines, and a feature store.

One of the most requested features from SageMaker customers is the ability to perform data preparation in the SageMaker Studio IDE, said AWS CEO Andy Jassy during his keynote at AWS re:Invent earlier today.

“The topic that seems to come up first and foremast almost every time is how can we make data preparation for machine learning easier,” Jassy said. “Data preparation is hard.”

Data scientists routines spend 70% or more of their time cleaning, preparing, and wrangling their data into a state where it’s suitable to train machine learning algorithms against the data. “It’s just a lot of work, and people say there must be an easier way,” Jassy said.

AWS hopes that its new offering, Amazon SageMaker Data Wrangler, is that better way. According to Jassy, the new offering uses machine learning to recognize the type of data that a user has, and recommends one (or more) of more than 300 pre-built conversations or transformations to apply to the data.

“You can preview very easily these transformation in SageMaker Studio, and then, if you like what you see, you simply apply those transformations to the whole dataset and Data Wrangler manages all the infrastructure, all that work under the covers,” Jassy said. “It’s a total gamechanger for the time it take to do data prep for machine learning.”

The approach is not all that different than software companies like Trifacta are taking to automate data transformation for machine learning and help to alleviate data scientists of the burden of data preparation. (In fact, Trifacta even uses the name “Wrangler” for the free version of its tool.)

AWS didn’t stop there. The company is taking the next step in automating the day-to-day workflow of data scientists and rolling out a place to store machine learning features that are generated by Data Wrangler.

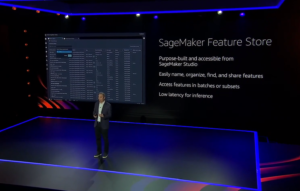

SageMaker Feature Store provides a place for data scientists (or other SageMaker users) to name, organize, find, and share the features that are used in machine learning models. The software does the difficult bit of keeping track of all the ways in which different features are used by different groups in different machine learning models.

The problem, Jassy said, is that features are hardly ever mapped to just one model. In fact, once a data scientist or machine learning engineer has done the work to construct a machine learning feature, it’s often consumed by multiple machine learning models.

“Sometimes you’ve got subsets in that set of features that want to be their own set of features,” he said. “And then you have multiple people that want to access those features and a different set with multiple models. And pretty quickly, it becomes really complicated and hard to keep track of it.”

Running next to SageMaker Studio, the new Feature Store also helps to manage features used in inference, which is a different use case from training machine learning models.

“When you’re training a model, you’ll use all your data in a particular feature to be able to get the best possible model to make predictions,” Jassy said. “But when you’re making predictions, you’ll often just take the last five data points in that particular feature. But they have to be stored the same way and they’ve got to be accessible.”

Finishing that last mile in the machine learning process can be tough. There are a lot of steps that have to happen sequentially, or sometimes they need to happen in parallel, Jassy said. In the wider IT world, developers have adopted continue integration/continuous deployment (CI/CD) pipelines to cope with the increased complexity and pace of development.

“But in machine learning, there is no CI/CD,” Jassy said. “None of them exist pervasively. People have tried to build their own. They’re trying to do it homegrown. It hasn’t been that scalable. It hasn’t worked the way they wanted to.”

That led Jassy into the third major upgrade for SageMaker: a new CI/CD service called Pipelines.

“With Pipelines, you can quickly create machine learning workflows with our Pipelines SDK. And then you can automate a bunch of these steps, from the number of the things you have to do from data preparation in Data Wrangler to moving the data from Data Wrangler to the Feature Store and some of the activities you want to take when it’s in the Feature Store, to training, to tuning, to hosting your models.”

All of those processes can be scripted in Pipelines, and then Pipelines will make sure the processes are executed in an automated manner, while adhering to all the necessary dependencies between each of the steps, and maintaining an audit trail of all the changes that have been made.

AWS has provided pre-built templates that customers can use as-is to roll out ready-made ML pipelines, or they can tweak the templates as needed. They can also build their own pipelines from scratch.

These new offerings bolster the 50 new features that AWS added to SageMaker in 2019. According to Jassy, there is more innovation on the way for the “tens of thousands” of organizations that are using SageMaker.

“SageMaker has completely changed the game for everyday development of data science,” he said. “People have flocked to SageMaker not only because there’s nothing else like it, but also because of the relentless iteration, the innovation that you continue to see us apply into SageMaker.”

Related Items:

AWS Unveils Batch of Analytics, Database Tools