Google Launches TensorFlow Quantum

The worlds of quantum computing and machine learning are coming together with TensorFlow Quantum (TFQ), a new library unveiled today by Google.

Google has been one of the leaders in the emerging field of quantum computing, where computers manipulate qubits, which can exist in a superposition of 0 and 1, rather than the binary bits that conventional computers use. The Mountain View, California tech giant declared “quantum supremacy” last year as a result of its progress in the field.

But for all the advances that have been made, Google found that “there’s been a lack of research tools to discover useful quantum ML models that can process quantum data and execute on quantum computers available today,” the company says.

The launch of TFQ, an open source library designed for the rapid prototyping of quantum ML models, is intended to address that lack of tools. According to Google, the library integrates TensorFlow with Cirq, an open source framework unveiled in 2018 for working with Noisy Intermediate Scale Quantum (NISQ) computers, which are defined as devices with 50 to 100 qubits and high fidelity quantum gates.

Two Google Research leaders, Alan Ho and Masoud Mohseni, discuss how TFQ fits into quantum computing in a Google blog post published today. They state that a quantum ML model, such as the type that TFQ is designed to develop, has the “ability to represent and generalize data with a quantum mechanical origin.”

Examples of quantum data include data generated (or simulated) from quantum processors, quantum sensors, or quantum networks. That could include quantum matter, quantum control, quantum communication networks, or quantum metrology.

“A technical, but key, insight is that quantum data generated by NISQ processors are noisy and are typically entangled just before the measurement occurs,” Ho and Mohseni write. “However, applying quantum machine learning to noisy entangled quantum data can maximize extraction of useful classical information. Inspired by these techniques, the TFQ library provides primitives for the development of models that disentangle and generalize correlations in quantum data, opening up opportunities to improve existing quantum algorithms or discover new quantum algorithms.”

TFQ contains the basic structures, like qubits, gates, circuits, and measurement operators, that are required for specifying quantum computation, the pair write. “User-specified quantum computations can then be executed in simulation or on real hardware,” they say.
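To make those building blocks concrete, here is a minimal sketch in plain Python of what qubits, gates, a circuit, and a measurement operator amount to in a simulator. It does not use the TFQ or Cirq APIs; every function name below is hypothetical and chosen only for illustration. The circuit prepares an entangled two-qubit (Bell) state and then evaluates Pauli-Z measurement operators on it.

```python
import math

# A register of n qubits is stored as a list of 2**n complex amplitudes.
# Convention assumed here: qubit 0 is the most significant bit of the index.

def zero_state(n_qubits):
    """Return the all-zeros basis state |00...0>."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    return state

def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate (nested lists) to the qubit at position `target`."""
    new_state = list(state)
    shift = n_qubits - 1 - target
    for index in range(len(state)):
        if (index >> shift) & 1 == 0:
            partner = index | (1 << shift)
            a0, a1 = state[index], state[partner]
            new_state[index] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[partner] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state

def apply_cnot(state, control, target, n_qubits):
    """Flip `target` on every basis state where `control` is 1."""
    new_state = list(state)
    c_shift = n_qubits - 1 - control
    t_shift = n_qubits - 1 - target
    for index in range(len(state)):
        if (index >> c_shift) & 1 == 1 and (index >> t_shift) & 1 == 0:
            partner = index | (1 << t_shift)
            new_state[index], new_state[partner] = state[partner], state[index]
    return new_state

def expectation_z(state, qubits, n_qubits):
    """Expectation value of the product of Pauli-Z operators on `qubits`."""
    total = 0.0
    for index, amplitude in enumerate(state):
        sign = 1
        for q in qubits:
            if (index >> (n_qubits - 1 - q)) & 1:
                sign = -sign
        total += sign * abs(amplitude) ** 2
    return total

INV_SQRT2 = 1 / math.sqrt(2)
HADAMARD = [[INV_SQRT2, INV_SQRT2], [INV_SQRT2, -INV_SQRT2]]

def bell_circuit():
    """Circuit: Hadamard on qubit 0, then CNOT from qubit 0 to qubit 1."""
    state = zero_state(2)
    state = apply_single_qubit_gate(state, HADAMARD, 0, 2)
    return apply_cnot(state, 0, 1, 2)

bell = bell_circuit()
zz = expectation_z(bell, [0, 1], 2)  # joint measurement of both qubits
z0 = expectation_z(bell, [0], 2)     # measurement of qubit 0 alone
```

In this sketch the joint operator on both qubits is perfectly correlated while a single qubit on its own looks random, which is the kind of entangled structure the researchers describe. A real TFQ program would express the same circuit with Cirq objects rather than hand-written lists.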

The researchers say they’ve used TFQ for a variety of quantum ML experiments, including hybrid quantum-classical convolutional neural networks, machine learning for quantum control, layer-wise learning for quantum neural networks, quantum dynamics learning, and generative modeling of mixed quantum states.

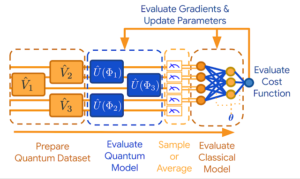

Just as data scientists today must follow a series of steps to train their machine learning models, quantum researchers must follow similar steps. The first step involves preparing the quantum data, which are typically loaded as tensors. Next, the researcher uses Cirq to select a quantum neural network model and executes the model to extract information hidden in the entangled state.

After sampling or averaging the data from several of the runs, the researcher enters the “post-processing” stage. Once the researcher has “classical” data to work with, they run it through a “classical” neural network model. They may then evaluate a cost function to determine how accurate the model is, and update the parameters to dial in the model.
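The loop described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not TFQ code: the “quantum model” is a single simulated qubit rotated by one trainable angle, the “classical model” is a fixed one-line function standing in for a neural network, and all names (`sample_z`, `classical_postprocess`, `train`) are hypothetical. The gradient uses the parameter-shift rule, a standard technique for this kind of rotation gate that the article itself does not mention.

```python
import math
import random

rng = random.Random(0)  # fixed seed so repeated runs behave the same

def sample_z(theta, shots=1000):
    """Simulate `shots` runs of an RY(theta) rotation on |0> and average Z.

    Each run yields +1 with probability cos^2(theta / 2), otherwise -1,
    so the average approaches cos(theta) as the number of shots grows.
    """
    p_plus = math.cos(theta / 2) ** 2
    outcomes = [1 if rng.random() < p_plus else -1 for _ in range(shots)]
    return sum(outcomes) / shots

def classical_postprocess(z_value):
    """Stand-in for the classical model: map <Z> to the probability of reading 1."""
    return 0.5 * (1 - z_value)

def cost(prediction, target):
    """Squared error between the post-processed output and the target."""
    return (prediction - target) ** 2

def train(target, theta=0.1, learning_rate=0.5, steps=100):
    """Hybrid loop: run circuit, average, post-process, score, update."""
    for _ in range(steps):
        prediction = classical_postprocess(sample_z(theta))
        # Parameter-shift rule: d<Z>/dtheta from two shifted circuit runs.
        dz_dtheta = (sample_z(theta + math.pi / 2)
                     - sample_z(theta - math.pi / 2)) / 2
        # Chain rule through the classical stage, where dp/dz = -0.5.
        grad = 2 * (prediction - target) * (-0.5) * dz_dtheta
        theta -= learning_rate * grad
    return theta

trained_theta = train(target=0.5)
final_probability = classical_postprocess(math.cos(trained_theta))
final_cost = cost(final_probability, 0.5)
```

Here the target asks the qubit to read 1 half of the time, so training should push the rotation angle toward a quarter turn. Because each estimate comes from a finite number of simulated shots, the result is noisy and only approximately reaches the target.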

TFQ is primarily designed today to execute quantum circuits on classical quantum circuit simulators, the researchers write. “In the future, TFQ will be able to execute quantum circuits on actual quantum processors that are supported by Cirq, including Google’s own processor Sycamore,” they say.

To read Google’s blog post on TFQ, go to https://ai.googleblog.com/2020/03/announcing-tensorflow-quantum-open.html.
