Google Launches Transfer Service for On-Prem Data

(emojoez/Shutterstock)

Google today unveiled a new service for uploading data from customers’ on-premises data centers into the Google Cloud. The new offering is aimed at removing the technical complexity inherent in moving petabytes’ worth of data to the cloud.

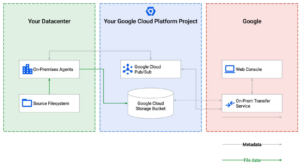

Transfer Service for on-premises data is a free Google Cloud service that’s intended to streamline the process of uploading data into Google Cloud Storage buckets. The service, which is currently in beta, uses one or more Docker-based software agents that are installed on the customer’s computer that houses the data. The customer selects which files to upload from an NFS file system, and initiates the transfer from a Web-based console.

Google says the service provides an efficient way to move billions of files occupying petabytes’ worth of storage. The service can be used with relatively slow Internet connections, and users can also scale it up to use tens of Gbps of bandwidth and run multiple agents to handle the transfer. Files weighing up to 100TB can be uploaded with a single transfer, Google says.
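To put those bandwidth figures in perspective, here is a rough back-of-the-envelope sketch of how long a transfer would take at a given link speed. It is not part of Google’s service; the function name `transfer_time_days` and the `efficiency` parameter are illustrative assumptions, and the math assumes decimal units (1PB = 10^15 bytes) and a link that stays fully available for the whole transfer.

```python
# Rough estimate only: ignores protocol overhead, retries, and agent limits.
# All names here are hypothetical and not part of any Google API.

PB = 10**15  # one petabyte in bytes (decimal units, an assumption)


def transfer_time_days(data_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Return the days needed to move data_bytes over a link of link_gbps.

    efficiency is the fraction of the link actually usable (0 < efficiency <= 1).
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    for gbps in (1, 10, 40):
        days = transfer_time_days(PB, gbps)
        print(f"1PB at {gbps} Gbps: about {days:.1f} days")
```

Under these assumptions, a petabyte takes a little over nine days at a sustained 10 Gbps and roughly three months at 1 Gbps, which is why the ability to scale up bandwidth and agents matters at this size.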

Customers can track the progress of the data transfers from their Web console. They also have access to logs that provide a record of the transfers. As the files are uploaded, Google automatically checks the integrity of the files, and alerts the customer if there is a problem, the company says. In addition to transferring the contents of on-prem files into Google Cloud Storage buckets, the service also uses Pub/Sub to move and track the metadata associated with those files.

Transfer Service for on-premises data (which appears to be the proper name of the service, even though it lacks capital letters) is a managed application, which means the customer isn’t responsible for maintaining the application or messing with any code, the company says. That’s a bonus for customers, according to Enterprise Strategy Group Senior Analyst Scott Sinclair.

Google will help you move petabytes of data into its cloud with the new Transfer Service for on-premises data (beta) (Image courtesy Google)

“I see enterprises default to making their own custom solutions, which is a slippery slope as they can’t anticipate the costs and long-term resourcing,” Sinclair says in a Google blog post today. “With Transfer Service for on-premises data (beta), enterprises can optimize for TCO and reduce the friction that often comes with data transfers. This solution is a great fit for enterprises moving data for business-critical use cases like archive and disaster recovery, lift and shift, and analytics and machine learning.”

Transfer Service for on-premises data is a new solution. It augments an existing Google offering, called Google Transfer Service, that is intended for moving data from other cloud repositories into Google Cloud.

Google also offers a Transfer Appliance that customers can use to move large amounts of data from on-prem sources into the Google Cloud. The on-prem appliance is a rack-mounted storage server that offers either 100TB or 480TB of raw capacity.

However, Google now recommends using Transfer Service for on-premises data when transferring more than 1TB of data. For transfers of less than 1TB, it recommends using the gsutil utility.
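That sizing guidance boils down to a simple threshold rule, sketched below. The function name `recommended_tool` is hypothetical, the threshold assumes decimal units (1TB = 10^12 bytes), and the article does not say what Google recommends for a transfer of exactly 1TB, so routing that case to gsutil is an assumption.

```python
# Illustrative sketch of the 1TB rule of thumb described above.
# Names and the handling of exactly 1TB are assumptions, not Google guidance.

TB = 10**12  # one terabyte in bytes (decimal units, an assumption)


def recommended_tool(size_bytes: int) -> str:
    """Return the tool suggested for an on-prem transfer of size_bytes."""
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes > TB:
        return "Transfer Service for on-premises data"
    # Exactly 1TB is not covered by the stated guidance; gsutil is assumed here.
    return "gsutil"


if __name__ == "__main__":
    for size in (200 * 10**9, 5 * TB):
        print(f"{size / TB:g}TB -> {recommended_tool(size)}")
```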

For more information, see today’s Google Cloud blog post or Google’s documentation for the new service.

Related Items:

Google Cloud Unveils Slew of New Data Management and Analytics Services

Azure Data Share Seeks to Streamline Big Data Sharing

Exabytes Hit the Road with AWS Snowmobile