AI Can See. Can We Teach It To Feel?

(Nito/iStock)

We’ve made great strides in the field of computer vision, to the point where self-driving cars equipped with artificial intelligence (AI) can effectively “see” their surroundings. But can we teach AI to “feel” something about what it sees? The folks at Getty Images think we can.

At first blush, the idea that AI could “feel” something would seem to be pretty far-fetched. Feelings in general are closely intertwined with our human identities. How any one person feels about something is bound to be different from how another feels. Feelings are, by definition, subjective. In fact, it’s tough to find a more subjective topic than “feelings.” So how does that mesh with the objective functionality of computers?

The solution is relatively straightforward, according to Andrea Gagliano, a senior data scientist with Getty Images.

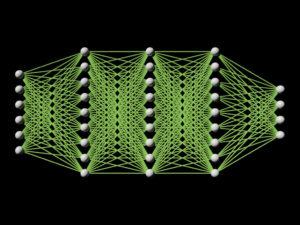

Just as a computer vision program tackles the identification problem by breaking an image down into constituent features (represented as numerical vectors) and comparing them to known entities, Getty is training deep neural networks to look for certain elements in the images themselves that offer clues about the emotions humans will attach to them.

The trick, Gagliano says, is encoding those various human emotions into vectors that are associated with the images. For each emotion or feeling that Getty’s data scientists select, such as “authentic” or “in the moment,” the company will assemble a collection of images that in some way represent that feeling or emotion. Each image has anywhere from 100 to 1,000 features or variables that go into building that vector, which can then be used to make inferences about new images.
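To make the idea concrete, here is a minimal Python sketch of one common way such a “feeling vector” could work. It assumes each image has already been reduced to a numeric feature vector (the article doesn’t say how Getty computes or combines them), averages the vectors of a curated example set into a centroid, and scores new images by cosine similarity to that centroid. The function names and the four-dimensional toy vectors are hypothetical; real vectors would have 100 to 1,000 features.

```python
import math


def mean_vector(vectors):
    """Average equal-length feature vectors into one centroid ("feeling vector")."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings of images curated to represent "authentic".
authentic_examples = [
    [0.9, 0.1, 0.8, 0.2],
    [0.8, 0.2, 0.9, 0.1],
    [1.0, 0.0, 0.7, 0.3],
]
authentic_vector = mean_vector(authentic_examples)

candidate_a = [0.85, 0.15, 0.8, 0.2]  # resembles the curated examples
candidate_b = [0.1, 0.9, 0.2, 0.8]    # points in a different direction

print(round(cosine_similarity(authentic_vector, candidate_a), 3))
print(round(cosine_similarity(authentic_vector, candidate_b), 3))
```

A centroid is only the simplest choice here; a trained classifier per feeling would be a more typical production design.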

Once built, the models can be used to generate better keywords that will help users find the images, Gagliano says.

“From a computer vision perspective, we’re building out more rich metadata, both as a mathematical representation of different parts of our images, whether that is semantic understanding of the image or things around the people,” she says.

In addition to generating better keywords, the vectors can be used to create different groups of images that correspond with certain feelings or moods. For example, the company is looking to use high-dimensional features to represent feelings like “authentic” or “in the moment,” Gagliano says.
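If each feeling is represented by a vector, keyword generation and mood grouping could both be driven by similarity scores. The sketch below is hypothetical and not Getty’s code: it tags each image with every feeling whose vector it matches above an assumed similarity threshold of 0.9, then inverts those tags into per-feeling groups. The feeling vectors, image IDs, and threshold are all illustrative.

```python
import math
from collections import defaultdict


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical feeling vectors, e.g. centroids of curated example sets.
FEELING_VECTORS = {
    "authentic": [0.9, 0.1, 0.8, 0.2],
    "in the moment": [0.2, 0.9, 0.3, 0.7],
}


def tag_images(image_vectors, feeling_vectors, threshold=0.9):
    """Return (feeling keywords per image, image IDs per feeling group)."""
    keywords = {}
    groups = defaultdict(list)
    for image_id, vec in image_vectors.items():
        tags = [name for name, fv in feeling_vectors.items()
                if cosine_similarity(vec, fv) >= threshold]
        keywords[image_id] = tags
        for tag in tags:
            groups[tag].append(image_id)
    return keywords, dict(groups)


images = {
    "img-001": [0.85, 0.15, 0.8, 0.2],
    "img-002": [0.1, 0.95, 0.25, 0.75],
    "img-003": [0.5, 0.5, 0.5, 0.5],  # ambiguous: matches neither closely enough
}
keywords, groups = tag_images(images, FEELING_VECTORS)
print(keywords)
print(groups)
```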

“We’re doing a lot in the areas of customer [requests] for more ‘authentic’ and ‘less stocky’ imagery,” she says. “So we’re deconstructing, what does that really mean? Does that mean that people are not smiling, or they’re not looking at the camera? There’s maybe a warm human relationship, or maybe they’re in the moment, they’re talking, or their hands are up. So what are the pixel elements of ‘authentic imagery’ that we can build computer vision around to supplement the language data around our imagery to be able to serve up those images better in search?”

Whichever way users interact with the images — through traditional keywords or through the high-dimensional features behind the scenes — the goal is giving Getty Images users new ways of interacting with the 300 million images in the company’s catalog, Gagliano says.

“Our search historically has been very focused on language and labels,” she says. “Oftentimes customers come to the site and they don’t exactly know how to describe what they’re looking for. Especially on the creative side, they’re finding they don’t know how to write the query that they want. So there’s a disconnect between what our customers are looking for and the type of data that we have on the imagery.”

Things get a little fuzzy when talking about human emotion and feelings, which isn’t surprising. Choosing the right words to describe the attributes of “authenticity” is a subjective exercise that is hit and miss. But Getty has done its best to battle through the fuzziness and get to something a little more concrete.

“If you really drill down, there’s about 15 different dimensions by which you can think of an image as being authentic,” Gagliano says. “So doing that drilling down has really helped refine how we think about the machine learning model that we build as a result. In that sense, I think it’s harder to understand what the customer wants. Then it’s a lot easier to build a machine learning model.”

Getty Images has been working on this effort to create “AI that feels” for about two years, according to Gagliano, who leads it. The company has already rolled out some of these features to select customers and is working on making them more broadly available.

The effort consumes quite a bit of human and computer time. On the human side, Gagliano and her colleagues must define the feelings that they’re going to target. Then they must assemble imagery that represents that feeling, ensuring that it’s properly labeled. The data scientists must define the individual, pixel-level attributes that correspond with that emotion. And then of course they must write the machine learning code, using Python, TensorFlow, and a version of the ResNet convolutional neural network algorithm, to identify the attributes and link them to the human emotion.
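The article says Getty builds these models with Python, TensorFlow, and a variant of ResNet. Training a ResNet is beyond a short example, so the sketch below swaps in a much simpler technique to illustrate only the final linking step: a logistic regression, written in plain Python, that learns how much each pixel-level attribute contributes to an “authentic” label. The attribute names echo cues Gagliano mentions, but the scores, labels, learning rate, and epoch count are all made up for illustration; in a real pipeline the attribute scores might come from CNN-based detectors.

```python
import math

# Hypothetical attribute scores in [0, 1] for each image, in this order.
ATTRIBUTES = ["not_smiling", "not_looking_at_camera", "natural_light"]

# Toy labeled set: (attribute scores, 1 if editors judged the image "authentic").
TRAINING_DATA = [
    ([0.9, 0.8, 0.9], 1),
    ([0.7, 0.9, 0.8], 1),
    ([0.8, 0.6, 0.7], 1),
    ([0.1, 0.2, 0.3], 0),
    ([0.2, 0.1, 0.2], 0),
    ([0.3, 0.3, 0.1], 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that an image with attribute scores x is 'authentic'."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(data, lr=0.5, epochs=2000):
    """Fit weights with plain batch gradient descent on log loss."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in data:
            error = predict(weights, bias, x) - y  # gradient of log loss w.r.t. z
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


weights, bias = train_logistic_regression(TRAINING_DATA)
for name, w in zip(ATTRIBUTES, weights):
    print(f"{name}: {w:+.2f}")
print(round(predict(weights, bias, [0.85, 0.7, 0.8]), 2))
```

The learned weights give a rough, inspectable answer to “which attributes matter for this feeling,” which is one reason simple linear heads are often placed on top of deep features.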

“Oftentimes those will be some sort of subjective things. So they’re looking at natural light. What is natural light? Is it indoors? Outdoors? When there’s not a flare? Is it when there is no effect that has been applied?” Gagliano says.

“Creative researchers have always had their lens in which they look at their worlds from a photograph perspective,” she continues. “But then me, as a data scientist, I know computer vision will see pixels in certain ways and be able to differentiate an image in a certain way. And so it’s a constant iterative process of getting those training sets set up right, because we are working in a space with lack of clear language and clear buckets by which to separate those types of images.”

If “authentic” or “natural light” or “less stocky” sound hard to define, then how about concepts like beauty? The discussion could quickly turn into philosophical arguments on our perception of the world. Needless to say, Getty Images does its best to navigate these choppy waters.

“We’ve had customers say that authentic images are people who are not overly beautiful,” Gagliano says. “Or it doesn’t look like they have too much makeup on. Those are really hard computer vision problems because it’s a very subjective task [to define] beauty, or basically impossible to figure out. It changes so drastically community to community, culture to culture, and even over time.”

Getty tries to get around these tough questions by segmenting its user population, maintaining different models depending on where the user is located around the world.
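One simple way to structure region-specific models is a lookup with a fallback, sketched below. The region keys, the linear scoring functions, and their weights are hypothetical; the article doesn’t describe how Getty partitions users or what its regional models look like.

```python
from typing import Callable, Dict, List

# A scorer maps an image's feature vector to a score for one concept.
Scorer = Callable[[List[float]], float]


def make_linear_scorer(weights: List[float]) -> Scorer:
    """Build a toy scorer: a weighted sum of an image's feature values."""
    def score(features: List[float]) -> float:
        return sum(w * f for w, f in zip(weights, features))
    return score


# Hypothetical regional models for the same concept (say, "authentic");
# the weights differ because the concept is judged differently by region.
REGIONAL_MODELS: Dict[str, Scorer] = {
    "global": make_linear_scorer([0.5, 0.3, 0.2]),
    "emea": make_linear_scorer([0.4, 0.4, 0.2]),
    "apac": make_linear_scorer([0.6, 0.2, 0.2]),
}


def select_model(region: str) -> Scorer:
    """Route to the user's regional model, falling back to the global one."""
    return REGIONAL_MODELS.get(region.lower(), REGIONAL_MODELS["global"])


features = [1.0, 0.0, 0.5]
print(select_model("APAC")(features))
print(select_model("unknown-region")(features))
```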

It also takes quite a bit of processing power to train the models, and then to score the entire corpus of 300 million images. With 100 to 1,000 “human feeling” attributes for each image, the processing time adds up. Getty Images uses cloud and on-prem systems that include GPUs, as well as CPUs.
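A back-of-the-envelope calculation gives a sense of the scale. The sketch below assumes each feature is stored as a 4-byte float32 and, for the timing estimate, a purely hypothetical throughput of 1,000 images per second; neither figure comes from Getty.

```python
CATALOG_SIZE = 300_000_000  # images in the catalog, per the article


def feature_storage_bytes(n_images, n_features, bytes_per_feature=4):
    """Raw storage for one feature vector per image (default assumes float32)."""
    return n_images * n_features * bytes_per_feature


def scoring_hours(n_images, images_per_second):
    """Wall-clock hours for one full scoring pass at a fixed throughput."""
    return n_images / images_per_second / 3600


for n_features in (100, 1_000):
    gb = feature_storage_bytes(CATALOG_SIZE, n_features) / 1e9
    print(f"{n_features:>5} features per image: {gb:,.0f} GB")

# Hypothetical throughput; real numbers depend on model size and hardware.
print(f"Full pass at 1,000 images/s: {scoring_hours(CATALOG_SIZE, 1_000):.1f} hours")
```

Under those assumptions, the vectors alone take roughly 120 GB at 100 features per image and 1.2 TB at 1,000, and a single pass over the catalog takes about 83 hours, before any retraining or rescoring.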

But currently, the biggest bottleneck is human. Getty doesn’t rely on machine learning entirely, and still leans heavily on editors to make key decisions when it comes to identifying aspects of images tied to human emotion. Gagliano’s team is looking for ways to use data science to speed up the human editors’ workflow.
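The article doesn’t say which techniques Gagliano’s team is exploring. One common approach to speeding up human review, known as uncertainty sampling (a form of active learning), is to send editors the images the model is least sure about first. A minimal sketch, assuming each image already has a model score between 0 and 1 and that a score near 0.5 means “uncertain”; the image IDs and scores are made up.

```python
import heapq


def review_queue(scores, budget):
    """Pick the `budget` image IDs whose model score is closest to 0.5."""
    return heapq.nsmallest(budget, scores, key=lambda image_id: abs(scores[image_id] - 0.5))


# Hypothetical model scores for "authentic".
scores = {
    "img-101": 0.97,  # confident yes
    "img-102": 0.52,  # uncertain
    "img-103": 0.08,  # confident no
    "img-104": 0.45,  # uncertain
    "img-105": 0.71,
}
print(review_queue(scores, budget=2))
```

Here the editors would see img-102 and img-104 first, while the confident calls could be accepted or spot-checked; the editors’ decisions then become new training labels.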

Deep learning is making machines better at identifying human emotion. But in the end, it’s still largely a human endeavor, which perhaps will never change.
