Inside OmniSci’s Plans for Data Analytics Convergence

OmniSci CEO Todd Mostak

You may know OmniSci as the provider of a fast SQL-based database that runs on GPUs. But the company formerly known as MapD is moving beyond its GPU roots and is building a data platform that runs on CPUs and does machine learning too–a vision that it shared at its inaugural Converge conference in Silicon Valley this week.

“The original business of OmniSci was not just to build a visual analytics platform or visual database,” OmniSci co-founder and CEO Todd Mostak said during yesterday’s keynote address at the Computer History Museum in Mountain View, California, the site of the two-day Convergence conference. “It was to build a real-time analytics experience.”

Some people may differentiate between analytics and data science. “But I think they’re becoming the same thing,” the graduate of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) said. “Analytics and data science are converging. In fact, that’s where we came up with the name of the conference. We fundamentally believe that people just want understanding, they want insight, right?”

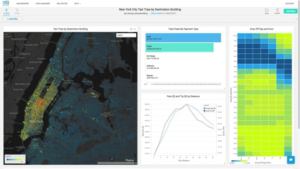

Sometimes, that means just running SQL queries. Sometimes it means powering geo-temporal workloads, a favorite of OmniSci’s early telecom customers, such as Verizon Wireless. Or sometimes it means rendering interactive visualizations against tens of billions of rows of data, which OmniSci does through Immerse, its proprietary GUI (the core database is open source).

During Converge, OmniSci was not shy about running live demos on billion-row samples in Immerse, its interactive GUI

And sometimes, it means something entirely different. “Many times it means harnessing…the incredible power of our hardware to actually run these algorithms that find the insight, the needles in the haystack, that even the best subject matter experts, the analysts and data scientists, couldn’t find on their own,” Mostak said.

“One of the things we’re excited about in our future path is combining these things,” said Mostak, a 2017 Datanami Person to Watch. “We think that they’re not three distinct things. We feel we’re in a little bit of a unique position to bring these things together complete at scale–to be able to take billions of rows that data scientists are interacting with it, finding the anomalies….And now they want to start training and building models.”

A Predictive Forecast

Those machine learning capabilities are on their way with OmniSci 5.0, which the company announced yesterday at the show. The new release should be available before the end of the month, Mostak said.

Machine learning presents a new twist on OmniSci’s core historical benefit, which is data exploration. While the GPU-powered, column-oriented database at the heart of OmniSci allows the product to deliver very fast response times for interactive SQL-oriented workloads, the inclusion of machine learning algorithms will allow customers to do other tasks, such as making predictions or forecasts based on data housed in the OmniSci database.

During this morning’s Converge demo, billions of rows of bus route data from the San Francisco Bay Area was loaded into OmniSci DB. Rachel Wang, the company’s director of product management, showed how a user can slice and side the data in Immerse, and how new features in 5.0, such as cohort analysis and filter sets, can give the user new insights hidden in the data.

The inclusion of machine learning features (which are just starting to be delivered in 5.0) would take that experience to the next level by allowing the user to forecast what those bus routes would look like in the future.

An OmniSci customer quote, as presented by Venkat Krishnamurthy, OmniSci’s vice president of product management

“With machine learning, you’ll be able to do things you can’t do with SQL, like forecasts,” said Venkat Krishnamurthy, OmniSci’s vice president of product management during the keynote this morning.

Today, OmniSci’s database is deployed atop GPU-enabled computers, whether it’s a laptop or (even better) a clustered group of servers. Having all that processing power available for machine learning algorithms is an enticing option, and something that will be brought forth with version 5.0.

With version 5.0, OmniSci has added support for user defined functions at the row and column level, which will let users add machine learning functions to the query engine. Work that OmniSci is doing with Travis Oliphant’s new startup Quansight will enable Python-based libraries to be supported, and for JupyterLab to be integrated with Immerse. Oliphant, who is also a Datanami 2017 Person to Watch, also delivered a keynote today.

Owing to its longstanding partnership with GPU leader NVidia, OmniSci will support the RAPIDS library of machine learning algorithms with its database. “The fundamental framework will be coming out in the next release,” Mostak said. “We’re doing a lot of work in tooling to provide basic algorithms.”

Need for Speed

As OmniSci widens its use cases from high-speed interactive analytics into data science, it’s also widening the types of hardware that it can run atop, and widening the sources of data on which it can run.

OmniSci is no longer limiting itself to running on GPUs. The company yesterday announced that, as part of its new partnership with Intel, that it can run atop CPUs as well as GPUs, which will give it more options for scaling the database up or down, depending on the workload.

As capable as GPUs are, it’s not always the best place to run OmniSci, Mostak said. “Customers can’t always get access to these things or they’re timed,” he said. “Or there are problems that don’t necessarily need the speed and power of GPUs.”

If the data is too small, it doesn’t necessarily need the power of a GPU, he said. Likewise, if the data exceeds the amount of RAM that a GPU can provide, then it makes sense to use a general CPU.

“I think these two things complement each other,” Mostak said. “It’s really a testament to our engineers’ dedication on performance optimization across the board. We really do believe in the fastest software for the fastest hardware, and that hardware can be context dependent.”

OmniSci invited Wei Li, Intel’s vice president and general manager of machine learning onto the stage to chat about the new support for Intel CPUs and the future of big data analytics.

With the sizes of today’s data sets, it would be shame to limit OmniSci’s capability to whatever data can fit into GPU database, Li said.

“If you have to analyze data from your disk, that’s just impossible to do, so you must be able to get all the data in memory before you can analyze,” Li said. And with OmniSci’s fast database software and Intel’s fast hardware and large memory capacities, “we can do some crazy things,” Li said.

As if support for machine learning and CPUs wasn’t enough, OmniSci has even more in version 5.0, including a new Data Fusion features and support for foreign data sources. The mechanism for supporting outside data is through the product’s support for Apache Arrow, the in-memory data format that spearheaded by Wes McKinney (a 2018 Datanami Person to Watch) to become a data standard that simplifies data flows involving mulitiple backends, including Hadoop, Spark, NoSQL, and SQL databases.

McKinney, who is also one of the founders of Dremio, also presented at OmniSci’s annual user conference, which attracted about 250 users, prospects, partners, and press.

Related Items:

MapD’s GPU-Powered Database Now Available in Google Cloud’s Marketplace

Why America’s Spy Agencies Are Investing In MapD

MIT Spinout Exploits GPU Memory for Vast Visualization