Making ML Explainable Again

(amasterphotographer/Shutterstock)

Machine learning may seem like a mysterious creation to the average consumer, but the truth is we’re surrounded by it every day. ML algorithms power search results, monitor medical data, and influence decisions about who gets into school, who gets hired, and even who goes to jail. Despite our proximity to machine learning algorithms, explaining how they work can be difficult, even for the experts who designed them.

In the early days of machine learning, algorithms were relatively straightforward, if not always as accurate as we’d like them to be. As research into machine learning progressed over the decades, the accuracy increased, and so did the complexity. Since the techniques were largely confined to academic research and some areas of industrial automation, it didn’t impact the average Joe very much.

But in the past decade, machine learning has exploded into the real world. Armed with huge amounts of consumer-generated data from the Web and mobile devices, organizations are flush with information describing where we go, what we do, and how we do it — both in the physical and digital worlds.

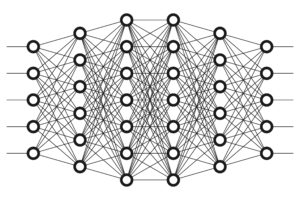

At the same time, the advent of deep learning is giving us unparalleled accuracy for some types of inference problems, such as identifying objects in images and understanding linguistic connections. But deep learning has also brought higher levels of complexity, and that – combined with growing concerns about the privacy and security of consumer data – is giving some practitioners pause as they roll out or expand the use of machine learning technology.

Modeled after the human brain, neural networks can be incredibly complex (all_is_magic/Shutterstock)

Organizations must carefully weigh the advantages and disadvantages of using “black box” deep learning approaches to make predictions on data, says Ryohei Fujimaki, Ph.D., who worked on more than 100 data science projects as a research fellow for Japanese computer giant NEC before NEC spun off Fujimaki and his team last year as an independent software vendor called dotData.

“It’s really up to the customer,” says Fujimaki, who is the CEO and founder of dotData, which is based in Cupertino, California. “There are still some areas that 1% or 2% make a huge difference in return. On the other hand, there are areas — and in particular I believe this is a majority of areas of enterprise data science – where transparency is more important, because at the end of the day, this has to be consumed by a business user and business user always required to understand what is happening behind the scenes.”

White Boxes

dotData develops a “white box” data science platform that it claims can automate a good chunk of the machine learning pipeline, from collecting the data and training models to selecting a model and putting it into production. The software, which runs on Hadoop and uses Spark, leverages both supervised and unsupervised machine learning algorithms.

Fujimaki says the dotData Platform’s big differentiator is the way it automates feature engineering. Fujimaki helped develop dotData’s proprietary algorithm, which automatically selects the data features to be used in downstream machine learning processes. The software also generates a plain-English description explaining why it picked those particular features.

“We are the only platform that can automate this end-to-end process on raw data, to prepare data for feature engineering in machine learning,” he tells Datanami. “Our features are very transparent and easy to understand by domain experts. This is the key of our automated feature engineering.”
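dotData’s feature-engineering algorithm is proprietary, so what follows is not its method. It is a minimal Python sketch, under assumed names and toy data, of the general idea: generate candidate aggregate features from a transaction table, attach a plain-English description to each, and keep the ones most correlated with the prediction target. The templates, the `select_features` function, and the Pearson-correlation ranking are all illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable


@dataclass
class CandidateFeature:
    description: str  # plain-English explanation shown to business users
    compute: Callable[[list[float]], float]  # aggregates one customer's amounts


# Hypothetical feature templates; a real system would search a much larger space.
TEMPLATES = [
    CandidateFeature("number of purchases", lambda xs: float(len(xs))),
    CandidateFeature("total amount spent", lambda xs: float(sum(xs))),
    CandidateFeature("largest single purchase", lambda xs: float(max(xs, default=0.0))),
]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; returns 0.0 when either series is constant."""
    mx, my = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return 0.0
    cov = mean((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / (sx * sy)


def select_features(transactions: dict[str, list[float]],
                    labels: dict[str, int], top_k: int = 2) -> list[tuple[float, str]]:
    """Score each template by |correlation| with the label and keep the top_k."""
    customers = sorted(labels)
    y = [float(labels[c]) for c in customers]
    scored = []
    for feat in TEMPLATES:
        values = [feat.compute(transactions.get(c, [])) for c in customers]
        scored.append((abs(pearson(values, y)), feat.description))
    scored.sort(reverse=True)
    return scored[:top_k]


# Toy data: purchase amounts per customer, and whether each customer converted.
transactions = {"a": [120.0, 80.0], "b": [15.0], "c": [300.0, 50.0, 40.0], "d": []}
labels = {"a": 1, "b": 0, "c": 1, "d": 0}
for score, description in select_features(transactions, labels):
    print(f"{description}: |r| = {score:.2f}")
```

Ranking by absolute correlation keeps the example short, but it only captures linear, one-feature-at-a-time relationships; the descriptions travel with the features, so whatever is selected can be reported in plain language.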

The enterprise data that companies want to feed into machine learning models is typically a collection of complex, related tables, Fujimaki says. Data scientists look at the data, pick out a handful of the tables, and turn them into vectors for the machine learning models to process.
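As a rough illustration of that flattening step, the sketch below aggregates two hypothetical child tables (flights and card transactions) per customer and joins the results onto a parent customer table, producing one fixed-length numeric vector per customer. The table layouts, field names, and the `flatten` helper are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical related tables, all keyed by customer_id.
customers = [{"customer_id": 1, "age": 34}, {"customer_id": 2, "age": 51}]
flights = [
    {"customer_id": 1, "destination": "HNL"},
    {"customer_id": 1, "destination": "LAX"},
    {"customer_id": 2, "destination": "HNL"},
]
card_txns = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 2, "amount": 40.0},
    {"customer_id": 2, "amount": 60.0},
]


def flatten(customers, flights, card_txns):
    """Aggregate each child table per customer, then join onto the parent rows."""
    n_flights = defaultdict(int)
    n_hnl = defaultdict(int)  # flights to Honolulu
    for f in flights:
        n_flights[f["customer_id"]] += 1
        if f["destination"] == "HNL":
            n_hnl[f["customer_id"]] += 1
    spend = defaultdict(float)
    for t in card_txns:
        spend[t["customer_id"]] += t["amount"]
    # One vector per customer: [age, total flights, Honolulu flights, card spend].
    return {
        c["customer_id"]: [
            float(c["age"]),
            float(n_flights[c["customer_id"]]),
            float(n_hnl[c["customer_id"]]),
            spend[c["customer_id"]],
        ]
        for c in customers
    }


print(flatten(customers, flights, card_txns))
```

Each aggregate (a count, a filtered count, a sum) is a choice the data scientist makes by hand here; automating that choice is the step the article describes dotData targeting.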

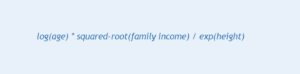

However, the features that data scientists construct are often convoluted formulas, such as the log of a person’s age times the square root of the family income, divided by the number of family members, Fujimaki says.

“Well, this doesn’t mean anything from the domain point of view,” he says. “It may be highly correlated to something you want to predict, but business users cannot get insight from the feature. On the other hand, our features are very transparent, and thanks to the natural language explanation, business users can easily consume our features of insight. That’s why our customers really like our features.”
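To make the contrast concrete, the sketch below computes the kind of opaque engineered feature described above next to a transparent alternative. Both function names and the sample inputs are hypothetical; the point is only that the first number has no direct business reading while the second does.

```python
import math


def opaque_feature(age: float, family_income: float, family_members: int) -> float:
    # log(age) * sqrt(income) / members: may correlate with the target,
    # but a business user cannot say what any particular value means.
    return math.log(age) * math.sqrt(family_income) / family_members


def income_per_member(family_income: float, family_members: int) -> float:
    # A transparent feature: household income per family member.
    return family_income / family_members


print(opaque_feature(40, 90_000, 3))
print(income_per_member(90_000, 3))
```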

Explainable AI

dotData’s software has been adopted by companies in the financial services, telecom, retail, pharmaceutical, transportation, and airline industries. One of its early customers is Japan Airlines, which used dotData’s software as part of a promotional campaign for trips to Hawaii, a popular destination in the summer for Japanese citizens.

Fujimaki explains how his software helped Japan Airlines analyze upwards of 20 customer data points, such as the customers’ flight history, point history, use of credit cards, and Web site access history, as part of that campaign. “We put everything in the machine, and asked it ‘I want to predict who’s going to Hawaii,'” Fujimaki says. “In the end, the machine generates a lot of interesting features.”

But one particular piece of data stood out, and the dotData Platform created a new feature based on the data, which Japan Airlines used successfully with its campaign. “After they found this feature with our engine, they could give a clear business interpretation about why this customer is going to Hawaii,” Fujimaki says.

Naomasa Shibuya, a data scientist with Japan Airlines, agrees. “dotData’s automated feature engineering works exceptionally well with our massive transactional datasets, continuously giving JAL deeper business insights than we previously thought possible,” Shibuya says.

Accuracy is important in data science and machine learning. After all, improving recommendations and predictions is the name of the game. But another factor comes into play: the explainability of the predictions. While deep learning may provide better accuracy than the traditional linear algorithms that dotData uses, deep learning’s explainability challenges will limit its use in the enterprise, according to Fujimaki.

“Of course accurate predictions is important,” he says. “But customers can find a lot of new insight in a transparent way, so we can clearly explain ‘What does this feature mean and how this is created,’ and that transparency gives customers more confident to take some action based on this insight.”
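One reason linear models are easier to explain is that a prediction decomposes into additive per-feature contributions (weight times value). The sketch below shows this for a logistic-regression-style scorer. The feature names, weights, and bias are invented for illustration; they are not from dotData’s platform, and they are not the feature Japan Airlines actually used in its campaign.

```python
import math

# Hypothetical weights for a linear (logistic) model predicting a Hawaii trip.
WEIGHTS = {"past_hawaii_trips": 1.2, "points_balance_k": 0.03, "site_visits_30d": 0.4}
BIAS = -3.0


def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the predicted probability and each feature's contribution to the logit."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Rank features by how strongly they pushed the score up or down.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, reasons = explain({"past_hawaii_trips": 2, "points_balance_k": 50, "site_visits_30d": 3})
print(f"P(trip) = {prob:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Because the score is a sum, each contribution can be reported directly to a business user; a deep network offers no equally direct breakdown.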
