Confluent Strengthens IoT Integration for Enterprise Kafka

A new release of Confluent’s enterprise Kafka platform unveiled Tuesday should make it easier for developers to integrate streaming Internet of Things (IoT) data into their analytics infrastructure. The company’s release of Confluent Platform 5.0 also brings security updates, new KSQL features, and better overall management of Kafka operations.

While Confluent is the major developer of the open source Apache Kafka project, the company also develops and sells a full-featured Kafka-based system dubbed the Confluent Platform that brings an array of enterprise features demanded by the largest Kafka users.

In addition to including Apache Kafka 2.0, Confluent Platform 5.0 brings an array of other new features, including IoT integration enhancements. Specifically, Confluent has bolstered support for IoT use cases by adding support for a standard protocol called Message Queuing Telemetry Transport (MQTT).

Confluent selected MQTT for a few reasons, says Joanna Schloss, director of product marketing for the Palo Alto, California company. For starters, it provides a lightweight, payload-agnostic transport layer that can also support hierarchical data. MQTT also provides message delivery guarantees that will serve the different quality-of-service demands of customers.
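To make the "hierarchical" point concrete: MQTT topic names are slash-separated levels, and subscription filters can use `+` to match exactly one level or a trailing `#` to match any remaining levels. The sketch below is a minimal, simplified matcher for those rules. The `fleet/...` topic names are hypothetical, chosen only for illustration, and the function ignores the special handling of topics that begin with `$`.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter.

    Simplified sketch: '+' matches exactly one level, and a trailing '#'
    matches the parent level plus any number of child levels.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")

    for i, level in enumerate(filter_levels):
        if level == "#":
            # The multi-level wildcard is only valid as the last level.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            # The filter has more levels than the topic name.
            return False
        if level != "+" and level != topic_levels[i]:
            return False

    # Without a '#', both sides must have the same number of levels.
    return len(filter_levels) == len(topic_levels)


# Hypothetical topic names for a fleet of trucks.
print(topic_matches("fleet/+/fuel", "fleet/truck42/fuel"))
print(topic_matches("fleet/#", "fleet/truck42/engine/temp"))
print(topic_matches("fleet/+/fuel", "fleet/truck42/engine/fuel"))
```

A subscriber interested in every fuel sensor could use a filter like `fleet/+/fuel`, while `fleet/#` would cover every reading from every truck.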

Prior to this release, IoT developers had to insert an MQTT broker into the middle of their data pipelines before building a custom ingestion pipeline into Kafka, Confluent says. With the prebuilt MQTT Proxy, Confluent Platform users can eliminate that step, and avoid the extra hassle, complexity, and performance drag that accompanies it.

IoT use cases are rising among Confluent users, Schloss says. “We’re seeing demand across all different verticals, from digital natives to traditional brick and mortar companies adopting Apache Kafka,” Schloss tells Datanami via email. “We’re also seeing demand to deliver on different business applications, from fleet logistics optimization to healthcare monitoring applications to connected car initiatives.”

Organizations with IoT initiatives are adopting Confluent Platform to harness streaming event data to achieve operational advantages, she says. “For example, Confluent Platform allows a truck manufacturing organization to use their fuel sensors to predict fuel consumption, schedule pit stops with routes, and have fueling stations already identified for their driver,” Schloss says.

Other organizations are using the enterprise Kafka platform to integrate IoT and predictive analytics capabilities in areas like preventative maintenance, operational optimization (such as turning off the AC in vacant rooms), generating recommendations, and detecting fraud from mobile/ATM devices, she says.

Confluent Platform 5.0 also brings enhancements to KSQL, the new SQL-based stream processing engine that the company added in a previous release of its platform. With version 5.0, Confluent is bolstering KSQL with several new features, including:

- A new graphical development zone in the Control Center that will help developers get more out of KSQL. “Developers can create streams and tables from topics, experiment with transient queries, and run persistent queries to filter and enrich data,” Confluent says in its blog. “What’s more, they can do all this with the power of autocompletion, which lowers the bar to getting started with stream processing.”

- Support for nested data, which will let users work with nested structures in Avro and JSON formats.

- Support for user-defined functions (UDFs) and user-defined aggregate functions (UDAFs) to augment KSQL’s existing scalar and aggregation functions. Confluent says these features will make it easier for users to build machine learning applications, for example.

- Support for stream-stream, table-table, and stream-table joins, including inner, outer, and left joins “where appropriate for the joined entities.”

On the security front, Confluent developed a new LDAP authorizer plug-in that makes it easier for IT managers to integrate Kafka into their security operations, which will help to ensure that unauthorized personnel do not gain access to sensitive data. The new feature supports established LDAP or Active Directory implementations, and lets managers control access via user- and group-based access control lists (ACLs).

Confluent has also bolstered the security capabilities of Control Center by giving managers better control over who has access to sensitive features in the Confluent Platform, including topic inspection (see below), schemas, and KSQL. “When customers restrict access to a feature via the configuration file, Control Center’s UI will reflect this change upon startup, and users cannot circumvent these protections in any way,” the company writes in a blog post.

Confluent already delivered disaster recovery capabilities in its platform by way of its Replicator feature, which ensures that topic messages and metadata are replicated from a primary to a secondary cluster.

With Confluent Platform 5.0, the company is ensuring that any client applications built on Replicator are also replicated. Specifically, the company is making sure that when a failover occurs, the “consumer offset translation” is handled correctly so that clients can resume processing on the secondary cluster near where they stopped on the primary cluster.
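Offsets need translating because the same message generally sits at a different offset on each cluster. The article does not describe how Replicator does this, so the following is only an illustrative sketch of one plausible approach: look up the timestamp of the consumer's committed position on the primary, then find the first message on the secondary with a timestamp at or after it. The function name and the in-memory `(offset, timestamp)` lists are hypothetical stand-ins for real cluster state.

```python
from bisect import bisect_left
from typing import List, Tuple

Log = List[Tuple[int, int]]  # (offset, timestamp_ms), sorted by offset


def translate_offset(primary: Log, secondary: Log, committed_offset: int) -> int:
    """Map a committed offset on the primary to an offset on the secondary.

    Assumes timestamps are non-decreasing within each log and that the
    committed offset is either present in the primary log or past its end.
    """
    # Offset a consumer would use if it has nothing earlier to resume from.
    secondary_end = secondary[-1][0] + 1 if secondary else 0

    timestamp_by_offset = dict(primary)
    if committed_offset not in timestamp_by_offset:
        # The consumer was fully caught up on the primary.
        return secondary_end

    target_ts = timestamp_by_offset[committed_offset]
    secondary_timestamps = [ts for _, ts in secondary]

    # First secondary message whose timestamp is >= the committed position.
    idx = bisect_left(secondary_timestamps, target_ts)
    if idx == len(secondary):
        # That message has not been replicated yet.
        return secondary_end
    return secondary[idx][0]


# Hypothetical logs: same messages, different offsets on each cluster.
primary_log = [(100, 1000), (101, 1010), (102, 1020), (103, 1030)]
secondary_log = [(0, 1000), (1, 1010), (2, 1020), (3, 1030)]
print(translate_offset(primary_log, secondary_log, 102))
```

Because timestamps are coarser than offsets, an approach like this lands the consumer near, not necessarily exactly at, its previous position, which is consistent with the "near where they stopped" wording above.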

This release also brings a new view in the Control Center management console that will let managers view and compare specific broker configurations across multiple Kafka clusters. This will help ensure that things like download and security configurations are the same, minimizing the chance of problems.

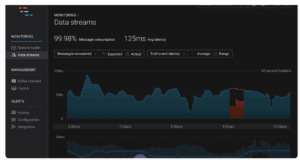

Another new Control Center feature helps managers monitor for consumer lag. According to Confluent, this helps managers to understand how Kafka consumers are performing based on the offset, and also to spot potential issues so they can take proactive steps to keep performance running high.
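Consumer lag is conventionally measured per partition as the gap between the latest offset written to the log and the offset the consumer group has committed. The sketch below shows that arithmetic on plain dictionaries. The function name and sample numbers are hypothetical, and this is not a description of how Control Center computes or displays the metric.

```python
from typing import Dict


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Return per-partition lag: log end offset minus committed offset.

    Simplification: a partition with no committed offset is treated as if
    the consumer had committed offset 0.
    """
    return {
        partition: max(end - committed.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


# Hypothetical offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 1480, 2: 1510}
committed = {0: 1500, 1: 1200, 2: 1505}

lag = consumer_lag(end_offsets, committed)
print(lag)                # per-partition lag
print(sum(lag.values()))  # total lag for the consumer group
```

A lag that keeps growing on one partition, as partition 1 might here, is the kind of signal that suggests a slow or stalled consumer.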

The new topic inspection feature in Control Center helps users gain insight into the actual data flowing through their Kafka topics. According to Confluent, users can see the streaming messages in topics and read key, header, and value data for each message.

Lastly, the Confluent Schema Registry helps Kafka users understand how data is organized. With this release, Confluent has integrated the Control Center with the registry, which the company says will help developers build better applications.
