How Erasure Coding Changes Hadoop Storage Economics

(Timofeev Vladimir/Shutterstock)

The introduction of erasure coding with Hadoop 3.0 will allow users to cut the raw disk space their data consumes by up to 50%. That’s great news for users who are struggling to store all their data. However, that storage boost comes at the expense of greater CPU and network overhead. Balancing the demands of cost and performance will become a more important exercise for Hadoop users, experts say.

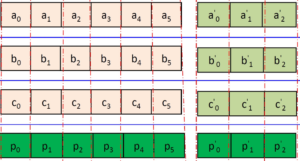

Erasure coding is a data protection technique that essentially breaks up a given piece of data into separate fragments, computes additional “parity bits” from those fragments, and then spreads the data and parity out across multiple drives in a cluster. With this approach, the parity bits ensure that data can still be recovered even if multiple drives fail.
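
The fragment-plus-parity idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the scheme HDFS uses: it computes a single XOR parity fragment, which can repair only one lost fragment, whereas production systems rely on stronger codes (such as Reed-Solomon) to survive several failures. The function names `encode` and `recover` are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal fragments (zero-padded) plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def recover(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing fragment (marked None) by XOR-ing the survivors."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one lost fragment")
    repaired = list(fragments)
    if missing:
        survivors = [f for f in fragments if f is not None]
        repaired[missing[0]] = reduce(xor_bytes, survivors)
    return repaired


# Simplified demo: 3 data fragments + 1 parity fragment, one "drive" lost.
original = b"hadoop erasure coding"
stored = encode(original, k=3)
stored[1] = None                      # simulate a failed drive
restored = recover(stored)
print(b"".join(restored[:3])[:len(original)])  # b'hadoop erasure coding'
```

Because each fragment lives on a different drive, rebuilding the lost one requires reading all the survivors, which is where the extra network and CPU cost comes from.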

Hadoop has adopted erasure coding with version 3.0, which the Apache Software Foundation released in December. This marks a major change for the Hadoop Distributed File System (HDFS), which up to this point always made three copies of data to protect against data loss. Compared to that 3x storage overhead, erasure coding is expected to bring an overhead of about 1.5x, which translates to a 50% reduction in the raw storage needed for the same data.
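
The arithmetic behind the 3x and 1.5x figures can be worked through directly. The sketch below assumes a layout of 6 data units plus 3 parity units, which is one common way to arrive at 1.5x; the article itself does not name a specific scheme. The 900 TB cluster size and the helper names are made up for illustration.

```python
from fractions import Fraction


def replication_overhead(copies: int) -> Fraction:
    """Raw bytes stored per logical byte when every block is copied `copies` times."""
    return Fraction(copies)


def erasure_overhead(data_units: int, parity_units: int) -> Fraction:
    """Raw bytes stored per logical byte with `data_units` data + `parity_units` parity."""
    return Fraction(data_units + parity_units, data_units)


def usable_capacity(raw_tb: int, overhead: Fraction) -> Fraction:
    """Logical data (in TB) that fits in `raw_tb` of raw disk at the given overhead."""
    return raw_tb / overhead


raw_tb = 900  # hypothetical cluster with 900 TB of raw disk

triple = replication_overhead(3)   # 3x
coded = erasure_overhead(6, 3)     # assumed 6 data + 3 parity units -> 1.5x

print(usable_capacity(raw_tb, triple))  # 300
print(usable_capacity(raw_tb, coded))   # 600
```

Under these assumptions, the same logical data needs half the raw disk, or equivalently the same raw disk holds twice the logical data.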

While erasure coding boosts storage efficiency, it puts a heavier load on the network, since all disk reads will travel over the network. There is also some extra CPU overhead associated with erasure coding. This is a big change from the traditional triple replication scheme, where the concept of “data locality” sprang up to help avoid the need for HDFS to pull data over the network. (All writes in HDFS have always traveled across the network, but reads didn’t necessarily have to.)

Redistribution of Data

Over the years, networking gear has improved faster than storage. 10Gbps Ethernet networks are now the standard, and 40Gbps networks aren’t uncommon.

Networking and compute capacity have improved relative to storage in many Hadoop clusters (Inara Prusakova/Shutterstock)

The result is that many Hadoop users have network capacity to spare, but are still struggling to store all their data. Since the only way to add storage was to add more nodes, compute capacity also grew. Implementing erasure coding is one way to convert that extra network and compute capacity into storage capacity.

“It’s good news in a way because you’re trading a seemingly endless supply of CPU clock-ticks for what tends to be a finite supply of storage space in today’s world,” says Hortonworks CTO Scott Gnau. “I’ve never heard anybody saying, ‘Gee, I don’t have a problem storing all the data that I want to keep.’”

Gnau sees Hortonworks customers adopting erasure coding gradually, and carefully matching the new storage technology to specific use cases. “I don’t see this as an across-the-board change,” he says. “I think there is some data and there are some use cases that will fit really well, and there’s some data where [erasure coding] just doesn’t make any sense.”

Like Hortonworks, MapR plans to add erasure coding to its big data platform, which includes Hadoop, NoSQL storage, and real-time data processing, sometime around the middle of the year.

“It’s a completely brand new way of storing the data,” says Vikram Gupta, senior director of product management at MapR. “When you create the volume and you want to store data, you have to pick either replication or erasure coding.”

Gupta says erasure coding could bring upwards of a 50% performance penalty, which could be manageable for customers who have extra CPU capacity. “The tradeoff here is you’re reducing cost versus performance,” he says.

Cloudera has benchmarked erasure coding on a small 10-node cluster that had plenty of network bandwidth and found that erasure coding actually boosted overall performance, despite the increased network traffic and CPU overhead, says Doug Cutting, Cloudera chief architect and co-creator of Apache Hadoop.

“That was nice to see the result,” Cutting tells Datanami. “I think there’s a little more hardware acceleration for some of the operations, so there are cases where it gets faster, as well as smaller. But nobody has deployed it yet in really large scale, at least that I’ve talked to. As you start to scale up, there could be cases where it’s slower.”

Adopting Erasure Coding

Smaller clusters in general will be more suited to using erasure coding from a network-bandwidth-availability perspective, Cutting says. “The bulk of clusters are 10 to 50 nodes, and most of those are using a single switch and they’re going to have plenty of network bandwidth from node to node,” he says.

Erasure coding is often considered an advanced file-level RAID technique (image courtesy Nanyang Technological University)

Organizations that want to use erasure coding, particularly those running larger clusters of 100-plus nodes that span multiple racks, will need to pick and choose their use cases more carefully, he says.

“It depends on the computation,” Cutting says. “If you’re doing machine learning computation, a lot of those are going to be compute-intensive. If you’re doing standard data processing things, and certain classic queries, then they are going to be I/O intensive.”

Classic Hadoop applications, such as MapReduce, Impala, and even Spark, have always had a “fair amount” of network traffic to begin with, Cutting says. That’s largely due to MapReduce’s “shuffle” phase, which always included touching all the nodes and thus moving data through the switches. These applications are more likely to be I/O bound, and thus more likely to benefit from preserving data locality and less likely to benefit from the storage boost that erasure coding brings.

“As we move to erasure coding, at least for small clusters, keeping inputs local isn’t probably going to give you a noticeable performance improvement,” he says. “And if you move to a large cluster, then erasure coding may have some performance impact on reads, but maybe that’s acceptable given the other advantages.

“There are a lot of variables that come into play,” he continues, “and anybody who’s going to be switching to erasure coding is going to need to keep those things in mind.”

New Paradigm

Tom Phelan, co-founder of big data virtualization software vendor BlueData, says the advent of erasure coding marks the beginning of a new era for Hadoop – one that embraces the separation of compute and storage.

“For quite a while the Hadoop community was consistently putting forth the message that the only way to deliver high-performance big data clusters was to co-locate compute and storage,” he tells Datanami. “We’ve watched that community and the big data industry in general evolve that message closer now to what BlueData has always been saying, which is that you can effectively have high-quality big data analytics without co-locating those two resources.

“I think this is tremendous progress,” he continues. “It flips the whole paradigm.”

As Cloudera gets closer to shipping its Hadoop 3.x distribution – expected in the first half of the year – the company plans to offer more resources to help the Hadoop community understand the costs and benefits that are associated with erasure coding.

Hortonworks also expects to offer performance tools to help customers understand where their bottlenecks are and predict how erasure coding could affect them.

MapR, for its part, plans to add new features to its data fabric that automatically store hot data using the triple-replicated method (which boosts data locality) and then use erasure coding to store colder data.
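
A hot/cold tiering rule of this kind can be sketched as a simple policy function. Everything here is hypothetical: the `FileStats` record, the `choose_layout` function, and the 30-day threshold are illustrative assumptions, not MapR’s actual design or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Layout(Enum):
    REPLICATION = "3x replication"
    ERASURE_CODING = "erasure coding"


@dataclass
class FileStats:
    path: str
    last_access: datetime


def choose_layout(
    stats: FileStats,
    now: datetime,
    cold_after: timedelta = timedelta(days=30),  # hypothetical threshold
) -> Layout:
    """Keep recently read data replicated for locality; erasure-code data that has gone cold."""
    if now - stats.last_access >= cold_after:
        return Layout.ERASURE_CODING
    return Layout.REPLICATION


now = datetime(2018, 2, 1)
files = [
    FileStats("/logs/today", datetime(2018, 1, 31)),    # read yesterday: hot
    FileStats("/archive/2016", datetime(2017, 6, 1)),   # untouched for months: cold
]
for f in files:
    print(f.path, "->", choose_layout(f, now).value)
```

A real system would likely weigh more than last-access time, such as file size and read frequency, but the trade-off is the same one the vendors describe: locality for hot data, storage savings for cold data.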

In the end, erasure coding provides another choice, which is good for customers, says Hortonworks’ Gnau. “It’s cluster size, configuration and use case dependent and depending on each of those three requirements, you’ll end up with a different case,” he says. “It’s not one-size-fits-all.”
