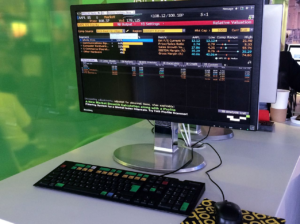

The Data Science Inside the Bloomberg Terminal

(Courtesy: Wikipedia)

When it comes to financial data, you can’t find a more recognizable product than the Bloomberg Terminal, which is used by hundreds of thousands of financial professionals around the world. While its amber-on-black text interface evokes a 1990s-era Unix vibe, the data science behind the scenes is definitely cutting edge.

The Bloomberg Terminal is no ordinary data product. Since Michael Bloomberg launched it back in 1982, the terminal has become a must-have tool for investors who need to track news, stocks, and market-changing events in real time. With about 325,000 users globally and subscriptions that reportedly cost about $20,000 per year, the product generates enough revenue to keep thousands of programmers and data scientists busy building ever more intelligence into the system.

Bloomberg’s Head of Data Science, Gideon Mann, recently talked with Datanami about the data science technologies and techniques that go into keeping the terminal on the cutting edge of information delivery in the big data era.

“There are so many things that happen in the real world that are relevant to our customers that machine learning is increasingly a phenomenal tool for structuring and normalizing that information,” Mann says.

Data Explosion

Two big things have changed over the last five years that have impacted how Bloomberg builds its terminal. First, the amount of data that’s accessible has increased exponentially, Mann says.

“It would have been feasible to just scan all the relevant newspaper headlines, but they can’t anymore,” he says. “If we hadn’t kept pace with the growth of information, then our clients would be feeling even more behind where they needed to be.”

The second driver is the changing face of finance. “Finance as a whole is becoming more programmatic, more machine-driven, less about working from your gut, more about very careful data analysis,” he says. “We’re enabling our clients to interact with our data in a quantitative kind of way.”

Whether that data is foot traffic at retail outlets collected from Foursquare or the paths of merchant ships tracked by satellite, it all needs to come together in an accessible manner in the Bloomberg interface.

‘Fanatical’ About Information

Today, the Bloomberg Terminal uses an array of data science tools and techniques, including machine learning, deep learning, and natural language processing (NLP), to separate signals from noise and surface valuable insights for financial professionals.

“One of the big uses of machine learning for us is simply processing and organizing and collating and making sense of the data coming in,” Mann says. “Some of that is news data, both our news and news we aggregate, but a lot of it is also financial analytics and financial data and reports.”

While the number of journalists employed by newspapers might be going down, the amount of potentially relevant information flowing around the world is going up. Processing the huge amount of data that’s generated on a daily basis is a monumental task that could not be accomplished without machine learning, Mann says.

“There’s so much news data, but it’s not systematic, so you can’t really understand it by poring over it manually,” he says. “But when you start to think about the world as being connected among all of these events and all these news stories, then you have to process it by stringing together these relationships. As you start to string together these relationships, you can only do that algorithmically.”
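To make the idea of "stringing together relationships" concrete, here is a minimal sketch in Python. It is not Bloomberg's method: it assumes each story has already been reduced to a list of the companies it mentions, and the company names, `build_graph`, and `related` are all hypothetical. It links companies that appear in the same story and then walks those links to find companies connected within a few hops.

```python
from collections import defaultdict, deque
from itertools import combinations


def build_graph(stories):
    """Link every pair of entities mentioned in the same story."""
    graph = defaultdict(set)
    for entities in stories:
        for a, b in combinations(sorted(set(entities)), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def related(graph, start, max_hops=2):
    """Breadth-first search: entities within max_hops of start, with hop counts."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    seen.pop(start)
    return seen


# Hypothetical stories, each reduced to the companies it mentions.
stories = [
    ["ChipCo", "PhoneCo"],
    ["PhoneCo", "RetailCo"],
    ["RetailCo", "ShipCo"],
]
graph = build_graph(stories)
links = related(graph, "ChipCo", max_hops=2)
print(links)
```

With these toy stories, PhoneCo is one hop from ChipCo and RetailCo is two, while ShipCo falls outside the two-hop limit. No single story mentions ChipCo and RetailCo together, which is the kind of indirect connection that is impractical to trace by hand at scale.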

Twitter, for example, is a source of “an immense amount” of new data, Mann says. “All day long people are tweeting about various things. Some of it is very material,” he says. “It’s not really possible to filter all of that to see what necessarily is relevant. But we do a lot of meta-analysis. We’ll do a sentiment analysis in real time for particular companies, or filters for breaking news or events that are happening.”
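As a rough illustration of real-time, per-company sentiment, the Python sketch below keeps a rolling average over the most recent messages about each company. It is not Bloomberg's implementation: the word lists, the `RollingSentiment` class, and the sample messages are invented for the example, and a production system would use a trained model rather than a keyword lexicon.

```python
from collections import defaultdict, deque

# Hypothetical toy lexicon; a real system would use a trained classifier.
POSITIVE = {"beats", "surge", "upgrade", "record", "strong"}
NEGATIVE = {"miss", "recall", "lawsuit", "downgrade", "weak"}


def score_text(text):
    """Return positive-word hits minus negative-word hits."""
    words = [w.strip(".,!?$#").lower() for w in text.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


class RollingSentiment:
    """Track the mean score of the last `window` messages per company."""

    def __init__(self, window=100):
        self.scores = defaultdict(lambda: deque(maxlen=window))

    def update(self, company, text):
        """Score a new message and return the company's updated mean."""
        self.scores[company].append(score_text(text))
        return self.mean(company)

    def mean(self, company):
        s = self.scores[company]
        return sum(s) / len(s) if s else 0.0


tracker = RollingSentiment(window=3)
first = tracker.update("ACME", "ACME beats estimates, record quarter")
second = tracker.update("ACME", "Analysts downgrade ACME after recall")
print(first, second)
```

The bounded `deque` drops the oldest score once the window is full, so the average follows recent messages instead of the whole history.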

Data Mining

There is also a lot of relevant data hidden in graphs and tables in the quarterly reports that are submitted by publicly traded companies. The challenge is that this data can’t be easily manipulated or communicated outside of its original state.

“It sounds boring. Tables are boring,” Mann says. “But there’s a huge amount of value in them.”

Back in the 1980s, Bloomberg employed an army of people to read the paper documents and retype the information into Bloomberg's systems, where it could be distributed electronically via the terminals. That approach has given way to increasingly automated methods over the years, to the point where machine learning algorithms can now reverse engineer a table and extract the relevant pieces almost entirely automatically.

“Over the last year or so, we’ve delivered very sophisticated machine learning and image recognition to look at images of the table, recognize the table, segment the table, and ingest that table in what they call straight-through processing, without human intervention, right into the database,” Mann says.

This type of data mining may sound pedestrian, but it is quite difficult to do accurately and at scale. For Bloomberg, it required extensive investment in building and running machine learning models, including deep learning.

“As a result we’ve extended the reach of the amount of data we can provide to our clients,” Mann says. “We’re kind of fanatical about that kind of information extraction.”

In addition to providing the news and stock data to subscribers, Bloomberg also provides analytic tools to help investors model their portfolios. These are complex statistical tools that also benefit from new analytic approaches.

Exploring New Tech

The company is constantly experimenting with new technologies and looking for ways they can improve the product. It tries to use open source products where it can, but it will buy a proprietary tool if equivalent functionality is not in the open realm.

Deep learning is currently one of the areas that Bloomberg’s R&D efforts are focusing on. The company has invested in software frameworks like TensorFlow and has high-powered GPU clusters to train and execute the models. The deep learning technology looks promising, but it’s not yet clear how it will be used.

“We’re all focused on trying to understand how deep learning can make a difference,” Mann says. “We’ve gotten very familiar with the standard machine learning tools. I think a real big question for us is what new [capabilities] do the deep nets offer.”

Bloomberg is also evaluating graph databases, but they have yet to gain much traction within the company. “We’ve been exploring graph database a bunch, but again, that’s one of the places where we’re still not totally sure how it fits in,” Mann says.

Building a Culture

Mann, who works in the Office of the CTO and comes out of the financial sector, is involved in many aspects of Bloomberg’s data science initiatives beyond just helping to guide development of new features in the terminal.

“We spend a lot of effort on recruiting — a huge amount,” Mann says. “We have a lot of academic outreach and we have a faculty speaker series and a faculty grant program. We go to a lot of conferences and we publish a fair amount of research.”

Like many companies that are leaders within their industries, Bloomberg works closely with the open source community. For example, its developers have committed back a large number of fixes and features for the Apache Solr search engine. “We have a lot of relationships with Solr/Lucene community,” Mann says. “It goes back and forth. That kind of a model feels right.”

Bloomberg has contributed back to other open source projects, too, including the Jupyter data science notebook, which is central to its Python data science initiatives. The company develops much of the production code that goes into the terminal using the C++ language for latency reasons; JavaScript is the favored language for general purpose application development.

“There’s a lot that we borrow,” Mann says. “I don’t want to build anything that anybody else has built better and is central to somebody else’s job. We’ve integrated a lot of TensorFlow. We do a lot of Kubernetes and Spark. We’ve started dipping our toe into Airflow.” Hadoop and HDFS are also widely used.

Mann is a founding member of the Data for Good Exchange (D4GX), a group Bloomberg founded to explore and promote the use of data science to solve societal problems. The fourth annual D4GX meeting takes place this Sunday, September 24, in New York City.

The Bloomberg Terminal has changed considerably over the years, and it will likely continue to evolve as the big data boom continues its expansion, and as technology improvements make it possible to process ever-larger amounts of data and surface the insights to wider audiences. It’s a steep curve, but Mann is eager to keep Bloomberg on top of it.

“The research isn’t standing still,” he says. “Not to sound too wild-eyed, but over the next decade or so, [the amount of change] is going to be immense. It’s going to change things really fundamentally. I imagine the world will still be mostly recognizable, but I think there will be immense change.”
