The Network is the New Storage Bottleneck


Along with the explosion in data center storage capacity and the major shift in storage architecture from scale-up to scale-out, there is a third trend that affects not only storage but also networking.

This third trend is the accelerating shift from Hard Disk Drives (HDDs) to Solid State Drives (SSDs), and it is happening for two reasons. First, the moving parts of HDDs (motors, heads, etc.) wear out over time, consume a lot of power, and require a lot of cooling. As a result, even though SSDs have not yet reached price parity with HDDs, their costs are much closer when Total Cost of Ownership (TCO) is considered.

Second, for many smaller IT organizations, matching each application's performance needs to the right storage (slower HDDs vs. faster SSDs) is too dynamic and resource-intensive a process. It is easier to deploy only SSDs in their data centers and eliminate the issue entirely.

Figure 2: Storage arrays (scale-up architecture) are being replaced by clusters of commodity servers running Software Defined Storage (SDS) applications, also called scale-out architecture.

Although the growing shift to SSDs has many benefits, it is causing trouble at the network level. SSDs are much faster than HDDs on every measure of storage performance: latency, bandwidth, and IOPS. As a result, SSDs can quickly exceed the capacity of traditional network connections.

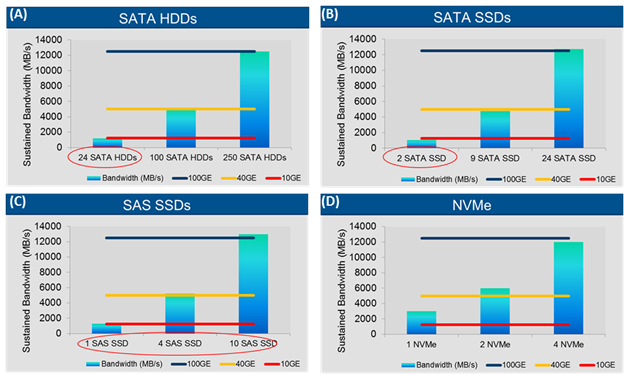

Here is an example of what I mean: Figure 4(A) compares various quantities of SATA HDDs against Ethernet speeds from 10 to 100Gb/s. Figure 4(B) shows the same disk interface technology, SATA, now connected to an SSD, and the results are dramatic.

Figure 4: Comparison of HDD and SSD bandwidth vs. Ethernet speeds

As Figures 4(C) and 4(D) show, when the same Ethernet speeds are compared against SSDs with faster interface types, it takes fewer and fewer drives to fill the bandwidth of an Ethernet port. With NVMe SSDs, just four drives fill a 100Gb/s Ethernet port. The message is clear: faster storage needs faster networks.
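The drive-count arithmetic behind Figure 4 can be sketched in a few lines of Python. The per-drive throughput values below are assumptions chosen as rough ballpark figures (about 200 MB/s for a SATA HDD, 550 MB/s for a SATA SSD near the SATA 6Gb/s ceiling, and 3.2 GB/s for an NVMe SSD), not the numbers used to draw the chart, and `drives_to_fill` is a hypothetical helper name. Protocol overhead is ignored.

```python
# ASSUMED ballpark sequential throughput per drive, in MB/s.
# These are illustrative values, not the data behind Figure 4.
ASSUMED_DRIVE_MBPS = {
    "SATA HDD": 200,
    "SATA SSD": 550,
    "NVMe SSD": 3200,
}

PORT_SPEEDS_GBPS = [10, 25, 40, 50, 100]


def drives_to_fill(port_gbps: int, drive_mbps: int) -> int:
    """Smallest number of drives whose combined throughput meets or exceeds the port line rate.

    Protocol overhead is ignored, so a real port saturates with slightly fewer drives.
    """
    port_mbit = port_gbps * 1000  # Gb/s -> Mb/s
    drive_mbit = drive_mbps * 8   # MB/s -> Mb/s
    return -(-port_mbit // drive_mbit)  # integer ceiling division


if __name__ == "__main__":
    for name, mbps in ASSUMED_DRIVE_MBPS.items():
        counts = ", ".join(f"{g}GbE: {drives_to_fill(g, mbps)}" for g in PORT_SPEEDS_GBPS)
        print(f"{name:9} -> {counts}")
```

Under these assumptions a 100Gb/s port needs roughly 63 SATA HDDs, 23 SATA SSDs, or just 4 NVMe SSDs, which is consistent with the trend the figure illustrates.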

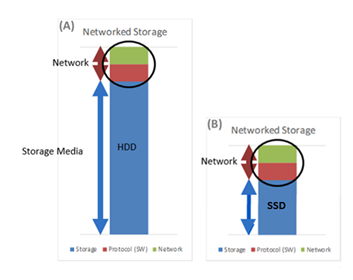

Figure 5: Comparison of HDD (A) and SSD (B) data access vs. network protocol and hardware times

The reason seems simple, but it’s more complex when you look a little deeper.

Figure 5 shows that wire speed is only half the problem when improving network performance. The other half is the protocol stack, which must be addressed with newer protocols such as RDMA over Converged Ethernet (RoCE) and NVMe over Fabrics (NVMe-oF).
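A rough way to see why wire speed is only half the problem: the time to serialize a block onto the link shrinks tenfold going from 10 to 100Gb/s, but a fixed per-I/O software and protocol cost does not. The sketch below assumes a 4 KiB block and a hypothetical 20 µs stack overhead (an illustrative placeholder, not a value taken from Figure 5), and ignores propagation, switching, and queuing delays.

```python
# HYPOTHETICAL fixed per-I/O software/protocol cost, in microseconds.
ASSUMED_STACK_OVERHEAD_US = 20.0
BLOCK_BYTES = 4096  # assumed 4 KiB I/O


def serialization_us(payload_bytes: int, link_gbps: float) -> float:
    """Time to clock the payload onto the wire (1 Gb/s = 1000 bits per microsecond)."""
    return payload_bytes * 8 / (link_gbps * 1000)


def io_latency_us(payload_bytes: int, link_gbps: float,
                  stack_us: float = ASSUMED_STACK_OVERHEAD_US) -> float:
    """Wire serialization time plus the fixed stack overhead."""
    return serialization_us(payload_bytes, link_gbps) + stack_us


if __name__ == "__main__":
    for gbps in (10, 25, 100):
        wire = serialization_us(BLOCK_BYTES, gbps)
        total = io_latency_us(BLOCK_BYTES, gbps)
        print(f"{gbps:>3}Gb/s: wire {wire:.3f} us, total {total:.3f} us, "
              f"stack share {ASSUMED_STACK_OVERHEAD_US / total:.0%}")
```

With these placeholder numbers, a 10x faster link cuts the total by only about 13 percent, because the stack overhead dominates. Reducing that overhead is what protocols like RoCE and NVMe-oF aim to do.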

NVMe-oF allows Non-Volatile Memory Express (NVMe), the new high-performance SSD interface, to be extended across RDMA-capable networks. It is the first networked storage technology built from the ground up in over 20 years.

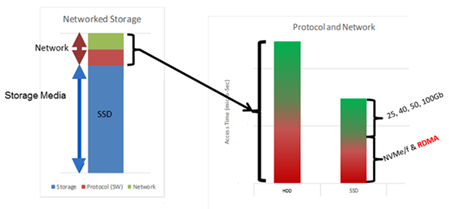

Figure 6: As storage latencies decrease, protocol and network latencies become relatively more important and must also be reduced.

Combined with the latest Ethernet and InfiniBand speeds, which now top out at 100Gb/s, NVMe-oF will not only dramatically improve the performance of existing storage network applications but also accelerate the adoption of many new and future technologies, such as Scale-out and Software Defined Storage, Hyperconverged Infrastructure, and Compute/Storage disaggregation.

NVMe-oF uses Remote Direct Memory Access (RDMA) technology to achieve very high storage networking performance.

Figure 7: RDMA allows direct, zero-copy and hardware-accelerated data transfers to server or storage memory, reducing network latencies and offloading the system CPU.

RDMA, whether over InfiniBand or over Ethernet with RoCE, allows data in memory to be transferred between computers and storage devices across a network with little or no CPU intervention. This is done through hardware transport offloads on RDMA-capable network adapters. The technology is not new: RDMA was borrowed from the High Performance Computing (HPC) market, where it has been used in InfiniBand networks for more than a decade.

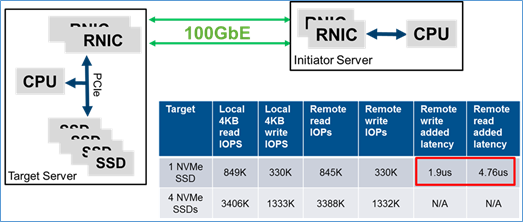

How fast is NVMe-oF? Performance depends on many factors: the SSDs, the Initiator (server) and Target (storage device) architectures, and the network components. Figure 8 shows the results of one test done for a storage conference in the fall of 2015.

Figure 8: A pre-standard NVMe-oF demo with Mellanox 100GbE networking demonstrates extremely low fabric latencies compared to using the same NVMe SSDs locally.

The most interesting data are the added latency numbers: the difference in latency between testing the SSDs locally in the Target server and testing them remotely across the network. Note that this was an early, pre-standard version of NVMe-oF that used highly optimized Initiator and Target systems, tightly integrated with the SSDs, over dual 100GbE RoCE connections using Mellanox ConnectX-4 Ethernet adapters. But even if these numbers were doubled or tripled, they would still represent performance that is unattainable with existing storage networking technologies.
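The "added latency" metric and the doubling/tripling argument reduce to simple arithmetic, sketched below. The local and remote latencies are HYPOTHETICAL placeholders, not the measurements in Figure 8; substitute the measured values to reproduce the argument for a real system.

```python
# HYPOTHETICAL latencies in microseconds, for illustration only.
# These are NOT the Figure 8 measurements.
LOCAL_US = 80.0   # SSD accessed locally in the Target server
REMOTE_US = 88.0  # same SSD accessed from the Initiator across the fabric


def added_latency_us(remote_us: float, local_us: float) -> float:
    """Latency contributed by the network and NVMe-oF stack on top of local access."""
    return remote_us - local_us


def remote_latency_if_scaled(local_us: float, added_us: float, factor: float) -> float:
    """Remote latency if the fabric-added portion were `factor` times larger."""
    return local_us + added_us * factor


if __name__ == "__main__":
    added = added_latency_us(REMOTE_US, LOCAL_US)
    for factor in (1, 2, 3):
        remote = remote_latency_if_scaled(LOCAL_US, added, factor)
        share = added * factor / remote
        print(f"{factor}x added latency: remote {remote:.1f} us, fabric share {share:.0%}")
```

Whether a doubled or tripled overhead is still acceptable depends on how large the added latency is relative to the SSD's own access time, which is why the measured gap, rather than the absolute remote latency, is the number to watch.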

In conclusion, the move from HDDs to SSDs is not slowing; in fact, it is accelerating. As a result, in storage networking applications the long-standing performance bottleneck is moving from the HDD to the network port. Fortunately, InfiniBand and the new, faster 25, 50, and 100Gb/s Ethernet speeds, along with RDMA protocols like RoCE, are proving up to the challenge. Faster storage needs faster networks!

About the author: Rob Davis is Vice President of Storage Technology at Mellanox Technologies and was formerly Vice President and Chief Technology Officer at QLogic. As a key evaluator and decision-maker, Davis takes responsibility for keeping Mellanox at the forefront of emerging technologies, products, and relevant markets. Prior to Mellanox, Mr. Davis spent over 25 years as a technology leader and visionary at Ancor Corporation and then at QLogic, which acquired Ancor in 2000. At Ancor Mr. Davis served as Vice President of Advanced Technology, Director of Technical Marketing, and Director of Engineering. At QLogic, Mr. Davis was responsible for keeping the company at the forefront of emerging technologies, products, and relevant markets. Davis’ in-depth expertise spans Virtualization, Ethernet, Fibre Channel, SCSI, iSCSI, InfiniBand, RoCE, SAS, PCI, SATA, and Flash Storage.
