Inside Dell EMC’s New Soup-to-Nuts Data Science Platform


Dell EMC today unveiled a new converged platform that combines the hardware and software data scientists need into a single package. Because data scientists can self-provision a curated set of Hadoop resources, they can start crunching data and delivering insights from Hadoop in a matter of weeks, rather than the months or years it often takes to stand up a working cluster, the company says.

It’s no secret that putting together a production-ready Hadoop cluster is a lot of work. Selecting a Hadoop distribution and installing it on a cluster of x86 servers is just the start. You must also manage it, secure it, and provision access to Hadoop resources. Oh, and don’t forget the governance, lest the data become unruly. All the data and hardware must be stitched together, which consumes the salaries of internal IT teams or ends up as billable hours on a consultant’s balance sheet.

In short, building a big data framework that uses data science to deliver business insights in a scalable and repeatable way (often referred to as operationalizing data science) is a lot tougher than it looks on TV.

The folks at Dell EMC are hoping to cut out a lot of the time and expense from this process with a new converged platform called the Analytic Insights Module (AIM). At a high level, AIM consists of Dell blades, EMC Isilon storage, VMware virtualization, certified Hadoop distributions from Hortonworks (NASDAQ: HDP) and Cloudera, and third-party Hadoop management tools from Attivio, BlueTalon, and Zaloni.

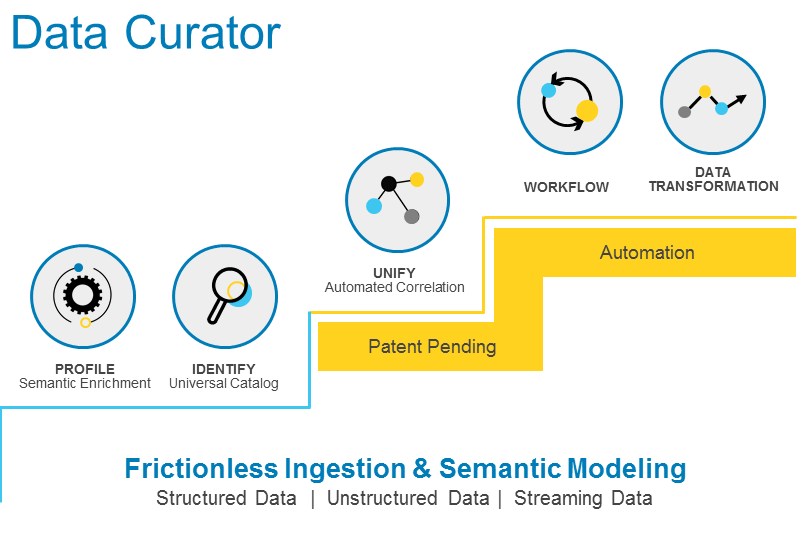

AIM’s Data Curator component is built partly on Zaloni’s solutions and simplifies data ingestion and cleansing

Bringing all these pieces together in a holistic manner gives the AIM customer more value and lower risk, says Ted Bardasz, senior director of product management for cloud native and big data solutions at Dell EMC.

“That’s been the medicine that a lot of the market has been looking for,” Bardasz tells Datanami. “How do you get the data scientist, who’s a scarce resource, to be extremely proficient…but reduce the risk in terms of the total platform, so that they’re really executing at a high level in terms of the insights they’re going after.”

Curated and Integrated Software

AIM was designed in the mold of Pivotal Cloud Foundry. But instead of making life easier for Web application developers, AIM aims to lift the data scientist above the detailed technical minutiae that often lead to six-to-18-month roll-outs of Hadoop clusters.

“What this platform does is take that buy-versus-build proposition and do the same thing,” Bardasz says. “We’re trying to bring that data scientist persona above the value line, where Dell EMC provides them the infrastructure and the solution to allow them to get the insights that are going to drive business value, such as increased revenue, decreased expenses, better customer interaction, and customer 360.”

Data scientists are still on their own when it comes to analyzing the data. There’s such a wealth of analytic tools out there, from Apache Spark and Anaconda to R and SAS, that Dell EMC didn’t think this was an area where it could improve things. Data scientists can use whatever analytic tools they want with AIM.

Instead, Dell EMC sought to make its mark by simplifying the selection and integration of the management and security tools that are necessary but inevitably add time, expense, and complexity to big data rollouts. Bardasz says Dell EMC’s research found a common thread among data scientists who were frustrated with the status quo.

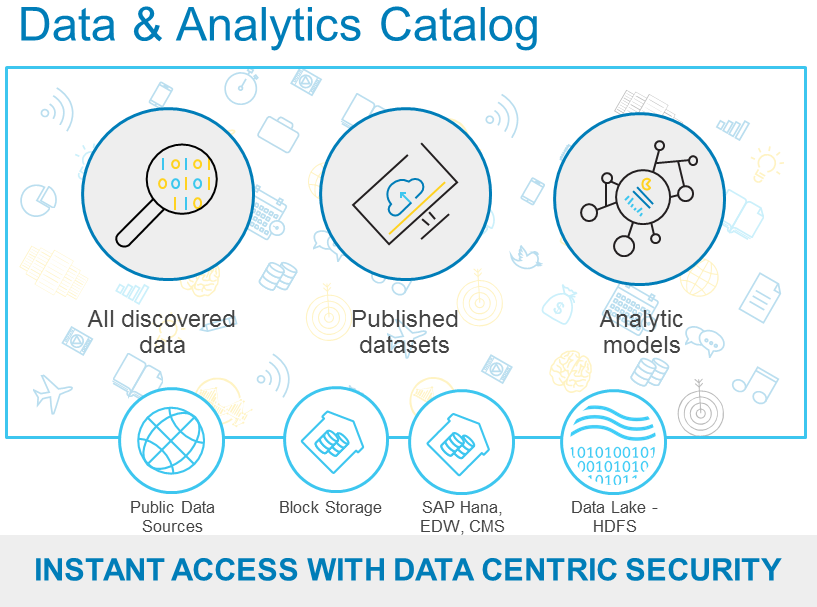

Data scientists can find raw and refined data in the AIM Data & Analytics Catalog, which is based on Attivio’s software

“What we really found was…they wanted the ability to discover data, to ingest it, to have a self-service environment, and the ability to secure it,” he says. “These were the key things that came back.”

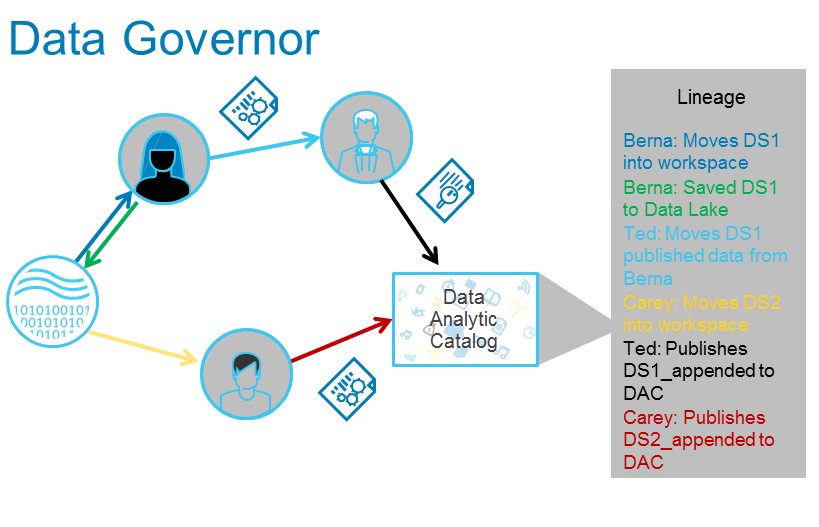

So Dell EMC set out to find the best third-party tools to help data scientists do that. The search led the company to Attivio for governance and data cataloging; BlueTalon for role-based access control and security; and Zaloni for data ingestion. The company has a patent pending for how it integrates these three products into the AIM solution.

Together, these three tools are critical for delivering the self-service experience to data scientists, Bardasz says. “They can see everything in the data lake. They can go out and sample it, and with one button click, bring it in,” he says. “We engineered it to all work together…We’ve invested in integration so it all works together as a smooth workflow, so the data scientist doesn’t have to worry about it, and give them a holistic system.”

Self-Provisioned Hadoop

By integrating VMware’s vCenter hypervisor into the offering, Dell EMC lets data scientists self-provision Hadoop resources in 10 to 15 minutes, says Matt Losanno, manager of product management big data solutions for Dell EMC.

The hope is that this new level of freedom will make data scientists a little more cognizant of the hardware resources they’re consuming, and kick off a virtuous cycle in which data scientists are not afraid to shrink their Hadoop clusters. IT professionals, in turn, would no longer cringe when data scientists spin up $50,000 worth of server resources without batting an eyelash and then fight vigorously to keep them.

The Data Governor component is based on BlueTalon’s software and provides tight security of Hadoop-resident data

This is a critical point, Losanno says, because Dell EMC’s research shows that the difficulty in provisioning Hadoop resources means that data scientists seldom reduce the size of their Hadoop clusters. “They’re like, ‘No way, man. It took me six months, I’ll never give it up. It’ll take me six months to get it back,’” Losanno says. “But now if they have a quota and they can deploy it within 10 minutes, they won’t mind tearing it down.”

While the real innovation is happening in the software layer (as is typically the case in big data), the integration is a big part of the AIM offering too. The company knows that the capability to deliver a cloud-like experience to customers who are adamant about keeping their data on-prem is critical to the success of this product.

The hardware layer starts with building blocks composed of Dell VxRail blades. The storage layer consists of EMC x410s, each with 75TB, which can be added as needed. As the VxRail fills up, customers move to the VxRack and eventually the massive VxBlock. “Once you get to VxBlock, it will go as big as you need it,” Bardasz says.

AIM doesn’t come cheap. The AIM software module itself is around $300,000, and once you bring minimum levels of storage and processing to the table, the starting price is about $1.3 million. That’s in line with the $1.5-million-to-$1.7-million range that Dell EMC sees as the sweet spot for production Hadoop clusters.

“Price hasn’t been the factor,” Bardasz says. “The value proposition is bringing that whole platform in. Lots of times, companies can’t even get to where what this provides. They’re sitting behind six months, one year, to one-and-a-half year [roll-outs]. They’re wrestling, spending a lot of money, and it’s not working right. But now you have underserved data scientists and application developers. We’re unleashing those resources.”
