Advanced Scheduling for Containerized, Microservice Applications

Containers are being adopted at a record-setting rate. Many container projects are still in pilot, dev, or test, but as these deployments reach production scale, resource constraints will surface. These constraints, whether compute-related or budgetary, will require more advanced methods of managing microservice workloads to make the best use of on-premises and cloud resources.

Technical computing and big data system administrators have known for many years that resource constraints and competition for compute resources always surface as multiple projects begin to reach scale. In a world of finite compute resources, it is inefficient to over-provision, hug servers, or create isolated, application-specific mini-clusters for each type of workload. A 2012 Gartner study put the industry-wide utilization rate at a remarkably low 12 percent. While utilization at this level is common in the broader data center world, it is far below the norm for HPC and technical computing clusters, which use advanced scheduling and policy management to keep cluster utilization high.

Container scheduling is highly complex

While it might seem that service- and microservice-based architectures are static and involve long-running services that rarely change, this is far from the case. Because components such as replication controllers and dynamic load balancers are themselves dynamic, the number and nature of executing service components may change many times in a single day. With containers, there are many more (and much smaller) moving parts than with traditional, more monolithic application development approaches.

The cloud is constrained too!

Cloud computing is perceived as offering limitless resources, but these cloud resources are ultimately constrained by budget. IDC predicts that by 2019, cloud IT infrastructure spending will be 46% of total expenditures on enterprise IT infrastructure. Managing this cloud spend by reducing over-provisioning and maximizing cloud utilization can save organizations significant amounts of money.

Navops Command – Bringing Advanced Scheduling to the World of Containers and Kubernetes

When Google designed Kubernetes, its open source container orchestration platform, it recognized early on that a simple, one-scheduler-fits-all approach would not serve the unique needs of enterprises well. Therefore, Kubernetes was designed with a modular architecture and the ability to readily replace the Kubernetes scheduler. Univa brings many years of scheduling experience in enterprise technical computing applications to scheduling on Kubernetes.
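To make the replaceable-scheduler idea concrete, here is a minimal sketch of how a workload can ask to be placed by a scheduler other than the default. It assumes a Kubernetes release that supports the `schedulerName` field in the pod spec. The scheduler name `my-custom-scheduler`, the pod name, and the helper `pod_manifest` are hypothetical and chosen only for illustration; this is not a description of how Navops Command itself is wired in.

```python
import json


def pod_manifest(name, image, scheduler_name):
    """Build a minimal Pod manifest that requests a specific scheduler.

    `scheduler_name` is whatever name the replacement scheduler registers
    under; the value used below is a hypothetical placeholder.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pods that omit this field are handled by the default scheduler.
            "schedulerName": scheduler_name,
            "containers": [{"name": name, "image": image}],
        },
    }


manifest = pod_manifest("web", "nginx", "my-custom-scheduler")
print(json.dumps(manifest, indent=2))
```

The printed JSON could be submitted with standard Kubernetes tooling. Pods that name a scheduler are left alone by the default one, which is what lets an alternative scheduler take over placement decisions.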

We are building upon our proven expertise and have architected the Navops Command scheduling capability to be Kubernetes-aware, with a slick container-oriented web interface as well as APIs and a command-line interface. Navops Command's ability to run on any Kubernetes-based distribution means that customers can choose to work with any number of vendors that support Kubernetes, such as Red Hat's OpenShift, Navops Launch, CoreOS's Tectonic, and Rancher, and still get the benefit of Command's advanced scheduling and policy management.

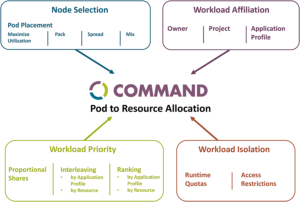

Navops Command includes enterprise-ready policies for managing workloads like:

- Sophisticated policies for managing SLAs, such as:
  - Management of proportional resource shares
  - Resource isolation by department or organization (dev, test,…)
  - Access restrictions and run time quotas
- Automated prioritization when resources become scarce, such as:
  - Resource interleaving
  - Application and resource ranking
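As an illustration of what "proportional resource shares" can mean in practice, the following is a minimal sketch of a generic weighted fair-share calculation. It is not Navops Command's algorithm. The function name `proportional_shares`, the group names, and the numbers are all assumptions made for the example. Each group receives capacity in proportion to its weight, and capacity a group cannot use is handed to groups that still have demand.

```python
def proportional_shares(capacity, weights, demands):
    """Split `capacity` among groups in proportion to `weights`.

    No group receives more than its entry in `demands`; capacity left over
    by a satisfied group is redistributed among the remaining groups.
    """
    allocation = {group: 0.0 for group in weights}
    active = {g for g in weights if weights[g] > 0 and demands.get(g, 0) > 0}
    remaining = float(capacity)

    while active and remaining > 1e-9:
        total_weight = sum(weights[g] for g in active)
        satisfied = set()
        handed_out = 0.0
        for g in active:
            fair = remaining * weights[g] / total_weight  # weighted fair share
            need = demands[g] - allocation[g]             # unmet demand
            grant = min(fair, need)
            allocation[g] += grant
            handed_out += grant
            if grant >= need - 1e-9:
                satisfied.add(g)
        remaining -= handed_out
        if not satisfied:
            break  # every active group took its full share; nothing is left
        active -= satisfied

    return allocation


# Hypothetical example: 100 CPU cores shared by three departments.
shares = proportional_shares(
    capacity=100,
    weights={"dev": 1, "test": 1, "prod": 2},
    demands={"dev": 10, "test": 50, "prod": 100},
)
print(shares)
```

In this example, `dev` needs less than its share, so the surplus is split between `test` and `prod` in their 1:2 ratio rather than sitting idle, which is the kind of behavior that raises overall utilization.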

Visit www.navops.io/command.html to download the beta version.