How TransUnion Maximizes Data Science Tools and Talent

You may know TransUnion as one of the credit bureaus that controls the interest rate on your new loan. But in fact the company does much more, and has solutions around fraud detection, collections, and marketing, among others. Keeping such a diverse data ship on the straight and narrow is no easy task, but TransUnion makes it easier thanks to a few key principles that drive big data development.

If you’re looking for big data scale, you need look no further than TransUnion (NYSE: TRU). Through its 25 data centers, the Chicago-based company maintains a staggering 30 petabytes of data, including credit-related records on more than 1 billion people and full credit histories on 500 million. TransUnion receives more than 3 billion monthly updates from its 90,000 or so data sources and processes more than 15 billion batch inquiries every month.

All told, the company maintains 30 petabytes of data, which it uses to serve the informational needs of 65,000 customers. With so much data, it’s not surprising that the $1.5-billion company is a big user of big data technologies, such as Spark and Hadoop, as well as more traditional technologies based on relational databases and data warehousing tools.

While the company is a big proponent of emerging tech, it must also ensure that it’s actually solving customer’s problems and not using technology for technology’s sake.

Kevin McClowry, director of analytic solution development for TransUnion, recently discussed with Datanami how the company strikes the right balance between speed and agility on the one hand, and continuity and governance on the other.

For McClowry, who sits at the junction of IT and data science, it all starts with listening to customers, which includes both TransUnion’s customers as well as his internal data science team.

“While these platforms are really powerful, we have some strong data science talent, and as a technologist, my job is to make sure they have what they need to deliver value,” he says. “We have a really strong collaboration between technology and analytics to make sure that the tools work for them, and that they’re manageable from an IT perspective.”

Incubator Mentality

McClowry attributes part of TransUnion’s success to the company’s incubator-like mentality, which allows data scientists to try out a range of tools with the company’s data set.

“We have very large scale R&D environment where we let users come and use what they want,” he says. “Then once we’ve deemed some value, we’ll allow it to graduate into true production, where we’ll actually start to build against those technologies and processes at scale.”

TranUnion’s 200 data scientists use a variety of tools to explore data sets, build models, and create applications that exploit data, including MapR‘s Hadoop distribution and IBM‘s Netezza data warehousing environment; open source tools like Python, R, Spark, Hive, Pig, and Drill; and commercial tools from SAS and Tableau (NYSE: DATA).

The company is a big user of open source tools, especially the ones that are evolving quickly, as so many in the big data science are today. But that doesn’t mean there’s no room for proprietary offerings as well. “We’re a big proponent of using technology to deliver value, rather than aligning to just one tech stack,” McClowry says.

This laissez-faire mentality has served TransUnion well, but it’s not a free-for-all. “We don’t really say ‘no’ much in terms of tool adoption, as it allows us to innovate,” he says. “Then when it comes time to operationalize and productionalize advancements, that’s where we’ll set up processes to help us govern that.

“It’s worked quite well for us,” he continues. “We have a user community that’s allowed to innovate and really use those big brains of theirs. Then we have a release management process that allows us to do so in a controlled manner when it comes time to scale that.”

Primed for Prama

Recently, TransUnion unveiled a new big data solution called Prama that enables customers to explore seven year’s worth of TransUnion consumer credit data in a self-service, interactive manner. It’s one of the first times TransUnion is allowing customers to directly access their content.

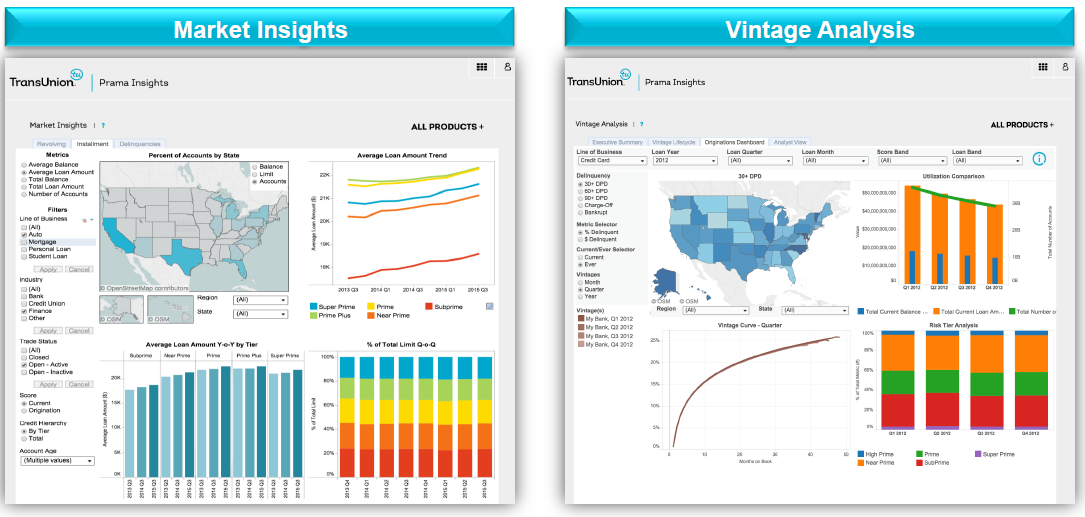

TransUnion launched Prama with two products

The Prama suite will eventually provide an end-to-end solution, but for now consists of two products in the Prama Insights category, including one called Market Insights and another called Vintage Analysis.

According to TransUnion, Prama builds on established BI reporting design principles, but delivers data using the company’s existing data pipeline and analytic resources. The company chose to deploy Prama atop MapR’s distribution and picked Apache Drill to serve as the back-end SQL data store powering the visualizations.

Hadoop has proven to be very adaptive to TransUnion’s needs, and it’s no surprise that the

company chose it to power Prama. “Certainly Hadoop in general helps us to scale very incrementally,” McClowry says. In terms of MapR specifically, functions like voluming, snapshotting, and mirroring have proven valuable to TransUnion. “MapR has made that quite easy,” he says.

Multi-Disciplinary Approach

TransUnion follows agile development precepts, and maintains small teams of developers who works closely with data scientists and IT professionals to develop new solutions. Whereas some development teams work in isolation with a “throw it over the fence” mentality, TransUnion has found big data works best when everybody is kept in the loop.

(Konstantin Chagin/Shutterstock)

“We’re very big on making sure we foster that multi-discipline team mentality,” McClowry says. “Our analytic and app dev teams are on an agile team together when it comes to operationalizing some of these production solutions. It’s a close transition, so that by the time something gets into a production state, it’s a mindshare between the two sides.”

Getting everybody to work together isn’t always easy. For instance, analytic and IT pros don’t’ always use the same language to describe the same thing, McClowry says. But the benefits of working through those minor disruptions are well worth it, he says.

“We realize that these are not slam-dunk, easy-to-build solutions,” he says. “We’re trying to innovate against data with new tech and new understanding of data and bring value to our customers, so it has to be a cross-team collaboration to do that. We found a way that works to keep it nimble.”

Related Items:

How DevOps Can Use Operational Data Science to See into the Cloud

8 Tips for Achieving ROI with Your Data Lake