Speak Easy: Why Voice Data Is Poised for Big Growth

Thanks to continued improvements in the accuracy of voice recognition technology, we’re closer than ever to having a natural conversation with computers without the interaction devolving into a frustrating, phone-throwing experience. As Mary Meeker explains in her recent Internet Report, we could be near an inflection point for voice-based interactions.

When Apple (NASDAQ: AAPL) first launched Siri on iPhones four years ago, the personal assistant was a bit of a novelty. It was fun to ask Siri off-the-wall questions and see what she would say. But over time, people have come to rely on Siri, and to appreciate the way that she seems to “learn” about her users and their preferences.

Since then, we’ve seen Internet giants launch their own voice-enabled digital assistants, including Google Now from Google (NASDAQ: GOOG), Echo from Amazon (NASDAQ: AMZN), and Cortana from Microsoft (NASDAQ: MSFT). Instead of peppering the assistants with random questions, people are starting to use them to do real things, such as re-ordering baby wipes from Amazon Prime.

Meeker did an excellent job compiling various pieces of data to paint a comprehensive picture of the state of voice-based human-computer interaction. Consider these voice-related stats from Meeker’s report:

- Since Google first launched Voice Search in 2008, the number of voice-based queries processed by Google’s search engine has increased 35-fold;

- The percentage of smartphone owners who use voice assistants went from 30% in 2013 to 65% in 2015;

- 25% of Bing searches performed in the Windows 10 taskbar are voice searches;

- Apple Siri handles more than 1 billion requests per week.

There are several reasons why voice is on the upswing. For instance, the ability to interact with a mobile phone hands-free is critical when driving a car. It’s also faster to get a result when you don’t have to type, which is especially true on smartphones.

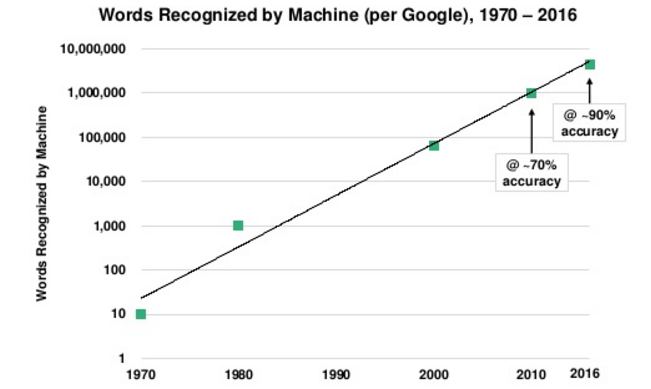

Source: Mary Meeker’s Internet Report 2016

Whatever the reasons, none of this would be possible without one crucial change in the machinery of voice recognition: better and faster machine learning algorithms.

According to Meeker’s report, the accuracy of voice recognition platforms has advanced considerably in the past few years. Baidu has gone from about 83% accuracy in 2012 to about 95% today. Google’s speech recognition system has advanced from about 78% accuracy in 2013 to about 92% in 2015, while Hound, a voice search and assistant app from SoundHound, claims its accuracy has risen from about 60% to about 95% over the past five years.

Meeker said that it’s still “early innings” in the voice recognition game, but it’s an area where Internet giants are investing heavily. Knowing what people want to read, buy, or listen to is very valuable, and companies like Google, Amazon, and Baidu are spending billions to build predictive systems that can do this better and faster.

Crossing the 99% accuracy threshold will be key to enabling more widespread adoption of voice recognition technology. “As speech recognition accuracy goes from say 95% to 99%, all of us in the room will go from barely using it today to using it all the time,” said Baidu Chief Scientist Andrew Ng, according to Meeker’s presentation. “Most people underestimate the difference between 95% and 99% accuracy – 99% is a game changer.”
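A little arithmetic shows why Ng treats those four percentage points as a big deal. The sketch below is a simplified illustration, not a model of any real recognizer: it treats the accuracy figure as a per-word rate, assumes each word is recognized independently, and uses a hypothetical 10-word voice request. Under those assumptions, going from 95% to 99% cuts expected errors from five per 100 words to one, and raises the chance that a whole request comes through error-free from roughly 60% to roughly 90%.

```python
# Simplified illustration only. Assumptions (not from the report):
# accuracy is a per-word rate, and each word is recognized independently.

def word_errors_per_100(accuracy: float) -> float:
    """Expected number of misrecognized words per 100 words."""
    # Round away floating-point noise such as 5.000000000000004.
    return round((1.0 - accuracy) * 100, 6)


def error_free_probability(accuracy: float, words: int) -> float:
    """Chance that an utterance of `words` words has no recognition errors."""
    return accuracy ** words


if __name__ == "__main__":
    request_length = 10  # hypothetical length of a typical spoken request
    for acc in (0.95, 0.99):
        print(
            f"accuracy {acc:.0%}: "
            f"~{word_errors_per_100(acc):.0f} word errors per 100 words, "
            f"{error_free_probability(acc, request_length):.1%} chance a "
            f"{request_length}-word request is error-free"
        )
    ratio = word_errors_per_100(0.95) / word_errors_per_100(0.99)
    print(f"99% accuracy means {ratio:.0f}x fewer errors than 95%")
```

The independence assumption is the main simplification; real recognition errors tend to cluster around accents, noise, and unusual words, so these numbers are directional rather than predictive.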

At the current rate, we’re probably about five to 10 years away from achieving that level of accuracy. Earlier this spring, Facebook CEO Mark Zuckerberg declared that improvements in machine learning would enable computers to perceive the world, including sights and sounds, as well as or better than humans. He said that point would arrive within the next five to 10 years.

Edd Dumbill, vice president of strategy at Silicon Valley Data Science, is optimistic about that future, with a caveat. “I’m very excited about the idea that you can talk to the computer,” he said in a recent Hadooponomics podcast. “But that only works if you can talk to computers however you want….You kind of still need to learn how to talk like a robot in order to make a robot listen to you.”


While the Internet giants work to solve the tricky machine learning problems, the wider developer community is starting to pick up on the trend. According to Meeker, third-party developers are moving quickly to adapt to voice as a computing interface.

Amazon is an early leader in this regard, especially with its Echo device, and it is expanding the potential for voice-enabled apps through its Alexa Voice Service, which exposes voice recognition capabilities through APIs. Developers need no expertise in natural language processing (NLP) to voice-enable an application, provided the device has a microphone and a speaker. Uber, Capital One, Domino’s, Pandora, Spotify, and Fitbit are reportedly using Alexa services.

Apple is also moving to ramp up development around Siri. Earlier this week, the company announced that it is opening Siri to third-party developers, a move that should expand the universe of iOS apps that work with its popular assistant.

As voice recognition improves, it will narrow the divide between the real world and the digital world. We’re already seeing data analytics startups, such as Boston-based indico, use deep learning models running on GPUs to analyze large volumes of text from news sites, financial filings, and other sources.

When everything we say can be instantly captured, transcribed, and mined for useful bits of information, it will open up all sorts of new opportunities, as well as risks. We’re not there yet, but we should start thinking about how we’ll navigate the world when computers can understand everything we say.

Related Items:

Unstructured Data Miners Chase Silver with Deep Learning

AI to Surpass Human Perception in 5 to 10 Years, Zuckerberg Says