Keeping on Top of Data Drift

Data is often thought to be constant and immutable. A given piece of data is defined by 1s and 0s, and it never changes. But there’s an emerging school of thought in the big data world that sees data as constantly drifting and mutating in response to forces around it. Without processes in place to detect it, this data drift can wreak havoc on one’s understanding, experts warn.

Organizations that collect huge amounts of data from many different sources, and process it or analyze it in many different ways, are the most likely to suffer the effects of data drift, says Girish Pancha, the CEO and co-founder of StreamSets.

“In the old days, we had a simple data lifecycle,” says Pancha, who previously worked at Informatica. “Data went from databases into data warehouses and applications, and was then fed into BI and reports and dashboards. That was the primary means of consumption.”

But those old data consumption patterns are rapidly changing. “In the new world, consumption has exploded,” he tells Datanami. “It’s not just BI but search, big data applications. You have OLAP SQL stores, a whole bunch of SQL on Hadoop flavors, NoSQL, NewSQL…People think of this as a greater variety of sources. But from our perspective, we think of it as the data itself drifts.”

Pancha elaborates. “What we mean by that is the sources themselves can drift from an infrastructure perspective,” he says. “You have data moving from on-premise to cloud, physical to virtual. But also the actual data structure and semantics also can mutate unexpectedly. This happens mainly because much of the data is a byproduct, it’s exhaust data, not necessarily accessed through traditional means of schema and APIs.”

The cleansing, transformation, and normalization of data is a big problem for any organization that wants to use data science to improve its competitive position, productivity, and profitability. Without accurate data, our ability to effect change in the real world is hampered. This situation is well understood, and is being addressed at many levels within the big data and Hadoop communities.

Data schemas can change unexpectedly–especially when using third-party data–leading to data drift within your organization.

Now you can count Pancha and his colleagues at StreamSets as the latest warriors to join the battle against bad data. Among them is Arvind Prabhakar, the company’s co-founder and CTO, an early Cloudera employee who worked on Apache Flume and Apache Sqoop.

Pancha shared his thoughts on the matter in a recent blog post on data drift. “Arvind had come to realize over his four year career at Cloudera that the best practice for most customers ingesting data into Hadoop was manually coding data processing logic and orchestrating them using open source frameworks,” Pancha writes. “I was flabbergasted! As Chief Product Officer at Informatica, I had spent more than a dozen years at Informatica delivering various technologies that automated the processing and moving of data into data warehouses. So why were people doing this manually for big data stores?”

The problem is that data drift can’t be solved with traditional ETL tools, such as those Pancha developed at Informatica. Older ETL tools are just too schema-centric to handle the unexpected changes that are evident in today’s big data sets, he says. And any attempt to rectify the problem manually will result in processes that lack transparency and are brittle.

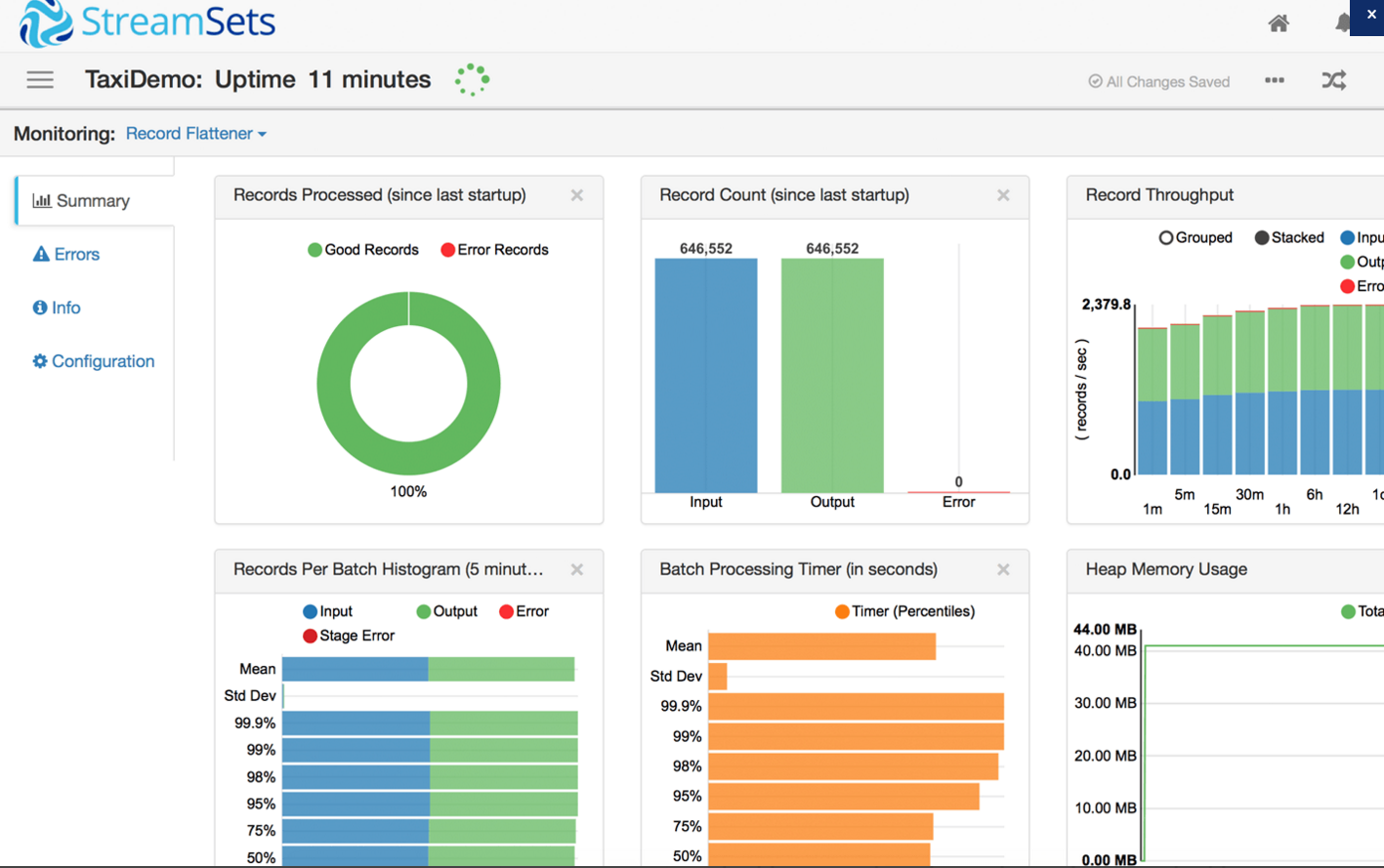

StreamSets came out of stealth last fall with a new product designed to help organizations keep on top of changing data. “In the first version we’re focusing on the capability to handle data drift,” Pancha says. “What that means is as data structures and data shapes change, we can automatically fix it if fixable or we can provide early warning alert if we think it’s not fixable or it’s a grey area. We may say ‘we fixed it but you should know this looks pretty different.'”
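The fix-or-warn behavior Pancha describes can be pictured with a small sketch. This is not StreamSets' logic: the function name `classify_drift`, the four outcome labels, and the policy behind them are all hypothetical, chosen only to show how a pipeline might separate a harmless change from one that needs a human.

```python
def classify_drift(expected, observed):
    """Compare an expected field list with the observed one.

    Hypothetical policy, for illustration only:
      - an expected field is missing  -> "alert" (not safely fixable)
      - only new fields have appeared -> "fixed_with_warning"
      - same fields, different order  -> "fixed"
      - identical layout              -> "ok"
    """
    missing = sorted(set(expected) - set(observed))
    extra = sorted(set(observed) - set(expected))
    if missing:
        return ("alert", f"missing fields: {missing}")
    if extra:
        return ("fixed_with_warning", f"new fields: {extra}")
    if list(expected) != list(observed):
        return ("fixed", "fields reordered")
    return ("ok", "no drift")


expected = ["user_id", "amount", "country"]

reordered = classify_drift(expected, ["country", "user_id", "amount"])
widened = classify_drift(expected, ["user_id", "amount", "country", "currency"])
broken = classify_drift(expected, ["user_id", "country"])
```

Here `reordered` is treated as silently fixable, `widened` as fixed but worth a look, and `broken` as an early warning, which mirrors the "we fixed it but you should know this looks pretty different" case in the quote.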

The emerging data economy is based largely on the ability for people and organizations to consume and process third-party data. But from a data consumer’s perspective, third-party data is more subject to drift than internal data, Pancha says. “What often happens in these situations is…the ordering of the data may change. Something that came in in field 1 is now field 20,” he says.
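The reordering problem is easy to reproduce. The sketch below, which assumes a made-up CSV feed with hypothetical field names (`user_id`, `amount`, `country`), shows why a consumer that looks fields up by name survives a change in column order, and how the change itself can be reported.

```python
import csv
import io


def read_by_name(text, wanted):
    """Return rows holding only the wanted fields, looked up by header name."""
    reader = csv.DictReader(io.StringIO(text))
    return [{name: row[name] for name in wanted} for row in reader]


def moved_fields(expected, actual):
    """Map each field that changed position to (old_index, new_index)."""
    return {
        name: (expected.index(name), actual.index(name))
        for name in expected
        if name in actual and expected.index(name) != actual.index(name)
    }


# Hypothetical third-party feed before and after the provider reorders columns.
old_feed = "user_id,amount,country\n42,19.99,US\n"
new_feed = "country,user_id,amount\nUS,42,19.99\n"

old_rows = read_by_name(old_feed, ["user_id", "amount"])
new_rows = read_by_name(new_feed, ["user_id", "amount"])

moves = moved_fields(
    ["user_id", "amount", "country"],
    ["country", "user_id", "amount"],
)
```

A consumer that read the first column by position would now receive a country code where it expected a user ID. The name-based reader returns the same rows for both feeds, and `moves` records which fields shifted so the change can still be surfaced.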

Because they are heavily schema driven, legacy ETL tools have difficulty coping with unexpected changes in the layout of a particular piece of data. But because StreamSets’ tool is “lightly schema driven,” it’s better able to adapt, Pancha says.

“We talk about it as we’re intent-driven,” he explains. “The schema is just being specified as a byproduct of your intent. Then we would be very resilient to these sorts of changes. If [a particular piece of information] showed up in an unexpected spot, we would find it, and we’d find it through a different means, [perhaps by] looking at the metadata to possibly things like profiling data and inferring if the metadata wasn’t there.”
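As a rough illustration of the two lookup paths Pancha mentions, the sketch below first checks the metadata (a header row) and, if that is absent, falls back to profiling the values. Everything here is an assumption made for the example: the helper names `find_column` and `locate`, the simplified email pattern, and the 90 percent match threshold. It does not describe how StreamSets implements this.

```python
import re

# Deliberately simple pattern, good enough for a sketch.
EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def find_column(rows, pattern, threshold=0.9):
    """Profile the data: return the index of the first column in which at
    least `threshold` of the values match `pattern`, or None."""
    if not rows:
        return None
    width = min(len(row) for row in rows)
    for col in range(width):
        hits = sum(1 for row in rows if pattern.match(row[col]))
        if hits / len(rows) >= threshold:
            return col
    return None


def locate(name, header, rows, pattern):
    """Prefer metadata (the header); fall back to profiling the values."""
    if header and name in header:
        return header.index(name)
    return find_column(rows, pattern)


rows = [
    ["US", "ann@example.com", "42"],
    ["DE", "bob@example.org", "7"],
]

with_metadata = locate("email", ["country", "email", "id"], rows, EMAIL)
without_metadata = locate("email", None, rows, EMAIL)
not_found = locate("email", None, [["a", "b"]], EMAIL)
```

Both lookups land on the same column whether or not a header is present. A real profiler would need far more care around ambiguous columns and sampling, which this sketch ignores.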

StreamSets software is designed to detect data drift, and automatically fix it, if possible.

StreamSets is designed to work upon data that has some structure to it, such as a JSON file. The tool can also act upon unexpected data suddenly showing up, Pancha says. “If you have a JSON formatted email showing up, we can say ‘Hey listen, nobody has ever really asked for this email, but nothing is being done. Perhaps this is something you may care about.'”

The software is agnostic about where it runs. It can run on a Hadoop cluster, or as a Spark Streaming job on a Mesos cluster. It can be used in batch, interactive, or real-time mode, but that isn’t as much of a factor, Pancha says. “The bottom line is our focus is very much adding value while data is in motion,” he says. “We think of this as a continuous problem, about trying to be resilient to change and data drift.”

The underlying technology behind StreamSets is available under an open source license, and the company offers paid subscriptions. So far, more than 100 organizations have downloaded the software and started using it, while paying customers number in the double digits.

Among the early adopters is Cisco Systems. The company is using it internally to improve the quality of data used in inter-cloud services and to monitor for data drift among various components in the data center, including Kafka and ElasticSearch, Pancha says.

StreamSets is based in San Francisco, and completed a Series A round of financing last fall that netted the company $12.5 million.
