Startup to Offer Neural Network Training in the Cloud

One of the most time-consuming components of using deep neural networks is training the algorithms to do their jobs. It can often take weeks to train or re-train the network–and that’s after you set it up. A startup called minds.ai today announced how it plans to tackle that problem: by allowing customers to train their neural network on its speed cloud-based GPU cluster.

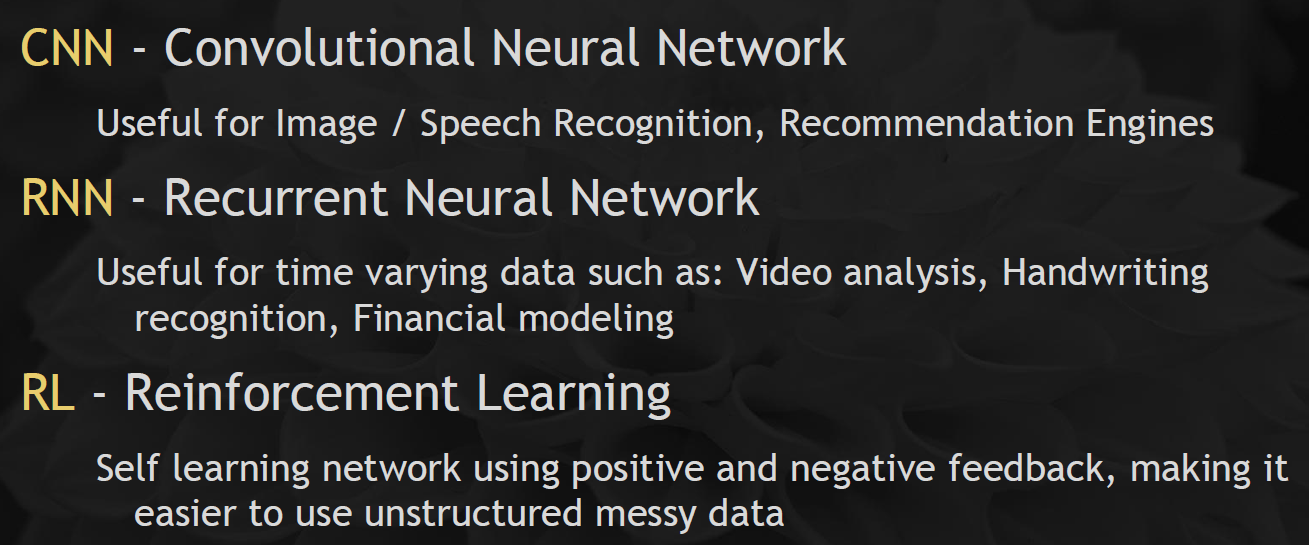

Deep neural networks have recently emerged as a competitive differentiator for organizations hoping to make sense of big data. Deep learning is proving to work especially well on problems such as image, speech, and facial recognition; handwriting analysis; classification; and recommendation engines. When implemented on fast GPU clusters, the deep learning approach makes it possible to crack what would otherwise be intractable computational problems.

But these are still very early days for deep learning. Much of the software that promises to automate the management of deep learning approaches is fairly immature, and the human expertise needed to build custom deep learning systems is extremely hard to find. This presents a business opportunity for the folks at minds.ai (pronounced “mind’s eye”).

“The only people who have this are Google and Baidu,” says Steve Kuo, the director of business development and a co-founder of minds.ai. “Anybody else who has it homegrown isn’t letting it out because it’s a competitive advantage for them.”

Minds.ai hopes to level the playing field by essentially giving anybody access to the type of GPU-powered neural network that Google and Baidu are using. The company’s AWS-like offering eliminates the need for clients to build and configure their own mini-supercomputer to train the algorithms, which can take six months to a year.

And thanks to some “secret sauce” in the minds.ai framework, algorithms will train up to four times faster on the minds.ai network compared to using the standard tech stack, including Nvidia (NASDAQ: NVDA) GPUs, CUDA drivers, and the Caffe neural network software framework.

“We’ve been able to beat Caffe’s performance by a factor of four, meaning a job that took you a month to train will take you one on our platform,” Kuo says. “So a certain problem that takes a client 12 hours, we can knock it down to three, allowing them to do three or four revisions.”

The minds.ai cloud framework is a combination of proprietary software and industry-standard hardware. The first iteration of the cluster—which should be available for select beta customers to train upon in early 2016—will feature a single X86 node attacked to seven NVidia GeForce GTX TITAN X graphics cards. Each GPU card delivers 7 Teraflops of computational power, giving it potent number-crunching capabilities for tackling tough data problems.

“We’ve spent the last few months cherry picking all the best high-performance products we could find off the shelf, assembling them in a way that’s going to benefit everything that our software is going to do,” Kuo says. “Our core IP lies in the software, how to parallelize any problem that we have thrown at us.”

Customers won’t have to learn how to use minds.ai’s framework or interface with it in any way. Instead, clients simply submit their neural network definitions via Caffe. After uploading their data to the minds.ai cluster, they can run their neural networks on the cluster, iterating as needed until the algorithms are fully trained.

Once the algorithm is fully trained, the client is free to deploy it as needed, whether it’s a recommendation system for a social media site or a facial recognition app for a smartphone app. Minds.ai has nothing to do with the actual application of algorithms; it’s focused purely on the training and re-training of algorithms (the company will cache customers’ data so they don’t have to re-upload it every time they re-train).

The plan calls for the cluster to scale up to 28, 50, or perhaps even 100 GPU nodes connected via InfiniBand, which would be a sizable supercomputer in recent years. A GPU cluster of that size should be able to handle just about any deep learning problem customers can throw at it, the company says.

Maintaining performance as the cluster grows should not be a problem, says minds.ai co-founder and CEO Sumit Sanyal. “We developed a way to scale this up almost arbitrarily and maintain linear performance,” says Sanyal, an HPC expert who has worked at Fairchild Semiconductdor and Broadcom. “As we add more GPUs, we get a bigger speedup.”

The company came together when Sanyal started talking with co-founder Tijmen Tieleman, a deep learning expert and PhD holder who worked in Geoffrey Hinton’s lab at the University of Toronto. The two learned from each other and decided to start a deep learning company. The plan initially called for the company, which was called Nomizo at the time, to deploy an integrated deep learning appliance running atop a custom-built ASIC or FPGA. Eventually, the plan morphed to providing deep learning as a service. Customers will be billed hourly.

The company counts its HPC expertise as an advantage. Among the experts working at the company are Ross Walker, a research professor in Molecular Dynamics at the UCSD Supercomputing Center; Jeroen Bédorf, who has a PhD computational astrophysics; Edwin Van Der Helm, who has a PhD in computational astrophysics; and Ted Merrill, a veteran embedded systems guru.

If you’d like to be considered for the minds.ai beta, you can contact the company through its website: minds.ai.

Related Items:

Cloud-based ‘Deep’ Learning Tool Explains Predictions

Nvidia Sets Deep Learning Loose with Embeddable GPU

Machine Learning Tool Seeks to Automate Data Science