Inside Yellow Pages’ SQL-on-Hadoop Journey

Like many national companies, scale is important for Yellow Pages, or YP as the company is now known. So when the Georgia-based local marketing firm set out to find a suitable SQL engine to deliver real-time analytics atop its 1,000-node Hadoop cluster, performance at scale was a prime directive.

It wasn’t long ago that the yellow pages were the go-to directory for Americans. You could find them in every household and in public phone booths (remember those?) Today, most directory services have migrated to the Web, and YP Holdings, the parent company of Yellow Pages, has kept up with the times.

Every month YP Holdings attracts about 70 million unique users to its YP.com website or its mobile apps. In addition to directory look-ups and advertising, the company serves local maps, hosts a cheap gas finder, and serves information about local restaurants and movies.

As you might expect, YP captures data from those 70 million sessions and uses analytics to optimize the experience of visitors to its Web and mobile properties. Because of the scale of the system—it captures more than 3.5 billion events per day—the company uses Hadoop as a staging ground.

Kinder, Gentler ETL

YP currently uses MapReduce-based ETL routines to hammer that data into something more manageable and suitable for its data warehouse, which is based on Vertica, the massively parallel processing (MPP) database from Hewlett-Packard. The company wants to move beyond MapReduce to something that’s faster, says Bill Theisinger, vice president of engineering for YP’s Platform Data Services group.

“I’m very interested in trying to move toward something that doesn’t look like MapReduce,” Theisinger tells Datanami. “I think that MapReduce is a little bit slow and kludgey. It’s run its course. We’re seeing that it’s a little too slow in some regards for what we’re trying to do.”

The company is looking to SQL on Hadoop tools to give it a processing edge within its 5 PB cluster, which is based on Cloudera‘s CDH5. This would enable the company to do more processing of the user behavior data as it sits on HDFS before moving the best summary data to the separate Vertica cluster for reporting and ad hoc analytics.

This solution is focused on YP’s internal decision making, not its advertising business. “If we release a product on any of our consumer sites or any apps, we can collect feedback on how that product feature is performing very quickly without having to build an architecture around it or instrument anything,” he says. “We can just simply use [a SQL on Hadoop solution] to query the data and be able to get the results…pretty instantaneously, if not within minutes or an hour.”

SQL Hadoop Showdown

The hunt for a SQL on Hadoop solution would not replace its Vertica MPP database, but instead would make it easier to access less commonly used data, Theisinger says.

“While we collect a tremendous amount of data on a daily basis, we don’t necessarily find all that data valuable on a given day,” he says. “Like traffic quality. Sometimes we filter traffic quality from the reports…but sometimes they want to do analyses of traffic quality. Because there’s not a lot of utility for storing that data within the warehousing platform, we’ll keep it in HDFS.”

“While we collect a tremendous amount of data on a daily basis, we don’t necessarily find all that data valuable on a given day,” he says. “Like traffic quality. Sometimes we filter traffic quality from the reports…but sometimes they want to do analyses of traffic quality. Because there’s not a lot of utility for storing that data within the warehousing platform, we’ll keep it in HDFS.”

Theisinger is holding a bake-off between two SQL on Hadoop contestants: Cloudera’s Impala and HP’s Vertica SQL on Hadoop. While he acknowledges that YP has been a happy Vertica customer for some time, he says he’s open to other approaches, including Impala. “I’m tool agnostic,” he says. “I just want to find the right tool for the job.”

It’s all about delivering fast access to data. “We want to be able to have a tool to allow analysts to go to our Hadoop infrastructure and run a very fast query engine against the data to get results within minutes, rather than having it chug through the data in a slower way,” he says.

Based on Theisinger’s comments, Vertica SQL on Hadoop—a version of the MPP database that’s been ported to run natively on YARN and HDFS–is the early favorite. “Vertica SQL on Hadoop is extremely promising in terms of what we can provide to users,” he says. “It’s performing exceptional well.”

File Format Daze

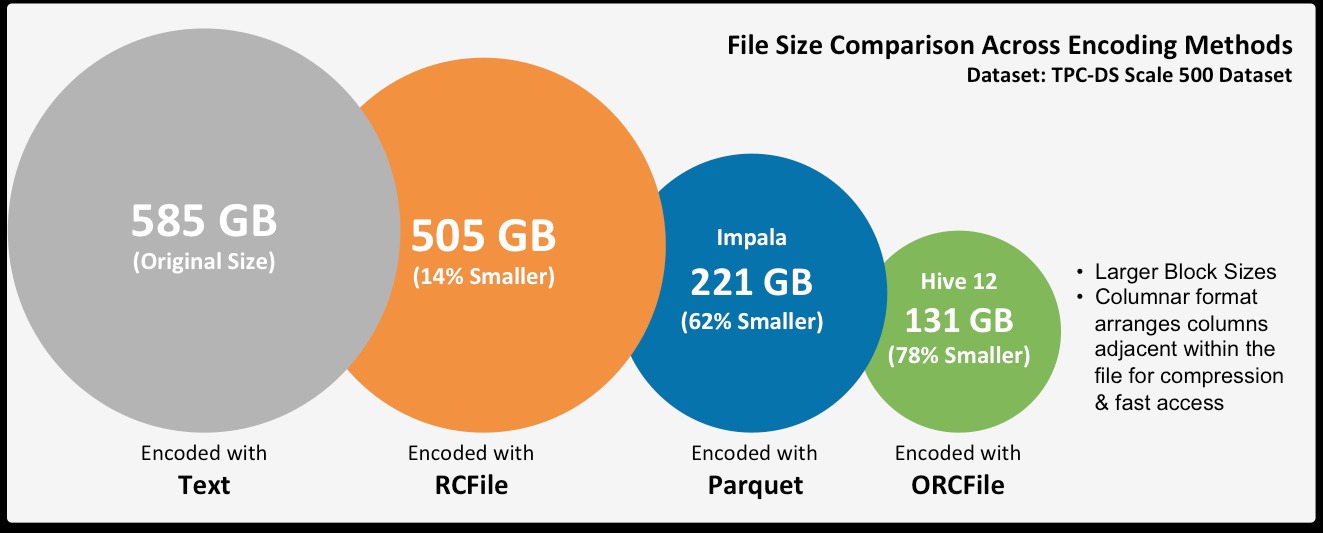

The returns are not all in, and YP continues to test both SQL on Hadoop solutions. But according to Theisinger, a big reason for Vertica’s early lead has to do the file formats used by the two solutions. Namely, Cloudera stores its data in Parquet, whereas Vertica SQL on Hadoop uses the Optimized Row Columnar (ORC) format.

Comparison of common Hadoop file formats, courtesy of Datametrics

Theisinger is leaning toward standardizing on ORC as a file format over Parquet. “We’re capturing a lot of data in Hadoop and HBase, so a lot of data resides in HDFS,” he explains. “And we can store that data in ORC far more performant than any other formats we’ve used in the past, like sequence files for example.”

The ORC format gives YP more options for access the HDFS files data from different tools, including natively accessing ORC data as an external table from the Vertica MPP database. Hive also supports ORC, which meshes well with its Hive activity.

At the end of the day, Theisinger would like to standardize on a single file format, and ORC appears to be leading that race.

“We’ve been balancing file formats for different tools for quite some time and I would love to avoid having to do that in the future,” he says. “If we were to deploy multiple tools in this environment, we’d need to do a lot of data conversations. It would be great to avoid doing that if we can.”

Related Items:

How Advances in SQL on Hadoop Are Democratizing Big Data—Part 1

How Advances in SQL on Hadoop Are Democratizing Big Data–Part 2