Five Ways Big Genomic Data Is Making Us Healthier

We’ve come a long way since the human genome was first decoded 13 years ago. Thanks to continued advances in high-performance computing and distributed data analytics, doctors and computer scientists are finding innovative ways to use genetic data to improve our health.

The economics of genetic testing are creating a flood of genomic data. The Human Genome Project took over a decade and cost $3 billion, but today a whole-genome test from Illumina can be had in a matter of weeks for $1,000. While storing these test results (a whole genome is about 250 GB) is a big data exercise in itself, it’s what you do with that data that counts. Here are five ways genetic data is being put to use:

-

Genetic Counseling? There’s an API for That

We all know that DNA plays a big role in whom we become. But in the real world, it’s the interplay between our genetics and our environment that truly defines us. Accurately breaking these two components down and differentiating “nature versus nurture” is not easy, but that’s essentially what a genetic health software company called Basehealth is trying to do, at least for a few dozen diseases.

Basehealth came out of stealth a year ago with a software platform called Genophen that’s designed to do the hard work of deciphering the various risk factors that come into play for certain diseases, such as type 2 diabetes, heart disease, depression, and various forms of cancer. Mixing one’s genetic data with real-world data describing other factors, such as the patient’s diet, exercise, weight, and lifestyle, provides a better description of someone’s risk of contracting a given disease, and a better basis for creating a solid plan to avoid it, than genomic data by itself.

When Basehealth launched its MongoDB- and R-based application a year ago, it figured individual healthcare practices would use it as a standalone health portal to provide patients with counseling and “what-if” style analysis similar to online retirement calculators. But since then, the Redwood City, California company has realized that enabling simpler access to its software is a better approach, so yesterday it announced that Genophen is accessible over the Web via API.

“A lot of folks in the market already had systems of their own,” says Basehealth CTO Prakash Menon. “They work with EHRs [electronic health records] and patient portals. People kept coming back to us and saying, ‘This is all really good, but we’d like to integrate this stuff that you’ve done into our own platforms.’”

The new REST API that Basehealth is exposing lets subscribers take advantage of the research into medical literature that Basehealth has done. Using natural language processing (NLP) algorithms, the company has essentially quantified risk factors for 40-some diseases and conditions. Those factors are used to create models that allow doctors to predict health outcomes based on a person’s combination of genes and environmental factors.
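As a rough illustration of how genetic and environmental risk factors might be folded into a single estimate, the sketch below scales a baseline odds by one odds ratio per factor. This is not Genophen’s model, which the article does not describe. The function name, factor names, odds ratios, and baseline risk are all invented for the example, and treating the factors as independent is a simplifying assumption.

```python
def combined_risk(baseline_risk, odds_ratios):
    """Combine a baseline probability with per-factor odds ratios.

    Assumes the factors act independently, which real models rarely do.
    """
    if not 0 < baseline_risk < 1:
        raise ValueError("baseline_risk must be between 0 and 1")
    odds = baseline_risk / (1 - baseline_risk)  # probability -> odds
    for ratio in odds_ratios.values():
        odds *= ratio                           # apply each factor in turn
    return odds / (1 + odds)                    # odds -> probability


# Hypothetical patient profile: one genetic factor, two lifestyle factors.
profile = {"risk_allele": 1.4, "sedentary_lifestyle": 1.3, "high_bmi": 1.6}

# "What-if" scenario: the patient starts exercising, so that factor is neutral.
what_if = {**profile, "sedentary_lifestyle": 1.0}

current = combined_risk(0.10, profile)
improved = combined_risk(0.10, what_if)
print(f"current risk: {current:.1%}, with exercise: {improved:.1%}")
```

The “what-if” comparison mirrors the retirement-calculator style of analysis mentioned earlier: the genetic factor stays fixed while a modifiable factor is changed to see how the estimate moves.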

The APIs will enable Genophen to plug into the emerging virtual world of medical data services. “Physicians are building their own portals that bring all of the data they have for their patients,” Menon tells Datanami. “A lot of people have genetic information on 23andMe or Ancestry.com for instance, and they want to bring that data. They get information from their own EHRs, from patient devices like Fitbits, and put together a profile. Then they call us for an assessment and they use the assessment to drive their engagements with their patients.”

-

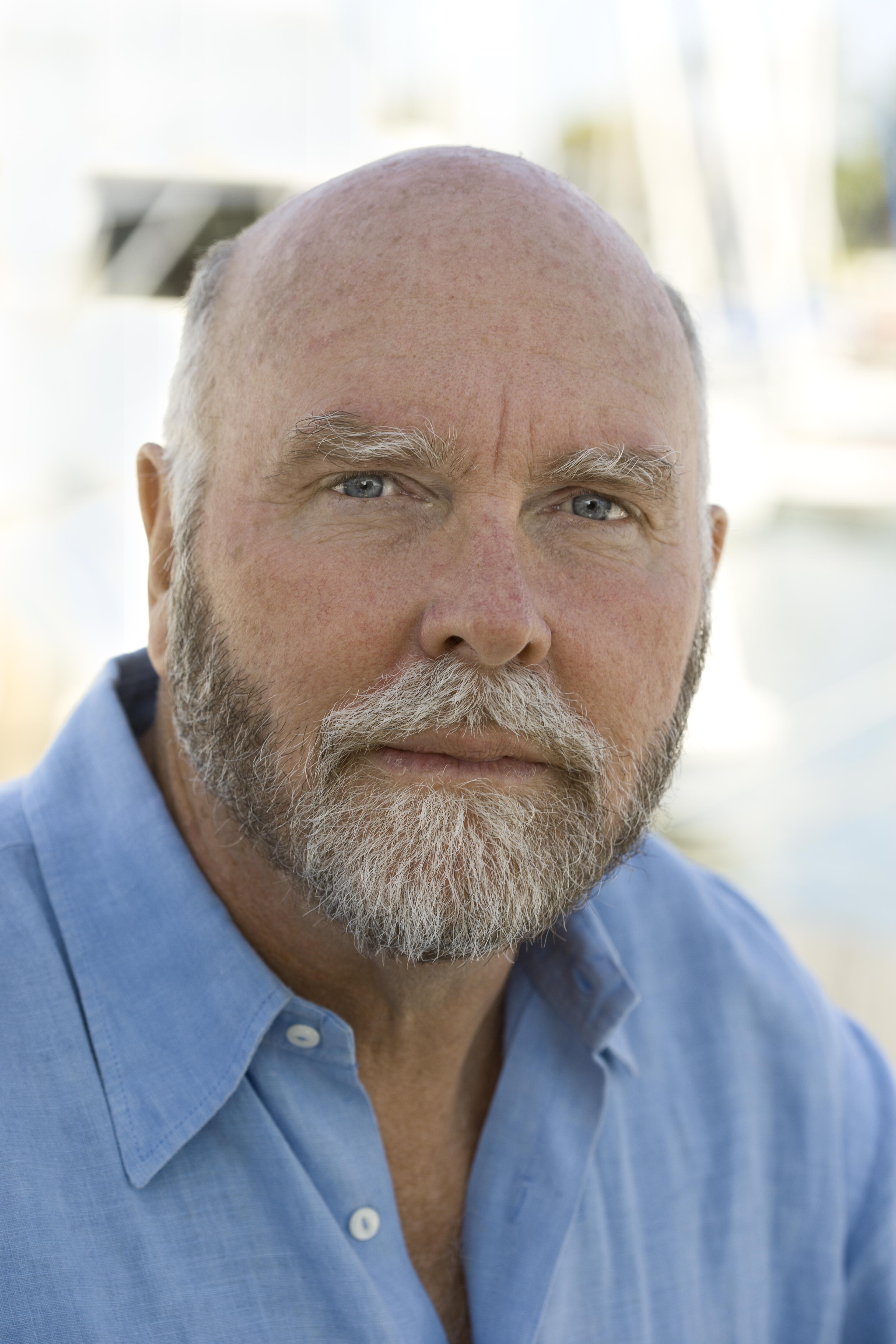

Craig Venter’s Giant Gene Database

While the National Institutes of Health was spending billions of dollars on the Human Genome Project, former NIH biologist J. Craig Venter launched his own parallel effort at Celera Genomics, taking a different approach and spending just hundreds of millions. Eventually, the two camps combined efforts and shared in the glory (which was even more special for Venter, who provided the DNA that was first decoded).

Now Venter is trying to take genomics to the next level with his latest venture, Human Longevity Inc. The San Diego, California-based company has already bought two of Illumina’s next-gen genetic sequencing systems, the HiSeq X Ten, and could buy several more in its bid to sequence 100,000 genomes per year.

Along with the genotype data from the Illumina sequencers, HLI also will be collecting phenotype data, or information about how the genes are expressed in human physiology and bio-chemistry.

Armed with these data sets, HLI aims to create the largest human gene-phenotype database in the world. It plans to use this data to identify relevant patterns that could be used to create novel drugs and therapies that extend human life and slow the process of biological decline.

While HLI will be taking and processing DNA samples, Venter considers HLI at heart to be a “data analysis group,” he said in a November story in The Huffington Post. The size of its HLI Knowledgebase may be huge, but software algorithms can help make the data manageable, much like a picture can be compressed, he said.

HLI will be employing the latest machine learning and artificial intelligence techniques to tease knowledge from the huge gene-phenotype database. “Teams are developing and applying novel statistical genetic, bioinformatics, and data mining algorithms to identify these patterns,” the company says on its website. “These will be implemented on novel computing architectures in partnership with leading computing vendors and centers such as the San Diego Supercomputer Center.”

-

A Pipeline for Diagnosing Undiagnosed Diseases

Approximately 25 to 30 million Americans suffer from rare diseases, which are defined as those that affect 200,000 people or fewer. Not all of them have a genetic component, but a good number of them are believed to be hereditary. The NIH is seeking to find cures for some of these by studying genomics through its Undiagnosed Diseases Program (UDP).

Assisting the UDP with its goal is Appistry, a St. Louis, Missouri company that develops high-performance big data solutions. The company helped the program launch a genetic analysis pipeline that allows the UDP to compare genomes within a family to identify changes that may be causing disease. By using a form of genetic triangulation, the UDP Integrated Collaboration System (UDPICS) is able to discern where genetic variations from a parent to a child may be causing disease.

“For an individual with an unknown disease, we need to identify which genetic change is the cause,” says William A. Gahl, the doctor who ran the UDP program at the NIH before recently joining Appistry. “Current methods compare an individual’s genome to a generic reference genome, which may differ significantly from the individual’s. That creates an unnecessarily large number of possible genetic changes to pursue. Determining which changes are relevant is time-consuming and computationally intensive.”
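The idea of comparing genomes within a family rather than against a generic reference can be sketched with simple set operations. The example below is not the UDP pipeline; it is a minimal illustration that assumes each family member’s variants have already been called and reduced to (chromosome, position, reference, alternate) tuples. The `Variant` type, the function name, and the sample data are hypothetical, and real analyses also have to handle zygosity, inheritance models, and sequencing error.

```python
from typing import NamedTuple


class Variant(NamedTuple):
    chrom: str
    pos: int
    ref: str
    alt: str


def partition_child_variants(child, mother, father):
    """Split a child's variants into de novo and inherited sets."""
    parental = mother | father
    de_novo = child - parental     # seen in the child but in neither parent
    inherited = child & parental   # shared with at least one parent
    return de_novo, inherited


# Hypothetical variant calls for a mother, father, and child.
mother = {Variant("1", 1000, "A", "G"), Variant("2", 500, "C", "T")}
father = {Variant("1", 1000, "A", "G"), Variant("7", 42, "G", "A")}
child = {
    Variant("1", 1000, "A", "G"),
    Variant("7", 42, "G", "A"),
    Variant("X", 99, "T", "C"),
}

de_novo, inherited = partition_child_variants(child, mother, father)
print("de novo candidates:", sorted(de_novo))
print("inherited:", sorted(inherited))
```

Filtering against the parents shrinks the candidate list to variants that are new in the child or that can be traced to a specific parent, which is the kind of narrowing Gahl describes.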

So far, the pipeline has helped the UDP process genetic data from about 250 patients. What’s more, in late April, the program was awarded a Best Practices Award from Bio-IT World. According to Appistry, Bio-IT World’s editorial director Allison Proffitt called the system “orders of magnitude more valuable than Craig Venter’s genome.”

-

Parallel Genomic Sequencing on Hadoop

With its HiSeq X Ten sequencers, San Diego-based Illumina seems to have a lock on the mechanical devices used to decode human genes, at least for now. But when it comes to the software used to analyze the data that comes out of those machines, there’s been a flurry of activity and progress from many institutions.

As it turns out, Apache Hadoop is playing in the genetic analysis game too. The University of North Carolina (UNC) at Chapel Hill is running a 50-node Hadoop cluster to process the genetic data coming out of their Illumina machines. According to this story on the Intel website, the parallelism of Hadoop matches perfectly to the deep analysis of genetic data that UNC demands for both research and clinical healthcare settings.

“The Hadoop system allows us to perform very custom analysis that you wouldn’t find in a traditional business intelligence tool or that would work in a SQL relational type of structure,” writes Charles Schmitt, director of informatics and data sciences at UNC’s Renaissance Computing Institute (RENCI). “Our analyses amend well to a MapReduce structure. The other issue is that tests with databases that use extract, transform, load (ETL) take an incredibly long time with that much data. With Hadoop there’s no ETL; we just add a file into the system.”
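To show what a “MapReduce structure” looks like for genomic records, here is a small in-process imitation of the map, shuffle, and reduce phases that counts variants per chromosome. It does not use Hadoop and is not UNC’s code; the tab-separated record layout and all function names are assumptions made for the example.

```python
from collections import defaultdict
from itertools import chain


def map_record(line):
    """Map step: emit a (chromosome, 1) pair for one variant record."""
    chrom = line.split("\t")[0]
    yield chrom, 1


def shuffle(pairs):
    """Shuffle step: group emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(key, values):
    """Reduce step: sum the values collected for one key."""
    return key, sum(values)


# Hypothetical variant records: chromosome, position, reference, alternate.
records = [
    "chr1\t1000\tA\tG",
    "chr1\t2000\tC\tT",
    "chr7\t42\tG\tA",
]

mapped = chain.from_iterable(map_record(r) for r in records)
counts = dict(reduce_counts(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Because each record is mapped independently and each key is reduced independently, both phases can be spread across many nodes, and the raw files are read as they are with no separate load step.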

The group is analyzing about 30 genomes a week, according to Schmitt, and has between 200 and 300 TB of genomic data stored in an EMC Isilon object storage system. Along with Hadoop are several other critical components of the workflow, including Enterprise iRODS grid software for managing the data, and the Secure Medical Workspace system for securing patient data.

-

Saving Kids from Genetic Disease

It’s estimated that one in 30 people have a genetic disease of some sort. Hopefully, it’s just a mild form of asthma that’s merely an inconvenience for the carrier, but unfortunately, there are a lot more nasty genetic diseases circulating in human DNA.

Considering that we have identified the genes responsible for only about 5,000 out of the 8,000 known genetic diseases, there’s a lot of work left to be done. One medical practice that’s on the frontline of this work is the Children’s Mercy Hospital of Kansas City.

Shane Corder, a senior HPC system engineer at Children’s Mercy, shared his experiences with pediatric genomics during a keynote address at the Leverage Big Data conference in Florida this March. According to Corder, the hospital is gearing up to take advantage of the fast Illumina sequencers to help newborns who are suffering from genetic diseases.

“There are some fairly exciting new technological advances that we’re hoping to implement here in the center that will eventually let us go sub-24 hours on a full genome,” Corder said. Currently, the hospital’s fastest sequencing technology takes about 50 hours to get a diagnosis.

“When a child is waiting for a diagnosis, a lot of times the disease lays waste to their body or their mind,” Corder said. “With quicker diagnoses and treatment, that can ultimately change the child’s life forever.”
