Don’t Overlook the Operationalization of Big Data, Pivotal Says

You may use Hadoop to suck up all kinds of data, and you may even analyze some of it there. But any insights you glean should not exist in a vacuum. To succeed in this big data world, you must put your insights into use—that is, you must operationalize them–and that sometimes requires a different set of skills and technologies outside of traditional Hadoop.

Hadoop may be the poster child for big data, but it’s not the whole story. While organizations are increasingly using Hadoop as a data lake to store all their data, that’s just the beginning of what’s possible. Even Cloudera, the Hadoop distributor that first elucidated the “enterprise data hub” strategy nearly a year ago, recognized that it can’t go it entirely alone, which is why it partnered with MongoDB earlier this year.

“We’re at the cusp of a tectonic shift in how organizations manage data,” Mongo vice president of business development Matt Asay said at the time. “It’s such a big opportunity it’s frankly far too big for any one company.”

The folks at Pivotal might not totally agree with that assessment. While Cloudera and Mongo are working on connectors and joint solutions, the EMC spinoff–which owns its own in-memory, NoSQL database called GemFire–is looking to provide an all-in-one, soup-to-nuts big data solution.

Getting the operational aspect correct is a critical but often overlooked component of having a successful big data strategy, says Sudhir Menon, the director of R&D for GemFire, an established database that’s geared toward running large and distributed production applications, such as the airline reservation system for Southwest Airlines (an actual customer).

“Just storing the data does nothing for you,” Menon tells Datanami. “Using operational data stores to actually become more intelligent and to make the applications that use the stores more intelligent, is the defining legacy of what we’re calling big data.”

Today, there’s often a gap of weeks to months between when the insights are gleaned–often in Hadoop or an MPP warehouse such as GreenPlum–and when those insights are pushed out toward the front-line applications that customers interact with. “Closing that loop between getting insights and operationalizing those to actually build more intelligent applications is really what Pivotal is all about,” Menon says.

Today, Pivotal unveiled a major release of GemFire, which is essentially a distributed, in-memory key-value store that supports documents and objects. With GemFire version 8, Pivotal made three main enhancements around scalability, reliability, and programmability.

On the scalability front, support for the Snappy compression algorithm will shrink data sizes in GemFire by 50 to 70 percent, depending on the data type, Menon says. “It actually makes the system a lot more maintainable, a lot more manageable,” he says. “You can now run a bigger data farm with a fewer set of nodes. It makes it easy to manage, monitoring, provision, plan etc.”

GemFire, which has been in development for more than 10 years and has more than 1,000 customers around the world, has already seen some big deployments. Several customers support 400- to 500-node clusters that are geographically distributed. As an in-memory system, the total amount of data may not exceed 30 TB, but that is sufficient to quality for “big data” in a transactional setting, Menon syas.

As transaction-oriented database, GemFire is already known for providing a high degree of reliability and data integrity. With version 8, Pivotal is shrinking the amount of downtime you can expect during planned upgrades and maintenance, as well as minimizing unplanned downtime through new “self-healing” capabilities.

“If a switch were to go down,” Menon says, “there would be no need for anybody to  manually intervene. GemFire would recognize this…The process would go to a quiet mode, and when it came back up, it would recover from the other nodes the data changes that had happened and would start functionality at full capacity.”

manually intervene. GemFire would recognize this…The process would go to a quiet mode, and when it came back up, it would recover from the other nodes the data changes that had happened and would start functionality at full capacity.”

On the programmability front, Pivotal is exposing a REST API for GemFire, making it easier for developers to incorporate the database into their applications. It’s also offering supporting Ruby and Python as programming languages, and supporting the data type JSON, which will enable GemFire to be the backend supporting a new class of modern, Web-based applications.

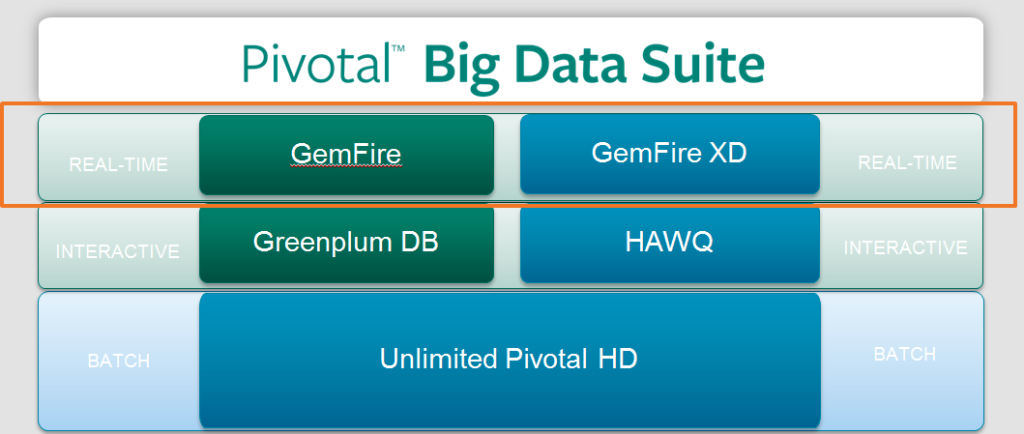

As a component of Pivotal’s Big Data Suite, GemFire is counted on to capitalize on the insights delivered by other components, whether it’s the GreenPlum data warehouse, the HAWQ interactive SQL layer, or a MapReduce job running on Pivotal HD, its Hadoop distribution. The enhancements in version 8 will allow customers to do more with big data.

For example, a hedge fund could use GemFire to improve how it calculates risk across its entire portfolio. “These calculations used to be done at end of trading data. Then Wall Street started moving to intraday data management,” Menon says. “Now with something like this you can actually run and precompute and do your risk management not just intraday, but it will be done multiple times per day, which gives you a sustainable business advantage when you’re trying to hedge your position.”

While Pivotal’s pure-play competitors in the Hadoop, NoSQL, and MPP data warehouse spaces are busy building bridges to complete the virtuous cycle of big data development, Pivotal seems quite content to go it alone, at least from a technology point of view. That can be a gamble in the rapidly evolving big data space. But considering the backing that Pivotal has from industry giants EMC and General Electric, it might be a bet that pays off nicely.

Related Items:

Pivotal: Say No to Hadoop ‘Tax’ with Per-Core Pricing

Pivotal Refreshes Hadoop Offering, Adds In-Memory Processing

Pivotal Launches With $105m Investment From GE