Big Data Challenges in Social Sciences & Humanities Research

One commonly mentioned benefit of the study of history is that we may gain insight into the thoughts and behavior of our ancestors. Learning where we came from can help us better prepare for where we are going. At the launch of this age of Big Data, we are only now well positioned to accomplish that ambition.

Armed with Big Data Analytics, we can improve the well-being of ourselves and our offspring, particularly in Social Sciences & Humanities Research. In this story, we’ll look closely into the topic to see if there are any unique challenges facing social scientists and humanists in Big Data Analytics, whether they can be addressed, and how other professions and disciplines may be enlisted to help.

Nature of Systems in Society

In an article previously published in Datanami (Interplay between Research, Big Data & HPC), the author asserted that complex systems ranging between the two extreme worlds of subatomic systems and the cosmos in its entirety all exhibit emergent properties that are difficult to comprehend using a single first principle.

Correspondingly outcomes are also difficult to predict with a traditional reductionist approach when performing research. To gain the most meaningful insight into such complex systems calls for the use of large scale and high performance computing (HPC) incorporating multiple principles and the ability to traverse a graph of vectors. Additionally, the author asserted that the most complicated example of an emergent system is the human consciousness. All social phenomena exhibit properties that essentially emerge from various interactions between a large numbers of human brains. Social scientists and humanists will need to leverage HPC to process the Big Data generated from these complex, intricate social phenomena or risk publishing findings from a statistical sample that has become insignificant in the era of Big Data.

There are a number of essential elements in HPC solutions. These include system hardware and software, data management middleware, visual/numerical analytic tools and other advanced information technologies. There is also critical human capital and skills required to take full advantage of these highly sophisticated HPC solutions and maximized the potential for discovery inherent in any given line of investigation. These elements contribute to the Big Data challenges in modern Social Sciences & Humanities Research (SS&H).

Human Capital Challenges in SS&H Research Computing

There are two types of highly skilled professionals needed by the research community in order to take full advantage of Big Data HPC infrastructure. They are process-focused analysts and data-focused scientists. Process-focused analysts focus on providing services to integrate data from all processes in a research workflow end to end. These are computing system professionals who help build and support the entire data-centric computing workflow for specific research projects. Data-focused scientists focus on leveraging these data processing services to gain scientific insight.

The digital revolution has parallels to the Industrial Revolution in the 19th century. Where the Industrial Revolution introduced tools that greatly improved the efficiency of tasks previously performed by the human body, the digital revolution incorporates tools that greatly improve the efficiency of tasks performed by the human brain. However, there is a critical distinction between these two types of tools. Today’s digital tooling has advanced to a stage where it can itself originate interaction with the human mind, e.g. the advent of cognitive processing technology. IT can now serve as an active collaborator providing a researcher chooses to interact with it instead of simply using IT to speed up calculations.

A significant recent example is shown by medical doctors at the Memorial Sloan-Kettering Cancer Center in New York and the way they are interacting with IBM’s Watson system (famed for winning the TV Jeopardy game) and its large cancer disease database in diagnosing cancer.

Data-focused scientists represent a new generation of scientist who can exploit the full potential of digital analytic tools to greatly benefit his or her research endeavors. Historically, they were cultivated out of the natural science research community, e.g. high energy physics, climate science and astrophysics, and more recently, from the medical science research community, e.g. genomics, proteomics, system biology, and various translational medical research disciplines.

For those research domains, the Big Data era started decades ago when they started deploying high throughput data collection devices in their research projects and data-focused scientists in those domains are more numerous than in other disciplines, notably SS&H.

One of the critical success factors in research is the ability of asking the “right question.” There are two contexts for the right question. One is the domain specific context. Simply put, top-notch researchers with deep insight in the specific research field are most likely able to ask the right questions. The other context is research-tool related. Whether the researcher is asking the right question depends on whether what they’re asking can practically be obtained using the tools at their disposal, including large data sets.

Data-focused scientists have the ability to make requests to Big Data analytic tools that fully exploit the capabilities of those tools. The prerequisite is that data-focused scientists must have a solid knowledge of the capabilities of the Big Data analytic tools available.

This assertion is supported by an analogy to physics research. Prior to Einstein’s introduction of special relativity, all physicists requested three-dimensional information from their research instruments as they neither realized that the physical world had the fourth dimension nor knew that their instruments had the potential to extract information from a four dimensional world. A similar situation would appear to exist in the SS&H research community as community members in general are not as savvy with big data analytic tools as researchers in, say, natural sciences and engineering.

In recent years, IT data analytic service companies have promoted many customer success stories for engagements using Big Data analytics tools. It would appear that there is an effective pool of Big Data talent in the social media and commercial domains. But that talent pool has a very narrow focus. Analysts there only ask “right questions” on how to help a commercial concern generate profits, by anticipating customer’s buying preferences, creating good shopping experiences for new customers, recommending proactive measures for high profit sourcing, or for purpose of retaining customers.

Like virtually anything with a narrow focus, it has limited value to the society as a whole. While we can to a certain degree leverage the private sector skills, a coordinated effort between the SS&H research community and government research funding agencies is required to create a similar talent pool for research.

IT Challenge in SS&H Research Computing – Big Data Analytic Tools

Jean-Baptiste Michel of “Culturomics” fame stretches the importance of tools as devices from which leaps in understanding can be made. He made the analogy of the microscope and the telescope in natural sciences with Big Data Analytics and calls it a “cultural telescope” with its lens focused on the social structure of humanity.

There is a well-known set of commercially available data analytic software such as SPSS and SAS, and their open source sidekicks. They don’t need further coverage here. It is more interesting to examine newly developed Big Data Analytic tools that can help gain deep insight from complex systems in our society. That is, systems with many components each interacts with many others and when viewed in their connected entirety, collective properties emerge. Complex systems are basically networked systems of systems and can be represented well by nodes and edges in graphs.

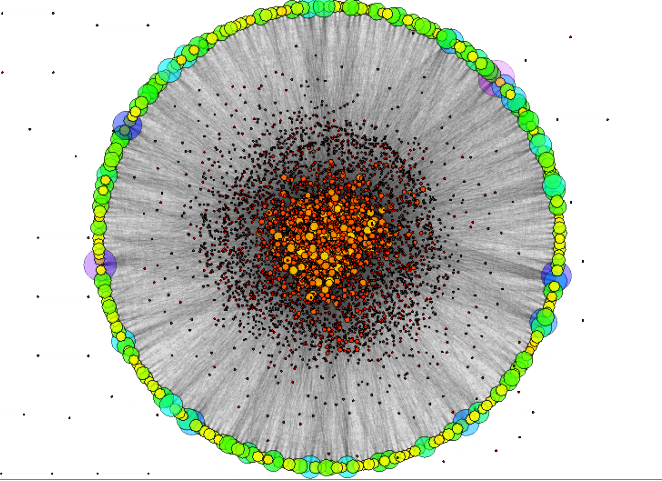

In recent years, sophisticated graph-based network visualization tools were developed to help analyze high dimension network systems in translational medical research. For example, using visualization tool such as NAViGaTOR to present the entire set of data in protein-protein interaction (PPIs) network in cancer research, researchers can now gain new in-sight otherwise inaccessible from traditional computational and statistical analysis.

There are lots of network systems in our human society similar in complexity to PPIs. The main point to take away from this discussion is that social scientists can gain sometimes surprising insights from social Big Data by interacting with a visualization research tool such as NAViGaTOR.

The degree of interaction depends on both the capabilities of the tool and the practice of the researcher, for instance his or her habit of interacting with technology. Visualization technology is well established and while we should continue to develop more sophisticated use of visualization tools, there are many other new data analytic technology came in the last decade. Many of these new tools are called “cognitive processing” technologies.

This year, Gartner published a provocative Maverick Research report on warning businesses to start adapting the new technology (that they call “smart machines”) or they will not be competitive in as short a time as three years from now. Meanwhile, Google has been busy pushing forward with a number of Artificial Intelligence projects for quite some time and even scooped a Canadian expert in the field, Geoff Hinton from Canadian Institute for Advanced Research (CIFAR) (although he is still a part time professor at the University of Toronto).

IBM is another driver of this type of technology. It recently spent $1 billion to establish the Watson business division. Instead of offering a standalone language-based cognitive processing capability on unstructured data with its Watson system, it also has an ambitious plan to integrate this technology with traditional data analytic technologies such as information management, stream computing, predictive analysis and others under a solution service offering called Watson Foundations. These are all about artificial intelligence, machine learning, adaptive systems, expert systems, neural networks, pattern discovery, probabilistic planning; and many more capabilities that could only be found in our brain in the past.

Two of our five senses are key to scientific research and our understanding of this world–sight and sound. Visualization technology enhances what we can see. And many cognitive processing technologies interact with our speech and hearing. For example, Watson’s natural language processing capability. In addition, cognitive processing technologies such as neural networks and machine learning augment our learning and discovery experience, performing even more effectively as a collaborator to researchers than visualization technology. These capabilities are particularly useful to social scientists and humanists as their research targets are highly dynamic emergent systems. Being able to fully leverage these new technologies is critical to the success of Social Sciences & Humanities Research in this new age of Big Data.

It can also be advantageous to identify analytic tools that address specific challenges in Social Sciences & Humanities Research presented by the Big Data dimension.

The era of Big Data began with the advancement of high throughput instruments used to collect data in natural sciences (and later, life sciences) a few decades ago. Those data are by and large in numerical format and static in nature. Dynamic images from astronomy and medical science are a significant exception. In those days, Big Data was only characterized by its volume, i.e. “big” in size.

With the popularity of social networking and advanced digital sensors being deployed to literally everywhere, Big Data–in particular, that with social content–takes on varied other dimensions. It may be an unstructured heap, or consist of multiple integrated media streams. And it can also be generated in a dynamic fashion, such as the Canadian federal election results. These start steaming across the continent when the last polling station closes in British Columbia.

Finally, Big Data can have a high degree of uncertainty. In particular, data originated from responses based on human perception, such as opinion surveys. In some cases, the data may be outright false as opposed to inaccurate. Taken together these considerations are known as the 4V’s of modern Big Data – Volume, Variety, Velocity and Veracity.

Modern big data analytic tools are being developed to address challenges from these data dimensions. For example, the Geo+Social Pattern Analysis work done on inner city Vancouver, Canada by Rob Feick (University of Waterloo) and Colin Robertson (Wilfrid Laurier University). They turn “features” in geotagged photographs from the Flickr API into a geographic database of the city to study its physical and social landscapes such as its population growth, cultural & social diversity, land use transformation and high density residential development (you can read more about it here).

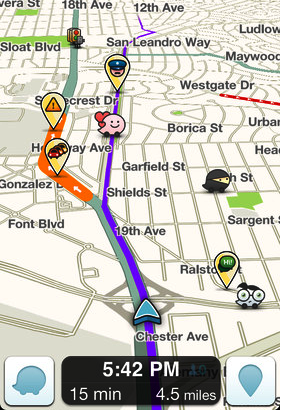

On steaming data, WAZE, one of the world’s largest community-based GPS applications provides users real-time traffic information and route advice by analyzing speed of participating commuter cars. This data is integrated and enhanced with other real-time data. Establishing veracity of Big Data arguably presents the biggest challenge of all. Cognitive processing technology has barely started to address this big data dimension and it is in this way technology intersects with epistemology, a major focus of philosophy and one of the original Humanities.

All these advancements in Big Data analytics technology bring us back to the issue of human capital challenge mentioned in the last section. While there is no need to educate social scientists and humanists (or for that matter, any scientists) in the technical detail and inner workings of these tools, it is absolutely critical to introduce to them its capabilities and ability to trigger their imagination, assisting them in asking the “right questions.”

IT Challenge in SS&H Research Computing–Fully Integrated Workflow System Environment

A common characteristic of technologies described in the previous section is that they are all components of a fully integrated workflow environment in any Big Data research endeavor. All scientists need an integrated system to do research computing. This means that they need to build a system with an end to end scientific research workflow built-in before they can start computing. (The author also referred to this requirement in his previous Datanami article.)

A scientific research computing workflow consists of basic tasks such as data access, a variety of analysis on single or multiple datasets, visualization/interpretation and finally discovery and reporting. Physicists, chemists, and engineers have been used to building systems like this by themselves ever since they started practicing research computing decades ago. Medical researchers got around this by collaborating with computer scientists over the past 15 years. The author witnessed and participated in such collaborations. It was often painful and slow. One interesting result out of that painful experience is that many computer scientists changed their career and became biologists or even physicians. It might just be a little bit too demanding to ask computer scientists to turn themselves into artists but a new opportunity is arising for HPC support professional to take on the challenge of helping Social Scientists and Humanists.

This is a new role that we termed process-focused analyst in the human capital challenge section. It is important to appreciate the increasing complexity arising from the onslaught of Big Data from various “-omics” disciplines in medical research. With the large volume and large number of different types of data, the data access task alone has to effectively address a combination of complicated issues such as security/privacy, curation, and federation, among others. For any given research project, it demands a unique integration of a set of otherwise common data management tools based on the project’s use case. A similar situation exists for the data analytic task. In other words, workflow integration for Big Data research is a case base and technology/skill rich exercise. As mentioned in the author’s previous Datanami article, this can be a very rewarding task and offer tremendous career opportunities. In particular, young HPC support professionals should give it serious consideration and be actively encouraged to pursue it.

About the author: Dominic Lam has a Ph.D. in Condensed Matter Physics  from the University of Toronto. He is a consultant on business (funding and collaboration) and technology issues on High Performance Research Computing. Prior to establishing his consulting practice, he had been responsible for HPC business and solution development for IBM Canada for the past 12 years. In that capacity, he worked with IBM Research and the Canadian research community (both academia and industries) to facilitate collaboration in joint projects on various scientific, engineering, social and economical subjects which make extensive use of information system technology. His understanding of the domestic funding challenges has made him a valuable part of the alliances of Canadian researchers and HPC support professionals. Dominic can be reached at [email protected]

from the University of Toronto. He is a consultant on business (funding and collaboration) and technology issues on High Performance Research Computing. Prior to establishing his consulting practice, he had been responsible for HPC business and solution development for IBM Canada for the past 12 years. In that capacity, he worked with IBM Research and the Canadian research community (both academia and industries) to facilitate collaboration in joint projects on various scientific, engineering, social and economical subjects which make extensive use of information system technology. His understanding of the domestic funding challenges has made him a valuable part of the alliances of Canadian researchers and HPC support professionals. Dominic can be reached at [email protected]

Related Items:

Interplay between Research, Big Data & HPC