Data Virtualization and Big Data Stacks—Three Common Use Cases

There is a critical need among businesses today for a platform that enables them to access any kind of data from anywhere it lives without necessarily moving it to a central location like a data warehouse. In fact, every new innovation in technology or business is driven by better use of increasingly complex and disparate data. Data virtualization represents a straightforward way to deal with the complexity, heterogeneity, and volume of information coming at us, while meeting the needs of the business community for agility and near real-time information.

Simply put, data virtualization (DV) is the shortest path between the need for data and its fulfillment. Unlike other integration technologies, it lets the data stay wherever it already lives while giving users integrated access to it, shortening the time it takes to make the data actionable. It enables business leaders, data scientists, innovators, and operational decision makers to “do their thing” to achieve better outcomes, all without having to worry about how to access, store, convert, integrate, copy, move, secure, and distribute data upfront and on an ongoing basis.

While data virtualization brings a lot of capability, it is very important to recognize that it is not a direct replacement for either traditional replication-based integration tools such as ETL, ELT, data replication, etc. or message-driven integration hubs like ESB, Cloud and web services hubs. Often, data virtualization is used either between sources and these integration tools or between them and applications to provide faster time-to-solution and more flexibility. However, for rapid prototyping and for some use cases it can also eliminate the need for heavier integration altogether.

Most companies now get the value proposition of data virtualization and have confirmed their intentions to add this capability. However, in order to drive successful DV adoption in different areas, clear data virtualization patterns are needed and these are emerging and evolving in waves. For example, informational/intelligence use patterns such as agile business intelligence, information as a service (self-service BI), and logical data warehousing originally set off the trend and are becoming some of the mainstream use cases of data virtualization.

Additionally, operational/transactional patterns such as provisioning data services for agile application development (mobile and cloud), single customer view applications, and abstraction layers for application migration and modernization are boosting data virtualization. Some enterprises are also using DV to make better use of unstructured web data, public data such as data.gov, and social data.

Big data and real-time analytics are also now fanning the flames. Although the initial focus of big data has been on specific storage/processing platforms like Hadoop to analyze large or high-velocity data at significantly lower cost, a true big data analytics platform architecture needs data virtualization to complete the information cycle of data access and results dissemination. Data virtualization not only takes in disparate real-time, unstructured, and streaming data efficiently; it also disseminates actionable analytics to the right applications or users in real time, in a hybrid analytical environment that combines NoSQL and traditional data platforms.

Best Practices for Data Virtualization

The following are some best practice patterns for using data virtualization as part of a big data analytics platform architecture. Note that these patterns are typically supported only on best-of-breed data virtualization platforms, and not in basic data federation tools or federation components bundled with BI or ETL tools.

Pattern 1. NoSQL as an Analytical Engine/Logical Data Warehouse Pattern

On the north side of big data, data virtualization can access analytic result sets from big data/NoSQL stores, expose them through virtual views, combine them with other views, and present them as Web services or as SQL-queryable views for BI tools. This can be done in two ways:

- Access (asynchronous) – Sometimes the analytics are performed independently/on schedule and output results are stored inside the big data stack for access.

- Trigger (synchronous) – Other times data virtualization calls the big data stack to trigger MapReduce or another analytical engine to produce the results. This assumes that the data needed for the analytics is already in the big data stack. The most effective DV solutions launch a MapReduce job in the Hadoop cluster; the job writes its results to an HDFS file, which the platform then accesses and processes so that the results can be queried using SQL from any BI tool or application.
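The two modes can be sketched as follows. This is a minimal illustration, not any DV product's API: `read_results` and `trigger_and_read` are hypothetical names, the result file is assumed to be tab-separated text on a local path rather than HDFS, and a small Python one-liner stands in for the real job (on a cluster the command would more likely be a `hadoop jar` invocation).

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def read_results(result_path):
    """Access mode: read rows that an earlier, independent job already wrote."""
    lines = Path(result_path).read_text().splitlines()
    return [line.split("\t") for line in lines if line]


def trigger_and_read(command, result_path):
    """Trigger mode: run the job, wait for it to finish, then read its output."""
    # check=True raises CalledProcessError if the job exits with a non-zero status.
    subprocess.run(command, check=True)
    return read_results(result_path)


def demo():
    """Run a stand-in job that writes one result row, then read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        result_path = str(Path(tmp) / "part-00000")
        # Stand-in for the analytical job (assumption): writes "KS<TAB>42".
        script = "import sys; open(sys.argv[1], 'w').write('KS\\t42\\n')"
        command = [sys.executable, "-c", script, result_path]
        return trigger_and_read(command, result_path)
```

In the access mode only `read_results` is needed, because the scheduled job has already produced the file; the trigger mode pays the job's latency on every call in exchange for fresh results.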

The command to issue is supplied at execution time as a parameter in the WHERE clause of the query. This also enables the execution of Pig and Hadoop streaming jobs. Because the mechanism is generic, any existing shell script can be issued, including those that invoke analytic libraries such as Mahout. For accessing Hadoop results, DV provides connectors for Hive, HBase, and Impala; for HDFS key-value files, both text and binary (MapFile, SequenceFile, etc.), read through the Hadoop API from either HDFS or Amazon S3; and for Avro files on HDFS or other file systems. DV can also read Hadoop files using WebHDFS or HttpFS, which provide HTTP REST access to HDFS.
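As a rough illustration of the REST route, the sketch below builds the URL that a WebHDFS `OPEN` request would use to read a result file. The helper name `webhdfs_open_url`, the host, the port, and the file path are placeholders chosen for the example; only the `/webhdfs/v1` prefix and the `op=OPEN` and `user.name` query parameters come from the WebHDFS REST API.

```python
from urllib.parse import quote, urlencode


def webhdfs_open_url(host, port, hdfs_path, user=None):
    """Build the URL for a WebHDFS OPEN (read file) request."""
    if not hdfs_path.startswith("/"):
        raise ValueError("hdfs_path must be an absolute HDFS path")
    params = {"op": "OPEN"}
    if user:
        # Simple (non-Kerberos) authentication passes the user as a parameter.
        params["user.name"] = user
    # quote() keeps "/" intact and percent-encodes spaces and other unsafe characters.
    return f"http://{host}:{port}/webhdfs/v1{quote(hdfs_path)}?{urlencode(params)}"
```

A client would issue an HTTP GET against this URL and follow the redirect to the node that serves the file contents.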

It is also important that the DV solution can deal with hierarchical data structures. This helps it represent Avro schemas, HBase tables that do not have a fixed schema, MongoDB collections (including documents serialized as binary JSON), and similar sources.
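One simple way to picture this is flattening nested documents into rows with a shared set of columns. The sketch below is an assumption about how such a mapping could look, not a description of any particular DV engine; `flatten` and `to_rows` are hypothetical helpers, nested keys become dotted column names, and fields missing from a document become `None`.

```python
def flatten(doc, prefix=""):
    """Turn a nested dict into a flat dict with dotted keys."""
    flat = {}
    for key, value in doc.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        else:
            # Lists and scalars are kept as-is in a single column.
            flat[name] = value
    return flat


def to_rows(docs):
    """Project schema-less documents onto the union of their columns."""
    flat_docs = [flatten(d) for d in docs]
    columns = sorted({col for d in flat_docs for col in d})
    rows = [[d.get(col) for col in columns] for d in flat_docs]
    return columns, rows
```

For example, `to_rows([{"id": 1, "geo": {"lat": 1.0}}, {"id": 2}])` yields the columns `["geo.lat", "id"]` and the rows `[[1.0, 1], [None, 2]]`.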

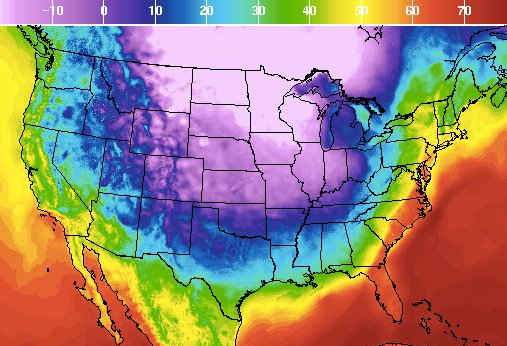

Use Case: The Climate Corporation aims to help farmers around the world protect and improve their farming operations with uniquely powerful software, hardware and insurance products. Using data virtualization, the company is able to ask questions like “what is the likelihood of the arctic vortex creating a freeze in Kansas and which policy holders will be affected and how much will be our payout?”

By combining complex weather pattern analytics with CRM data on customers and ERP data on insurance policies, the Climate Corporation can answer highly complex questions. It brings together hyper-local weather monitoring, agronomic modeling, and high-resolution weather simulations to help farmers improve profitability through better-informed operating and financing decisions, along with an insurance offering that pays farmers automatically for bad weather that may affect their profits.

Pattern 2. Hybrid DV-ETL for Big Data

On the south side of big data, DV acts as an abstraction layer that converts a myriad of data types, from arcane to common and from structured to unstructured (social media, log files, machine data, text, web scraping, etc.), and loads them into big data stores, effectively acting as ETL. In this case, Sqoop issues SQL queries on virtual views through the JDBC driver, and the results are saved in a directory on HDFS or into Hive and/or HBase tables.
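A Sqoop import of this kind might be assembled as in the sketch below. The JDBC URL, driver class, and view name are hypothetical placeholders, since they depend on the DV product in use; the flags themselves (`--connect`, `--driver`, `--username`, `--query`, `--target-dir`, `--num-mappers`) are standard Sqoop import arguments, and a free-form `--query` must contain the `$CONDITIONS` token.

```python
import shlex


def sqoop_import_args(jdbc_url, driver_class, view, target_dir, username):
    """Build the argument list for a Sqoop import from a DV virtual view."""
    # Reject anything that is not a plain (optionally dotted) identifier.
    if not all(part.isidentifier() for part in view.split(".")):
        raise ValueError(f"unexpected view name: {view!r}")
    # Sqoop replaces $CONDITIONS with the predicate for each mapper's slice.
    query = f"SELECT * FROM {view} WHERE $CONDITIONS"
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--driver", driver_class,
        "--username", username,
        "--query", query,
        "--target-dir", target_dir,
        # A single mapper avoids the need for a --split-by column.
        "--num-mappers", "1",
    ]


def as_shell_command(args):
    """Render the argument list as a safely quoted shell command line."""
    return shlex.join(args)
```

Building the command as a list and quoting it only for display keeps the `$CONDITIONS` token from being expanded by a shell before Sqoop sees it.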

Use Case: Navteq, a geo-location content and platform provider, stores POI (points of interest) location data in a hybrid data environment that includes Hadoop and multi-dimensional data warehouses. The basic POI information and map coordinate data is enriched from myriad sources such as reviews, menu/sale items, offers, and social media. The ETL jobs that refresh these data stores can point directly to sources that are stable and structured. For sources that are dynamic, frequently changing, and complex or unstructured, the jobs point instead to the DV platform, which presents them as structured views.

Pattern 3. NoSQL for Cold Data Storage

Like Pattern #1, this is also on the north side of big data, but instead of accessing analytics/results, it accesses the raw data stored in big data/NoSQL. In this pattern the big data store is typically used as a low-cost storage alternative to an RDBMS/DW, and its data is combined with other views and presented through the data virtualization layer. The DV layer adds value by making it easy to access archived and current data through familiar interfaces and BI tools.

Use Case: Telecommunications providers are required by law to retain call detail records (CDRs) and make them available for regulatory or legal discovery purposes. Several of them, including Vodafone and R Cable, now use data virtualization to provide an easy-to-query interface for regulatory compliance by combining CDR and customer data. The CDR logs are rarely accessed, so they are housed in big data stores as cheap storage and are combined with other data, such as customer records, only when needed to keep the company in compliance.
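The idea of one query spanning cold and current data can be imitated with SQLite's `ATTACH`, as in the sketch below. This is only an analogy under stated assumptions: two in-memory SQLite databases stand in for the big data archive and the operational store, and all table names, column names, and sample rows are invented for illustration.

```python
import sqlite3

# Body of the combined "view": cold and current CDRs behind one interface.
CDR_ALL_SQL = """
    SELECT caller, call_date, seconds, 'current' AS tier FROM main.cdr_current
    UNION ALL
    SELECT caller, call_date, seconds, 'cold' AS tier FROM archive.cdr_cold
"""


def build_demo():
    """Create a 'current' store and an attached 'archive' store with sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("ATTACH DATABASE ':memory:' AS archive")
    conn.execute("CREATE TABLE main.cdr_current (caller TEXT, call_date TEXT, seconds INTEGER)")
    conn.execute("CREATE TABLE archive.cdr_cold (caller TEXT, call_date TEXT, seconds INTEGER)")
    conn.execute("INSERT INTO main.cdr_current VALUES ('555-0100', '2014-03-01', 120)")
    conn.executemany(
        "INSERT INTO archive.cdr_cold VALUES (?, ?, ?)",
        [("555-0100", "2009-07-15", 45), ("555-0199", "2008-01-02", 300)],
    )
    return conn


def calls_for(conn, caller):
    """Return (call_date, tier) for one caller across both stores, oldest first."""
    sql = f"SELECT call_date, tier FROM ({CDR_ALL_SQL}) WHERE caller = ? ORDER BY call_date"
    return conn.execute(sql, (caller,)).fetchall()
```

The caller of `calls_for` never needs to know which store a record came from; the `tier` column is included here only to make the origin visible in the example.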

Modern Data Virtualization Platforms

There is much more to modern DV platforms, in terms of both capability and application, than people initially realize. While DV has been compared to earlier forms of data federation, it does much more than real-time distributed query optimization. It is a powerful technology that provides abstraction and decoupling between physical systems and locations on one side and logical data needs on the other. It includes tools for semantic integration of structured through highly unstructured data, and it enables intelligent caching and selective persistence to balance source and application performance. DV platforms can also introduce a layer of security, governance, and data services delivery capabilities that the original sources may lack or that may not match what the intended new applications need.

Today, progressive organizations are adopting a complete data virtualization platform to meet their ongoing data needs. Although they may start by using DV for agile BI, logical data warehousing, or a big data analytics infrastructure, they anticipate future uses such as web and unstructured data, or provisioning mobile and cloud applications with agile RESTful data services. Put simply, these companies look beyond data federation and set out to adopt a flexible information architecture that will keep them ahead of their competitors for the long term.

About the Author: Suresh Chandrasekaran, senior vice president at Denodo Technologies, is responsible for global strategic marketing and growth. Before Denodo, Chandrasekaran served in executive roles as general manager, and VP of product management and marketing at leading Web and enterprise software companies and as a management consultant at Booz Allen & Hamilton. He holds undergraduate degrees from India and an M.B.A. from the University of Michigan.