Solving Big Data Storage at CERN

Not long ago we published an article about the role of tape in the big data era, citing recent announcements from SpectraLogic that position the company for the exabyte era.

For a thirty-year-old company that has watched an endless parade of storage technologies march past, the big data hoopla could certainly be just the right buzzword at just the right time.

Tape has been receiving quite a bit more attention over the last year, in part because companies and research centers require ways to retain data at a low cost while still possessing the ability to quickly spin up needed volumes on the fly for reuse or analysis.

Some have argued that tape is not relevant for many storage and analytics operations, but if some of the company's recent wins are any indication, big tape is finding a big in with data-centric businesses and research centers, and not simply as a “backup and forget” method of socking away massive data sets.

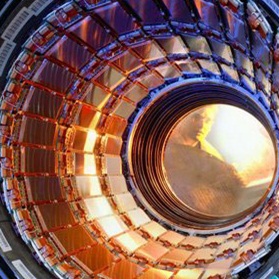

This week SpectraLogic announced that CERN is taking a close look at the company’s T-Series tape library technology. According to the announcement, the research facility installed the exabyte-ready line in July to handle copies of data from the Large Hadron Collider (LHC), and it now plans to migrate the collider data from its T380 tape library to the Spectra T-Finity, using CERN’s Advanced STORage manager (CASTOR) to achieve hierarchical storage management.

To offer a sense of the scale of the data, imagine the work that is conducted on a daily basis at the facility. The world’s largest particle accelerator generates over 25 petabytes of scientific data each year. That data not only needs to be stored for later use; it must also be distributed, in whole or in part, to the various related institutions that carry out their own work on it.
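To put the 25-petabyte figure in more familiar terms, here is a rough back-of-the-envelope sketch in Python. It rests on assumptions that are ours, not CERN's: decimal units (1 PB = 10^15 bytes), a 365-day year, and output spread evenly across the year, which a real accelerator schedule would not match. The function name `average_rates` is purely illustrative.

```python
# Back-of-the-envelope conversion of a yearly data volume into average rates.
# Assumptions (not from CERN): decimal units, a 365-day year, uniform output.
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def average_rates(petabytes_per_year):
    """Return (terabytes per day, gigabytes per second) for a yearly volume."""
    bytes_per_year = petabytes_per_year * 1e15
    bytes_per_day = bytes_per_year / DAYS_PER_YEAR
    tb_per_day = bytes_per_day / 1e12
    gb_per_second = bytes_per_day / SECONDS_PER_DAY / 1e9
    return tb_per_day, gb_per_second


if __name__ == "__main__":
    tb_day, gb_s = average_rates(25)
    print(f"{tb_day:.1f} TB/day, {gb_s:.2f} GB/s")
```

Under those assumptions, 25 petabytes a year averages out to roughly 68 terabytes a day, or a little under 0.8 gigabytes every second. Because the facility generates "over" 25 petabytes, these figures are a floor rather than an estimate of peak load.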

This means that the data the center generates, even if it sits idle for a time, must be quickly available in large volumes for redistribution and for analysis, whether specific elements need to be separated out from other data or the data is analyzed in large chunks.

According to Vladimir Bahyl, who handles the tape environment at CERN, such vast data volumes created a clear need for “a solution that would provide scalability for growth while ensuring the integrity and accessibility of the data.” He says that using CASTOR made the integration of the new T-Series libraries easy, and that the new platform will now allow CERN “to scale both hardware and software to make capacity upgrades quick, seamless and affordable as data sets grow.”