Does InfiniBand Have a Future on Hadoop?

Hadoop was created to run on cheap commodity computers connected by slow Ethernet networks. But as Hadoop clusters get bigger and organizations press the upper limits of performance, they’re finding that specialized gear, such as solid state drives and InfiniBand networks, can pay dividends.

InfiniBand was released to the world in 2000 as a faster networking protocol than TCP/IP, the primary protocol used in Ethernet networks. Thanks to its use of Remote Direct Memory Access (RDMA), InfiniBand allows a computer to directly read and write data to and from a remote computer’s memory, effectively bypassing the operating system and all the overhead and latency that entails.

You can get 40Gb per second of raw capacity out of a Quad Data Rate (QDR) InfiniBand port, which is the most common type of port used today. That’s four times as much bandwidth as a single 10 Gigabit Ethernet (10GbE) port can deliver. As you aggregate links, the absolute speed advantage grows. Of course, users can aggregate Ethernet links too.
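To make the four-to-one ratio concrete, here is a minimal sketch that computes idealized transfer times at raw line rate. The 1 TB payload and the helper name `transfer_seconds` are assumptions chosen for illustration; the calculation ignores encoding and protocol overhead, so real-world numbers will be lower.

```python
def transfer_seconds(payload_bytes: float, link_gbps: float, link_count: int = 1) -> float:
    """Idealized time to move a payload at raw line rate (no protocol overhead)."""
    total_bits_per_second = link_gbps * 1e9 * link_count
    return (payload_bytes * 8) / total_bits_per_second


# Raw port speeds quoted in the article.
TEN_GBE_GBPS = 10.0
QDR_INFINIBAND_GBPS = 40.0

# Hypothetical payload: 1 TB (decimal), chosen only to give round numbers.
PAYLOAD_BYTES = 1e12

ethernet_time = transfer_seconds(PAYLOAD_BYTES, TEN_GBE_GBPS)
infiniband_time = transfer_seconds(PAYLOAD_BYTES, QDR_INFINIBAND_GBPS)

print(f"10GbE:          {ethernet_time:.0f} s")
print(f"InfiniBand QDR: {infiniband_time:.0f} s")
print(f"Ratio:          {ethernet_time / infiniband_time:.1f}x")
```

Passing `link_count=2` models an aggregated pair of identical links under the same idealized assumptions.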

While Ethernet has maintained its dominant position as the protocol of choice for the vast majority of enterprise networks, InfiniBand has made inroads in the HPC market, where its higher speed and lower latency give it an advantage on massively parallel clusters. More than 50 percent of the supercomputers in the latest Top 500 list use InfiniBand, up slightly from the previous year. High-speed traders and others in the financial services industry are also big consumers of InfiniBand ports made by vendors like Mellanox and Intel.

But when it comes to Hadoop, InfiniBand adoption lags behind HPC. There are several reasons for that, including a built-in bias towards Ethernet at most enterprise shops, along with the perception that InfiniBand is exotic and expensive (not always true). Hadoop was developed, after all, by hackers accustomed to cobbling together their own solutions from cheap commodity systems. Ethernet has just always been there, like air. That gives it a lot of momentum that will be difficult to overcome.

While InfiniBand on Hadoop is not mainstream, it is not new either. All of the major Hadoop distributors have partners, such as Hewlett-Packard, IBM, and Dell, who support InfiniBand in their reference implementations. If you’re going the appliance route, as about 20 percent of Hadoop implementers do, you’ll find that Oracle and Teradata both support InfiniBand.

Why Choose InfiniBand

There are some compelling reasons you might consider using InfiniBand over 10GbE. One person with an inside view of InfiniBand-on-Hadoop issues is Dhabaleswar K. (DK) Panda, a professor and Distinguished Scholar at the Computer Science and Engineering school at Ohio State University, and head of its Network-Based Computing Research Group.

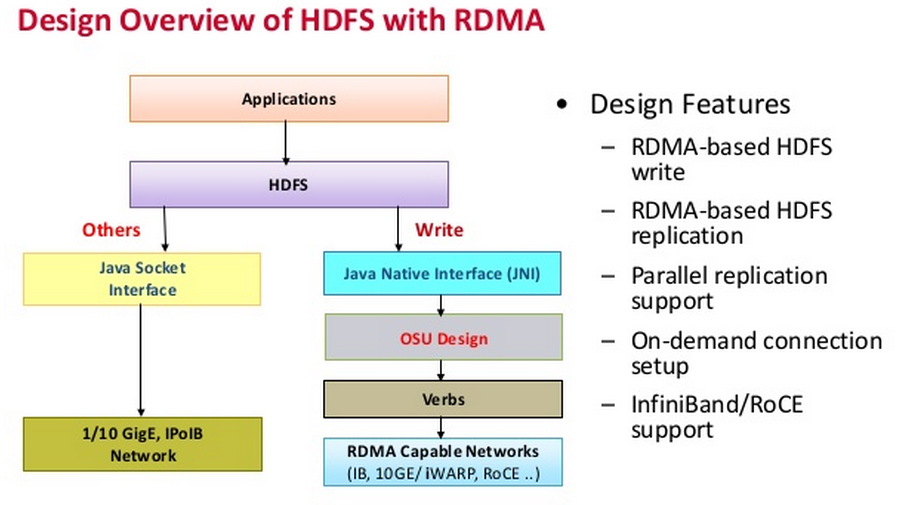

Panda oversees the High-Performance Big Data (HiBD) project at OSU, which develops, ships, and supports a set of libraries for Hadoop versions 1 and 2 (as well as HDFS and MapReduce) that support the native RDMA “verbs” that InfiniBand uses to communicate. Apache Hadoop and Hortonworks’ distribution are currently supported, with a plug-in for Cloudera’s distribution on the way. The group has also written code to support InfiniBand in the Memcached key-value store, and is currently working on libraries supporting Apache Spark and HBase.

Panda, who has been doing research into HPC interconnects for the past 25 years and has worked with InfiniBand since it came out, acknowledges that InfiniBand isn’t widely used in Hadoop, but he expects that to change.

“The HPC field, they jump into technologies quickly. But in the enterprise domain, they have a little bit of lag,” Panda tells Datanami. “On the enterprise side, it’s catching up. So we might have to wait another one or two years to see it more widely used there.”

Since HiBD shipped its first InfiniBand library several years ago, the “stack” has been downloaded more than 11,000 times. More than 120 organizations worldwide are using the libraries, according to the group’s website.

The common thread powering InfiniBand adoption is the desire to obtain maximum scalability and performance and to overcome I/O bottlenecks, he says. “Traditionally [Hadoop] has been designed with Ethernet,” he says. “But even if you go to 10GbE, especially with large data sets, you get choked. This is where the benefits of our designs come in, so that you can actually try to scale your application and get most of the performance and scalability out of them.”

There is a common misconception in the Hadoop community that InfiniBand is too expensive and is generally overkill for clusters of commodity gear. That may be true for smaller setups, Panda says. But for larger clusters, InfiniBand is actually more cost-effective than Ethernet, he claims.

“If you go for a very large system, InfiniBand FDR might be better and more cost effective than 10GbE,” he says. “If you have a four-node or 16-node cluster, you might not see the difference. But if you go to 1,000 nodes, 2,000 nodes, or 4,000 nodes, you’ll see the cost difference.”

Just as a race car is only as fast as its slowest component, Hadoop clusters can be slowed by underperforming parts. “You can have a very good engine, but if you have poor tires, you won’t get the benefits,” he says. “We see that the I/O and the network need to be balanced to get the best performance.”

Hadoop Caveat Emptor

While raw network speed definitely plays a major role in Hadoop performance, there are many other factors to consider, and not all of them are obvious. As usual, the devil is in the details.

Last July, Microsoft and the Barcelona Supercomputing Centre launched Project Aloja to try to set a baseline for Hadoop performance. Project Aloja concluded there were more than 80 tunable parameters in Hadoop that impacted performance, including hardware factors, such as the amount of RAM, storage type, and network speed, as well as software parameters, such as the number of mappers and reducers, HDFS block size, and the size of virtual machines.

The group found that just adding InfiniBand had almost no impact on the performance of Apache Hadoop, as measured in benchmark tests. However, adding InfiniBand and solid state drives (SSDs) together in the same system delivered a 3.5x performance boost compared to using SATA and Gigabit Ethernet. Just adding SSDs while keeping Gigabit Ethernet delivered a 2x advantage, it found.

That jibes with Panda’s experience. “What happens when you use SSDs, of course the I/O becomes faster, so that means you actually need a high-performance network,” he says. “Obviously if you go from 1Gb to 10GbE, you’ll see benefits. But if you use InfiniBand, you’ll see much more benefits….As these technologies mature, you’ll see there will be more pressure on the network. That means the better solutions, like RDMA-based solutions, will work fine on those types of systems.”

Not everybody is sold on InfiniBand. In an informative post to the question-answer site Quora, former Cloudera engineer Eric Sammer, who’s currently the CTO and a co-founder of Rocana, argues in favor of 10GbE.

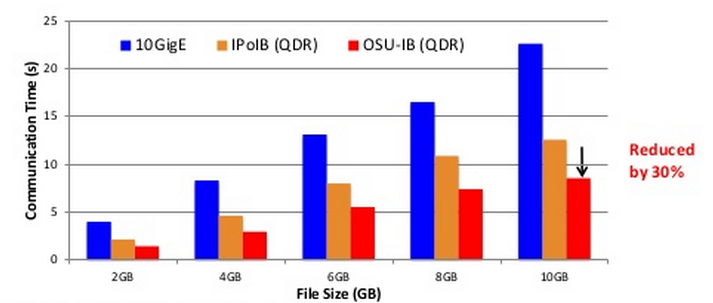

Sammer’s main issue with using InfiniBand is the overhead it incurs while running Internet Protocol over InfiniBand. “The issue here is there’s overhead, just as with most compatibility layers,” he wrote. “In this case, and for various reasons I’m afraid I’d explain poorly, the actual bandwidth winds up being about 25Gb for IP over a 4X QDR 40Gb link.” (To be fair, the libraries that Panda develops at HiBD enable native support for InfiniBand, which eliminates the overhead.)

A comparison of Hadoop performance on 10GbE, IP over InfiniBand, and HiBD’s library for native InfiniBand QDR.

If it were his decision, Sammer would deploy 10GbE, perhaps a bonded pair, in his Hadoop deployment. “The ubiquity of Ethernet is nothing to sneeze at, and with a system such as Hadoop, I’d be more inclined to bank on advancements that continue to improve network locality and reduce shuffles (Cloudera Impala, the upcoming Tez changes in Hive, etc),” he wrote. “I also firmly subscribe to the notion that the same cash could be applied to a few more boxes, and to optimize my data center to that end.”
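The figures in Sammer's argument can be lined up with a short back-of-the-envelope sketch. It uses only the rates quoted above (roughly 25Gb of actual bandwidth for IP over a 40Gb QDR link, and 10Gb per Ethernet port); treating a bonded pair as exactly twice the nominal port rate is a simplifying assumption, and the function names are hypothetical.

```python
# Rates quoted in the article, in Gb per second.
QDR_RAW_GBPS = 40.0        # 4X QDR InfiniBand raw link rate
IPOIB_ACTUAL_GBPS = 25.0   # approximate IP-over-InfiniBand bandwidth per Sammer
TEN_GBE_GBPS = 10.0        # nominal rate of one 10GbE port


def link_efficiency(actual_gbps: float, raw_gbps: float) -> float:
    """Fraction of the raw link rate that is actually delivered."""
    return actual_gbps / raw_gbps


def bonded_nominal_gbps(per_link_gbps: float, link_count: int) -> float:
    """Nominal aggregate rate of bonded links (assumes perfect scaling)."""
    return per_link_gbps * link_count


ipoib_efficiency = link_efficiency(IPOIB_ACTUAL_GBPS, QDR_RAW_GBPS)
bonded_pair_gbps = bonded_nominal_gbps(TEN_GBE_GBPS, 2)

print(f"IPoIB efficiency on QDR:    {ipoib_efficiency:.1%}")
print(f"Bonded 10GbE pair, nominal: {bonded_pair_gbps:.0f} Gb/s")
print(f"IPoIB vs. bonded pair:      {IPOIB_ACTUAL_GBPS / bonded_pair_gbps:.2f}x")
```

On these numbers, IP over InfiniBand gives up more than a third of the raw link rate, which narrows its lead over a bonded 10GbE pair. Native RDMA libraries are meant to avoid that compatibility-layer cost.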

RoCE Comes Out Swinging

InfiniBand is also competing against a new technology that delivers some of the benefits of InfiniBand but over a standard Ethernet network. It’s called RDMA over Converged Ethernet (RoCE) and it delivers faster throughput and lower latencies than traditional Ethernet.

Panda’s HiBD group also developed libraries for supporting RoCE switches and networking gear with Hadoop and Memcached software. Mellanox also supports both RoCE and InfiniBand with its gear.

Whichever interconnect technology a user selects, Panda encourages them to carefully consider the impact that the selection will have. “It’s a question of the [quality of the] switches and the management,” he says. “If an organization feels comfortable and they have a good sys admin who’s familiar with Ethernet, for them RoCE is an easier way. But a lot of organizations have good knowledge of InfiniBand, and those organizations won’t mind whether they use native InfiniBand or use RoCE.”

As data sets grow larger and organizations come under pressure to analyze big data more quickly, they are being forced to build bigger and faster clusters equipped with SSDs and multi-core chips. It seems clear that the RDMA approach (either InfiniBand or RoCE) will eventually need to be adopted by any organization running production big data workloads at scale.
